Alexandre D'Hooge

Unipd

Towards Explainable and Interpretable Musical Difficulty Estimation: A Parameter-efficient Approach

Aug 01, 2024

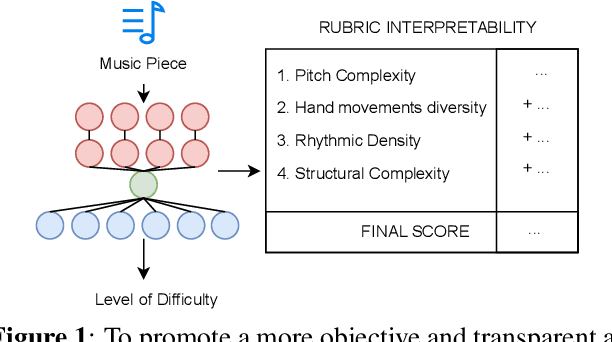

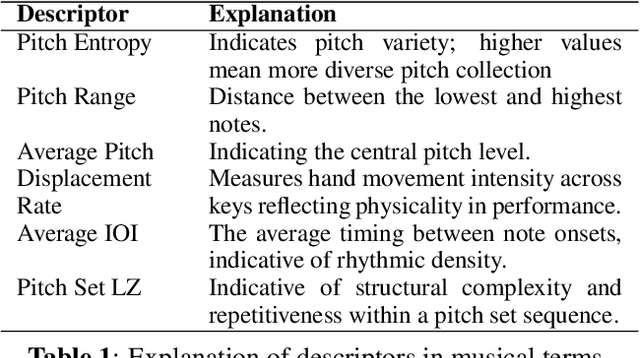

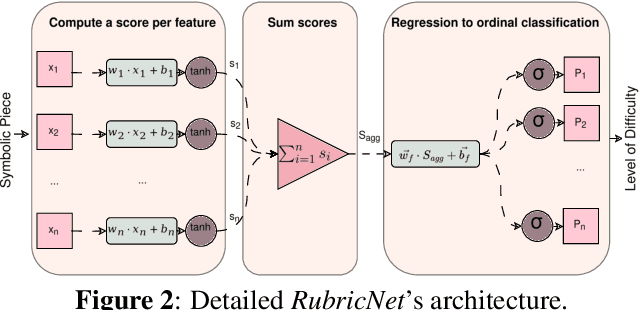

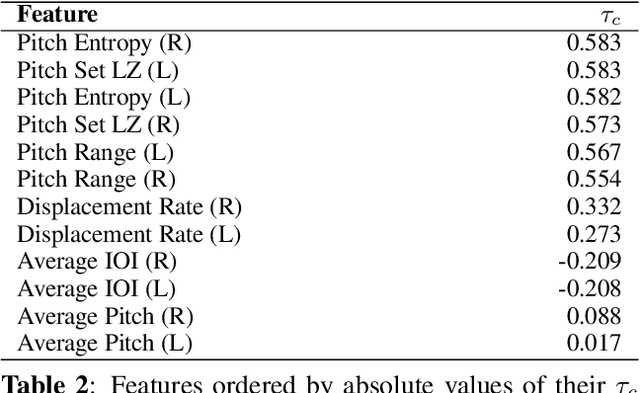

Abstract: Estimating the difficulty of music pieces is important for organizing educational music collections. This process could be partially automated to support educators. Nevertheless, the decisions made by prevalent deep-learning models are hard to understand, which may hinder the acceptance of such technology in music education curricula. Our work employs explainable descriptors for difficulty estimation in symbolic music representations. Furthermore, through a novel parameter-efficient white-box model, we outperform previous efforts while delivering interpretable results. These comprehensible outcomes emulate the functionality of a rubric, a tool widely used in music education. Our approach, evaluated on piano repertoire categorized into 9 classes, achieved 41.4% accuracy independently, with a mean squared error (MSE) of 1.7, showing precise difficulty estimation. Through our baseline, we illustrate how building on top of past research can offer alternatives for music difficulty assessment that are explainable and interpretable. With this, we aim to promote more effective communication between the Music Information Retrieval (MIR) community and the music education community.
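The abstract reports both accuracy and MSE for the 9-class task. A minimal sketch of how the two metrics differ, assuming the grades are encoded as integers 0 to 8 (an assumption; the original labels may use another scale): accuracy only rewards exact matches, while MSE treats the grades as an ordinal scale, so predicting a neighbouring grade costs far less than predicting a distant one. The toy labels are made up for illustration.

```python
from typing import Sequence


def _check(y_true: Sequence[int], y_pred: Sequence[int]) -> None:
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of pieces whose predicted grade equals the reference grade."""
    _check(y_true, y_pred)
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Mean squared grade distance: near-misses cost less than distant errors."""
    _check(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy labels (made up), grades assumed to range over 0..8.
truth = [0, 3, 5, 8]
preds = [0, 4, 5, 6]
print(accuracy(truth, preds))  # 0.5
print(mse(truth, preds))       # 1.25
```

Here two of four pieces are exact hits (accuracy 0.5), and the two misses contribute squared distances of 1 and 4, so the MSE is 1.25.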

From MIDI to Rich Tablatures: an Automatic Generative System incorporating Lead Guitarists' Fingering and Stylistic choices

Jul 12, 2024

Abstract: Although the automatic identification of the optimal fingering for performing melodies on fretted string instruments has already been addressed (at least partially) in the literature, the specific case of lead electric guitar requires a dedicated approach. We propose a system that can generate, from simple MIDI melodies, tablatures enriched with fingerings, articulations, and expressive techniques. The basic fingering is derived by solving a constrained, multi-attribute optimization problem, which determines the best position of the fretting hand, not just the finger used at each moment. Then, articulations and expressive techniques are introduced based on statistical data from the mySongBook corpus, taking into account the most common clichés and biomechanical feasibility. Finally, the output is converted into MusicXML format, which allows for easy visualization and use. The quality of the derived tablatures and the high configurability of the proposed approach can have several impacts, in particular in the fields of instrumental teaching, assisted composition and arranging, and computational models of expressive music performance.
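The abstract describes the fingering step as a constrained, multi-attribute optimization over fretting-hand positions. The sketch below swaps in a much simpler technique, a Viterbi-style dynamic program over (string, fret) candidates, only to show the general shape of such a search. The standard tuning, the 22-fret limit, and both cost functions (`position_cost`, `transition_cost`) are hypothetical choices, not the authors' formulation.

```python
from typing import List, Tuple

Position = Tuple[int, int]  # (string index, fret); string 0 = low E

STANDARD_TUNING = (40, 45, 50, 55, 59, 64)  # open-string MIDI pitches (assumed)
MAX_FRET = 22  # assumed neck length


def candidate_positions(pitch: int) -> List[Position]:
    """All (string, fret) pairs that can sound the given MIDI pitch."""
    return [(s, pitch - open_pitch)
            for s, open_pitch in enumerate(STANDARD_TUNING)
            if 0 <= pitch - open_pitch <= MAX_FRET]


def position_cost(pos: Position) -> float:
    """Hypothetical static cost: mildly prefer positions low on the neck."""
    return 0.1 * pos[1]


def transition_cost(a: Position, b: Position) -> float:
    """Hypothetical movement cost: fret shift plus a penalty per string crossed."""
    return abs(a[1] - b[1]) + 0.5 * abs(a[0] - b[0])


def best_fingering(pitches: List[int]) -> List[Position]:
    """Minimum-cost sequence of positions via dynamic programming (Viterbi)."""
    layers = [candidate_positions(p) for p in pitches]
    if not layers or any(not layer for layer in layers):
        raise ValueError("empty melody or pitch outside the instrument range")

    cost = [position_cost(pos) for pos in layers[0]]
    backpointers: List[List[int]] = []
    for prev, cur in zip(layers, layers[1:]):
        new_cost, pointers = [], []
        for pos in cur:
            # Cheapest predecessor for this candidate position.
            best = min(range(len(prev)),
                       key=lambda i: cost[i] + transition_cost(prev[i], pos))
            pointers.append(best)
            new_cost.append(cost[best] + transition_cost(prev[best], pos)
                            + position_cost(pos))
        cost = new_cost
        backpointers.append(pointers)

    # Backtrack from the cheapest final position.
    idx = min(range(len(cost)), key=cost.__getitem__)
    path = [layers[-1][idx]]
    for layer, pointers in zip(reversed(layers[:-1]), reversed(backpointers)):
        idx = pointers[idx]
        path.append(layer[idx])
    return path[::-1]


print(best_fingering([64, 65, 67]))  # [(5, 0), (5, 1), (5, 3)]
```

For E4, F4, G4 the toy costs favour staying on the high E string (open, fret 1, fret 3). A realistic system would also encode constraints such as finger span and weight several attributes, as the abstract indicates; here everything is folded into two illustrative cost terms.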

Modeling Bends in Popular Music Guitar Tablatures

Aug 22, 2023

Abstract: Tablature notation is widely used in popular music to transcribe and share guitar musical content. As a complement to standard score notation, tablatures transcribe performance gesture information, including finger positions and a variety of guitar-specific playing techniques such as slides, hammer-ons/pull-offs, or bends. This paper focuses on bends, which make it possible to shift the pitch of a note progressively, thereby circumventing the physical limitations of the discrete fretted fingerboard. We propose a set of 25 high-level features, computed for each note of the tablature, to study how bend occurrences can be predicted from their past and future short-term context. Experiments are performed on a corpus of 932 lead guitar tablatures of popular music and show that a decision tree successfully predicts bend occurrences with an F1 score of 0.71 and a limited number of false positive predictions, demonstrating promising applications to assist the arrangement of non-guitar music into guitar tablatures.
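The bend study computes features for each note from its past and future short-term context. The sketch below shows one way such a window could be assembled; the `Note` fields and the handful of features are hypothetical stand-ins, not the 25 features defined in the paper.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Note:
    pitch: int       # MIDI pitch
    string: int      # string index, 0 = low E (assumed convention)
    fret: int
    duration: float  # in beats


def context_features(notes: List[Note], i: int, width: int = 1) -> Dict[str, Optional[float]]:
    """Features for note i plus pitch intervals to up to `width` neighbours per side.

    Neighbours that fall outside the tablature are reported as None.
    """
    note = notes[i]
    feats: Dict[str, Optional[float]] = {
        "fret": note.fret,
        "string": note.string,
        "duration": note.duration,
    }
    for offset in range(-width, width + 1):
        if offset == 0:
            continue
        j = i + offset
        inside = 0 <= j < len(notes)
        feats[f"interval_{offset:+d}"] = notes[j].pitch - note.pitch if inside else None
    return feats


phrase = [Note(64, 5, 0, 1.0), Note(67, 5, 3, 0.5), Note(69, 5, 5, 2.0)]
print(context_features(phrase, 1))
# {'fret': 3, 'string': 5, 'duration': 0.5, 'interval_-1': -3, 'interval_+1': 2}
```

Each such dictionary, flattened into a fixed-length vector, would form one row of training data for a per-note classifier such as the decision tree mentioned in the abstract.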

VaPar Synth -- A Variational Parametric Model for Audio Synthesis

Mar 30, 2020

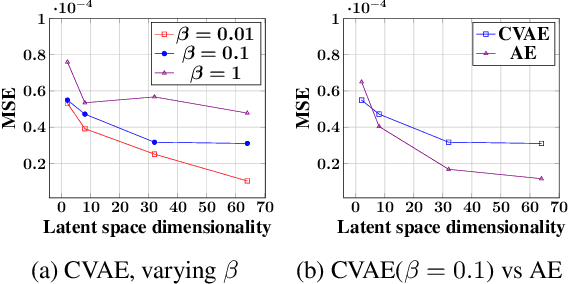

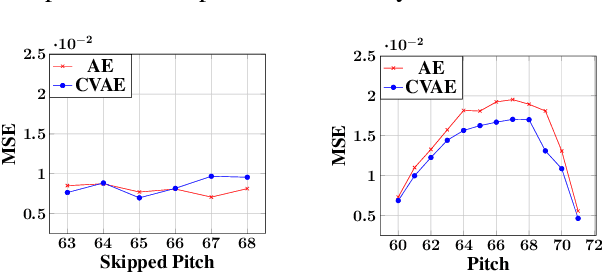

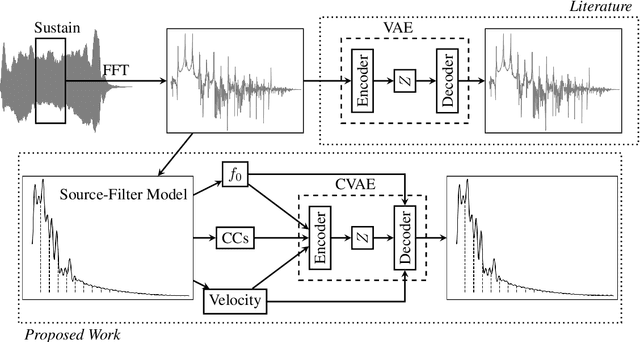

Abstract: With the advent of data-driven statistical modeling and abundant computing power, researchers are turning increasingly to deep learning for audio synthesis. These methods try to model audio signals directly in the time or frequency domain. In the interest of more flexible control over the generated sound, it could be more useful to work with a parametric representation of the signal which corresponds more directly to the musical attributes such as pitch, dynamics and timbre. We present VaPar Synth - a Variational Parametric Synthesizer which utilizes a conditional variational autoencoder (CVAE) trained on a suitable parametric representation. We demonstrate our proposed model's capabilities via the reconstruction and generation of instrumental tones with flexible control over their pitch.
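VaPar Synth is described as a conditional variational autoencoder trained on a parametric representation. The NumPy sketch below shows only the forward pass and the standard CVAE objective (squared reconstruction error plus a KL term) for a toy model with linear encoder and decoder, conditioned on a one-hot vector. The layer sizes, the linear maps, the `TinyCVAE` class, and the one-hot pitch conditioning are all hypothetical; no training loop is included, since that would require automatic differentiation.

```python
import numpy as np


def one_hot(index: int, size: int) -> np.ndarray:
    v = np.zeros(size)
    v[index] = 1.0
    return v


class TinyCVAE:
    """Toy CVAE with linear maps; x is a frame of parametric features, c a condition."""

    def __init__(self, x_dim: int, c_dim: int, z_dim: int, rng: np.random.Generator):
        self.z_dim = z_dim
        self.W_mu = rng.normal(scale=0.1, size=(z_dim, x_dim + c_dim))
        self.W_logvar = rng.normal(scale=0.1, size=(z_dim, x_dim + c_dim))
        self.W_dec = rng.normal(scale=0.1, size=(x_dim, z_dim + c_dim))

    def encode(self, x, c):
        h = np.concatenate([x, c])  # condition the encoder on c
        return self.W_mu @ h, self.W_logvar @ h

    def decode(self, z, c):
        return self.W_dec @ np.concatenate([z, c])  # condition the decoder on c

    def loss(self, x, c, rng):
        mu, logvar = self.encode(x, c)
        # Reparameterization trick: z = mu + sigma * eps, eps ~ N(0, I).
        z = mu + np.exp(0.5 * logvar) * rng.standard_normal(self.z_dim)
        recon = np.sum((x - self.decode(z, c)) ** 2)
        # Closed-form KL(q(z | x, c) || N(0, I)) for a diagonal Gaussian.
        kl = -0.5 * np.sum(1.0 + logvar - mu**2 - np.exp(logvar))
        return recon + kl

    def generate(self, c, rng):
        # Sample the prior and decode with the requested condition (e.g. a chosen pitch).
        return self.decode(rng.standard_normal(self.z_dim), c)


rng = np.random.default_rng(0)
model = TinyCVAE(x_dim=16, c_dim=12, z_dim=4, rng=rng)
frame = rng.normal(size=16)   # stand-in for one frame of parametric features
pitch = one_hot(7, 12)        # hypothetical pitch condition
print(model.loss(frame, pitch, rng))
print(model.generate(pitch, rng).shape)  # (16,)
```

Because the condition enters the decoder directly, generation can hold the latent sample fixed and change only `c`, which is the kind of pitch control the abstract describes.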

Generative Audio Synthesis with a Parametric Model

Nov 15, 2019

Abstract: We use a parametric representation of audio to train a generative model, in the interest of obtaining more flexible control over the generated sound.
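A parametric representation describes sound through attributes such as pitch and per-harmonic amplitudes rather than raw samples. As a hedged illustration, assuming a simple harmonic (additive) model, which is only one possible parametric representation and not necessarily the one used in this work, resynthesis from parameters could look like this (`harmonic_synth` is a hypothetical helper):

```python
import numpy as np


def harmonic_synth(f0: float, amplitudes, duration: float, sr: int = 16000) -> np.ndarray:
    """Sum of sinusoids at integer multiples of f0 (a simple harmonic model).

    amplitudes[k] is the amplitude of harmonic k + 1; harmonics at or above
    the Nyquist frequency (sr / 2) are skipped to avoid aliasing.
    """
    t = np.arange(int(duration * sr)) / sr
    signal = np.zeros_like(t)
    for k, amp in enumerate(amplitudes, start=1):
        freq = k * f0
        if freq >= sr / 2:
            break  # every higher harmonic would also be above Nyquist
        signal += amp * np.sin(2 * np.pi * freq * t)
    return signal


# Same harmonic amplitudes, two pitches: only f0 changes.
timbre = [1.0, 0.5, 0.25]
a4 = harmonic_synth(440.0, timbre, duration=0.5)
e5 = harmonic_synth(659.26, timbre, duration=0.5)
print(a4.shape, e5.shape)  # (8000,) (8000,)
```

Keeping the harmonic amplitudes fixed while changing `f0` is a crude stand-in for timbre, but it shows why a parametric representation makes pitch directly controllable, whereas a raw waveform offers no such handle.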
