Alexandre Boucaud

Opportunities in AI/ML for the Rubin LSST Dark Energy Science Collaboration

Jan 20, 2026

Abstract: The Vera C. Rubin Observatory's Legacy Survey of Space and Time (LSST) will produce unprecedented volumes of heterogeneous astronomical data (images, catalogs, and alerts) that challenge traditional analysis pipelines. The LSST Dark Energy Science Collaboration (DESC) aims to derive robust constraints on dark energy and dark matter from these data, requiring methods that are statistically powerful, scalable, and operationally reliable. Artificial intelligence and machine learning (AI/ML) are already embedded across DESC science workflows, from photometric redshifts and transient classification to weak lensing inference and cosmological simulations. Yet their utility for precision cosmology hinges on trustworthy uncertainty quantification, robustness to covariate shift and model misspecification, and reproducible integration within scientific pipelines. This white paper surveys the current landscape of AI/ML across DESC's primary cosmological probes and cross-cutting analyses, revealing that the same core methodologies and fundamental challenges recur across disparate science cases. Since progress on these cross-cutting challenges would benefit multiple probes simultaneously, we identify key methodological research priorities, including Bayesian inference at scale, physics-informed methods, validation frameworks, and active learning for discovery. With an eye on emerging techniques, we also explore the potential of the latest foundation model methodologies and LLM-driven agentic AI systems to reshape DESC workflows, provided their deployment is coupled with rigorous evaluation and governance. Finally, we discuss critical software, computing, data infrastructure, and human capital requirements for the successful deployment of these new methodologies, and consider associated risks and opportunities for broader coordination with external actors.

Bayesian Deconvolution of Astronomical Images with Diffusion Models: Quantifying Prior-Driven Features in Reconstructions

Nov 28, 2024

Abstract: Deconvolution of astronomical images is a key aspect of recovering the intrinsic properties of celestial objects, especially when considering ground-based observations. This paper explores the use of diffusion models (DMs) and the Diffusion Posterior Sampling (DPS) algorithm to solve this inverse problem. We apply score-based DMs trained on high-resolution cosmological simulations in a Bayesian setting to compute a posterior distribution given the available observations. By considering the redshift and the pixel scale as parameters of our inverse problem, the tool can be easily adapted to any dataset. We test our model on Hyper Suprime-Cam (HSC) data and show that we reach resolutions comparable to those of Hubble Space Telescope (HST) images. Most importantly, we quantify the uncertainty of reconstructions and propose a metric to identify prior-driven features in the reconstructed images, which is key in view of applying these methods for scientific purposes.
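To make the inverse problem concrete, here is a minimal sketch of one ingredient only: the Gaussian log-likelihood of an observation under a blur-plus-noise forward model, and its gradient with respect to the image. The 1D circular convolution, the kernel, and all function names are illustrative assumptions, not the paper's code. In DPS this gradient is taken through the diffusion model's denoised estimate; that step is omitted here.

```python
def convolve(x, kernel):
    """Circular 1D convolution, standing in for blurring by a PSF (assumed model)."""
    n, half = len(x), len(kernel) // 2
    return [sum(k * x[(i - j + half) % n] for j, k in enumerate(kernel))
            for i in range(n)]


def adjoint_convolve(r, kernel):
    """Transpose of `convolve`, needed to back-propagate the residual."""
    n, half = len(r), len(kernel) // 2
    return [sum(k * r[(m + j - half) % n] for j, k in enumerate(kernel))
            for m in range(n)]


def log_likelihood(x, y, kernel, sigma):
    """log p(y | x) up to a constant, for y = convolve(x) + Gaussian noise."""
    model = convolve(x, kernel)
    return -0.5 * sum((yi - mi) ** 2 for yi, mi in zip(y, model)) / sigma ** 2


def likelihood_score(x, y, kernel, sigma):
    """Gradient of log p(y | x) with respect to x: H^T (y - H x) / sigma^2."""
    residual = [yi - mi for yi, mi in zip(y, convolve(x, kernel))]
    return [g / sigma ** 2 for g in adjoint_convolve(residual, kernel)]


if __name__ == "__main__":
    kernel = [0.25, 0.5, 0.25]          # hypothetical normalized PSF
    x = [0.0, 0.2, 1.0, 0.3, 0.0, 0.1]  # current image estimate
    y = [0.1, 0.4, 0.6, 0.4, 0.1, 0.0]  # hypothetical blurred observation
    print(likelihood_score(x, y, kernel, sigma=0.1))
```

A posterior sampler combines this data-fidelity gradient with the prior score supplied by the trained diffusion model; features that persist when the likelihood term is weak are the ones driven mainly by the prior.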

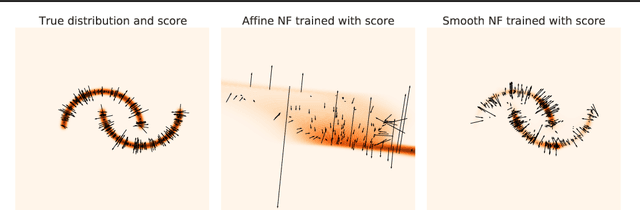

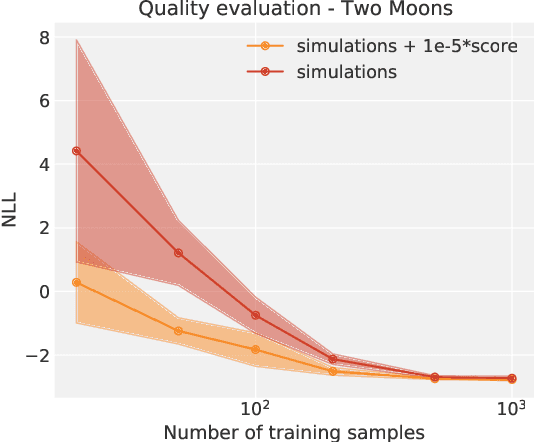

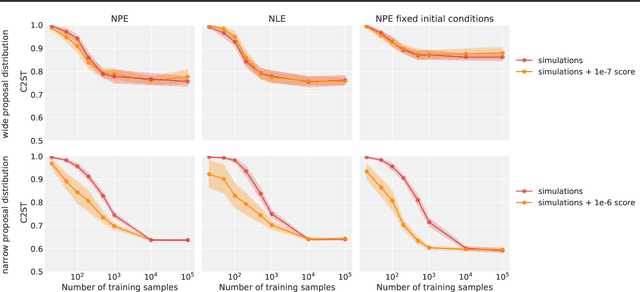

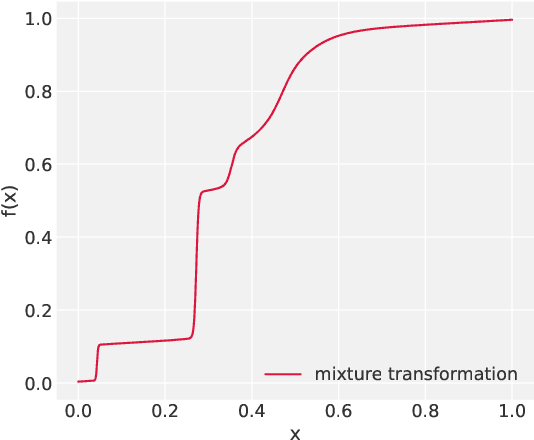

Neural Posterior Estimation with Differentiable Simulators

Jul 12, 2022

Abstract: Simulation-Based Inference (SBI) is a promising Bayesian inference framework that alleviates the need for analytic likelihoods to estimate posterior distributions. Recent advances using neural density estimators in SBI algorithms have demonstrated the ability to achieve high-fidelity posteriors, at the expense of a large number of simulations, which makes their application potentially very time-consuming when using complex physical simulations. In this work we focus on boosting the sample-efficiency of posterior density estimation using the gradients of the simulator. We present a new method to perform Neural Posterior Estimation (NPE) with a differentiable simulator. We demonstrate how gradient information helps constrain the shape of the posterior and improves sample-efficiency.
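As a toy illustration of the gradient information a differentiable simulator can expose, the sketch below uses a hypothetical one-parameter Gaussian simulator (not the one used in the paper) and computes the score of the joint log-density with respect to the parameter. Because log p(θ | x) = log p(x, θ) − log p(x) and the last term does not depend on θ, this joint score equals the posterior score, which is why it carries information about the posterior's shape. All names and the noise level are assumptions.

```python
import math

SIGMA = 0.5  # assumed observation noise of the toy simulator


def simulator_mean(theta):
    """Deterministic part of the toy simulator: x = theta**2 + SIGMA * noise."""
    return theta ** 2


def log_normal(value, mean, std):
    """Log-density of a univariate Gaussian."""
    return (-0.5 * ((value - mean) / std) ** 2
            - math.log(std) - 0.5 * math.log(2.0 * math.pi))


def log_joint(theta, x):
    """log p(x, theta) with a standard normal prior on theta."""
    return log_normal(theta, 0.0, 1.0) + log_normal(x, simulator_mean(theta), SIGMA)


def joint_score(theta, x):
    """Analytic d/dtheta log p(x, theta), using d(theta**2)/dtheta = 2 * theta."""
    prior_term = -theta
    likelihood_term = 2.0 * theta * (x - simulator_mean(theta)) / SIGMA ** 2
    return prior_term + likelihood_term


def finite_difference(theta, x, eps=1e-6):
    """Central difference of log_joint, used only to check the analytic score."""
    return (log_joint(theta + eps, x) - log_joint(theta - eps, x)) / (2.0 * eps)


if __name__ == "__main__":
    theta, x = 0.7, 0.6
    print(joint_score(theta, x), finite_difference(theta, x))
```

In practice an automatic-differentiation framework would supply this derivative for a realistic simulator; the analytic form here only keeps the example dependency-free.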
