Alexandra Alles

In-Vehicle Interface Adaptation to Environment-Induced Cognitive Workload

Oct 20, 2022

Abstract: Many car accidents are caused by human distractions, including cognitive distractions. In-vehicle human-machine interfaces (HMIs) have evolved over the years, providing more and more functions. Interaction with the HMIs can, however, also lead to further distractions and, as a consequence, accidents. To tackle this problem, we propose using adaptive HMIs that change according to the mental workload of the driver. In this work, we present the current status and preliminary results of a user study that uses naturalistic secondary tasks while driving (i.e., the primary task) to understand the effects of one such interface.

What's on your mind? A Mental and Perceptual Load Estimation Framework towards Adaptive In-vehicle Interaction while Driving

Aug 10, 2022

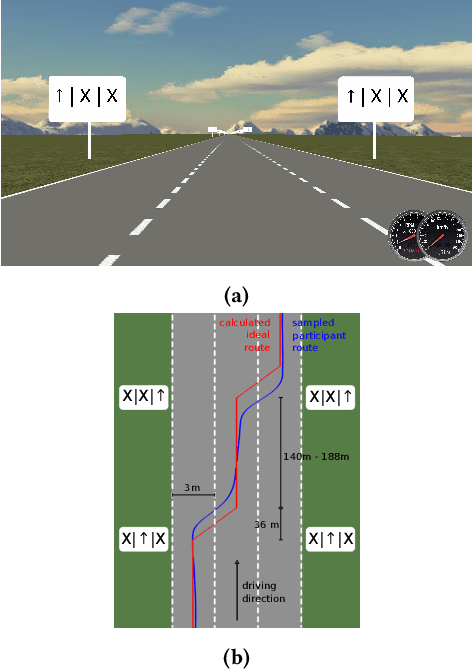

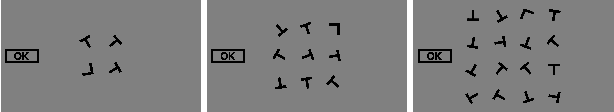

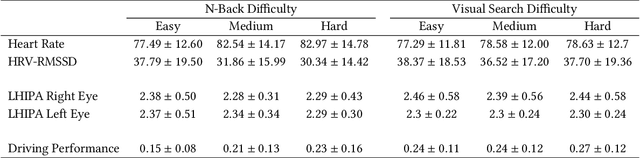

Abstract: Several researchers have focused on studying driver cognitive behavior and mental load for in-vehicle interaction while driving. Adaptive interfaces that vary with mental and perceptual load levels could help in reducing accidents and enhancing the driver experience. In this paper, we analyze the effects of mental workload and perceptual load on psychophysiological dimensions and provide a machine learning-based framework for mental and perceptual load estimation in a dual-task scenario for in-vehicle interaction (https://github.com/amrgomaaelhady/MWL-PL-estimator). We use off-the-shelf non-intrusive sensors that can be easily integrated into the vehicle's system. Our statistical analysis shows that while mental workload influences some psychophysiological dimensions, perceptual load shows little effect. Furthermore, we classify the mental and perceptual load levels through the fusion of these measurements, moving towards a real-time adaptive in-vehicle interface that is personalized to user behavior and driving conditions. We report up to 89% mental workload classification accuracy and provide a real-time, minimally intrusive solution.

Studying Person-Specific Pointing and Gaze Behavior for Multimodal Referencing of Outside Objects from a Moving Vehicle

Sep 23, 2020

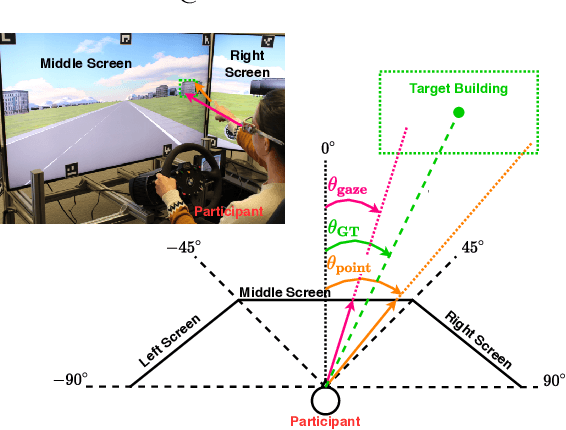

Abstract: Hand pointing and eye gaze have been extensively investigated in automotive applications for object selection and referencing. Despite significant advances, existing outside-the-vehicle referencing methods consider these modalities separately. Moreover, existing multimodal referencing methods focus on a static situation, whereas the situation in a moving vehicle is highly dynamic and subject to safety-critical constraints. In this paper, we investigate the specific characteristics of each modality and the interaction between them when used in the task of referencing outside objects (e.g. buildings) from the vehicle. We furthermore explore person-specific differences in this interaction by analyzing individuals' performance for pointing and gaze patterns, along with their effect on the driving task. Our statistical analysis shows significant differences in individual behaviour based on the object's location (i.e. driver's right side vs. left side), the object's surroundings, driving mode (i.e. autonomous vs. normal driving) as well as pointing and gaze duration, laying the foundation for a user-adaptive approach.
