Alexander Prutsch

The RoboSense Challenge: Sense Anything, Navigate Anywhere, Adapt Across Platforms

Jan 08, 2026

Abstract: Autonomous systems are increasingly deployed in open and dynamic environments (from city streets to aerial and indoor spaces), where perception models must remain reliable under sensor noise, environmental variation, and platform shifts. However, even state-of-the-art methods often degrade under unseen conditions, highlighting the need for robust and generalizable robot sensing. The RoboSense 2025 Challenge is designed to advance robustness and adaptability in robot perception across diverse sensing scenarios. It unifies five complementary research tracks spanning language-grounded decision making, socially compliant navigation, sensor configuration generalization, cross-view and cross-modal correspondence, and cross-platform 3D perception. Together, these tasks form a comprehensive benchmark for evaluating real-world sensing reliability under domain shifts, sensor failures, and platform discrepancies. RoboSense 2025 provides standardized datasets, baseline models, and unified evaluation protocols, enabling large-scale and reproducible comparison of robust perception methods. The challenge attracted 143 teams from 85 institutions across 16 countries, reflecting broad community engagement. By consolidating insights from 23 winning solutions, this report highlights emerging methodological trends, shared design principles, and open challenges across all tracks, marking a step toward building robots that can sense reliably, act robustly, and adapt across platforms in real-world environments.

Efficient Motion Prediction: A Lightweight & Accurate Trajectory Prediction Model With Fast Training and Inference Speed

Sep 25, 2024

Abstract: For efficient and safe autonomous driving, autonomous vehicles must be able to predict the motion of other traffic agents. Although highly accurate, current motion prediction models often demand substantial training resources and are difficult to deploy on embedded hardware. We propose a new efficient motion prediction model that achieves highly competitive benchmark results while training for only a few hours on a single GPU. Thanks to its lightweight architecture and its focus on reducing the required training resources, the model can easily be applied to custom datasets. Furthermore, its low inference latency makes it particularly suitable for deployment in autonomous applications with limited computing resources.

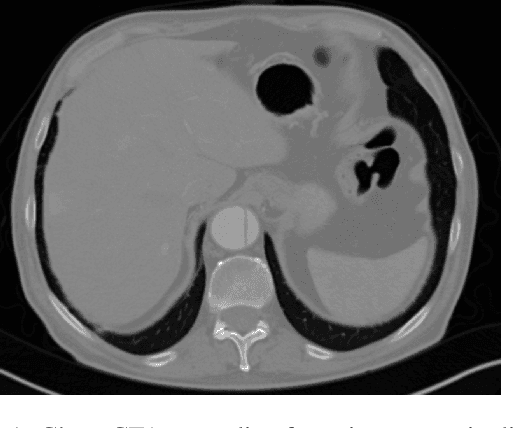

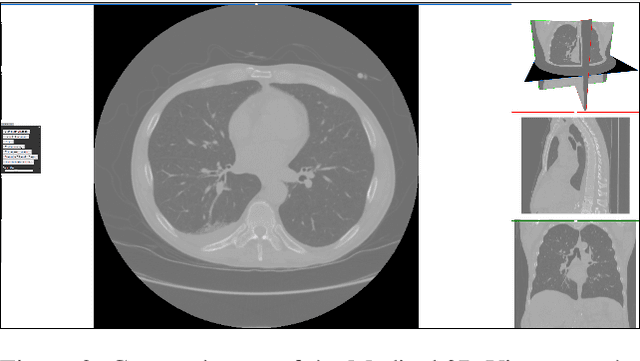

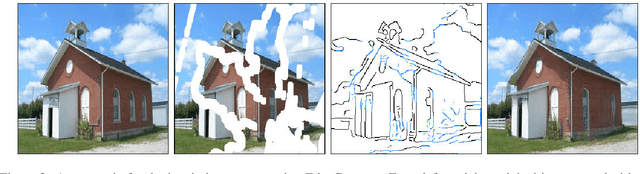

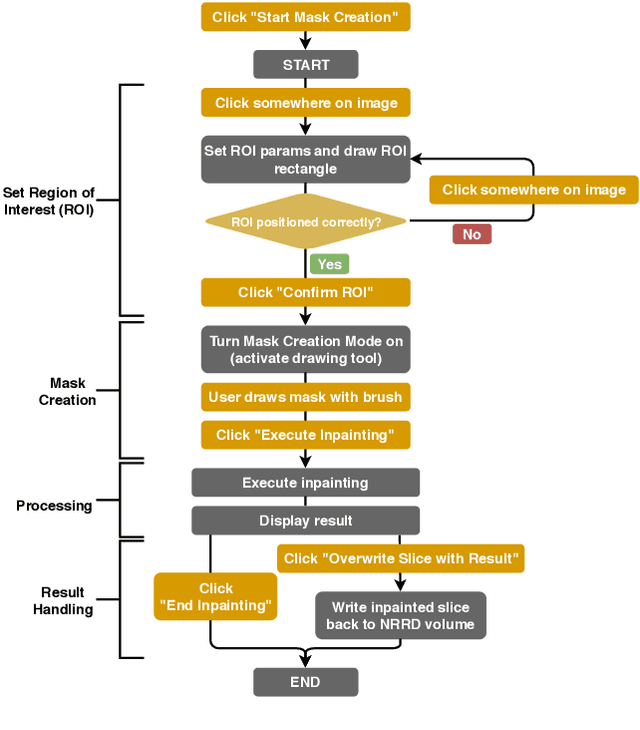

Design and Development of a Web-based Tool for Inpainting of Dissected Aortae in Angiography Images

May 06, 2020

Abstract: Medical imaging is an important tool for diagnosing and evaluating aortic dissection (AD), a serious condition of the aorta that can lead to life-threatening aortic rupture. After diagnosis, AD patients need lifelong monitoring of aortic enlargement and disease progression. Because medical studies lack 'healthy-dissected' image pairs, inpainting techniques offer an alternative way to generate them through a virtual regression from dissected to healthy aortae, which in turn provides an indirect way to study the origin of the disease. The proposed inpainting tool combines a neural network trained to inpaint aortic dissections with an easy-to-use user interface. To this end, the tool has been integrated into the 3D medical image viewer of StudierFenster (www.studierfenster.at). Designing the tool as a web application simplifies use of the neural network and shortens the initial learning curve.

* 9 figures, 14 references
