Alexander Potapov

PT-MMD: A Novel Statistical Framework for the Evaluation of Generative Systems

Oct 28, 2019

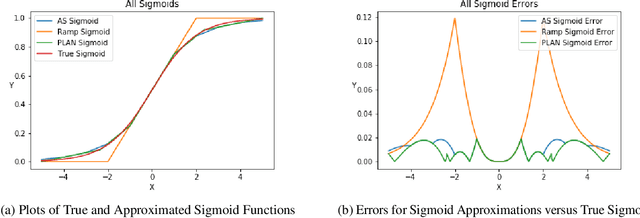

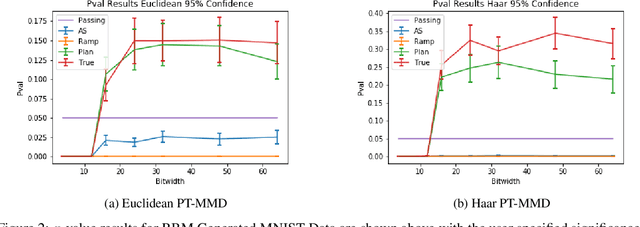

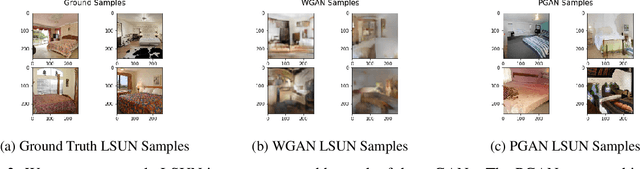

Abstract: Stochastic-sampling-based Generative Neural Networks, such as Restricted Boltzmann Machines and Generative Adversarial Networks, are now used for applications such as denoising, image occlusion removal, pattern completion, and motion synthesis. When these models are used for such inference tasks, it is critical to have metrics that support model selection and/or the maintenance of the required generative performance under pre-specified implementation constraints. In this paper, we propose a new metric for evaluating generative model performance based on $p$-values derived from the combined use of Maximum Mean Discrepancy (MMD) and permutation-based (PT-based) resampling, which we refer to as PT-MMD. We demonstrate the effectiveness of this metric for two cases: (1) selection of bitwidth and activation function complexity to achieve minimum power-at-performance for Restricted Boltzmann Machines; (2) quantitative comparison of images generated by two types of Generative Adversarial Networks (PGAN and WGAN) to facilitate model selection that maximizes the fidelity of generated images. For these applications, our results are shown using Euclidean and Haar-based kernels for the PT-MMD two-sample hypothesis test. This demonstrates the critical role of distance functions in comparing generated images against their corresponding ground-truth counterparts in a way that reflects how human users would perceive them.
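The combination of MMD and permutation resampling described in the abstract can be illustrated with a minimal sketch. The abstract does not give the exact kernel forms or estimator, so everything here is an assumption made for illustration: a Gaussian kernel over Euclidean distance stands in for the Euclidean kernel, the biased (V-statistic) MMD estimate is used, and the names `gaussian_kernel`, `mmd_squared`, and `pt_mmd_pvalue`, along with the `bandwidth` and `n_permutations` parameters, are hypothetical.

```python
import math
import random


def gaussian_kernel(u, v, bandwidth=1.0):
    # Assumption: Gaussian kernel on squared Euclidean distance; the paper's
    # exact "Euclidean" kernel is not specified in the abstract.
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(gram, idx_x, idx_y):
    # Biased (V-statistic) estimate of squared MMD, read off a precomputed
    # Gram matrix of the pooled samples.
    def block_mean(rows, cols):
        total = sum(gram[i][j] for i in rows for j in cols)
        return total / (len(rows) * len(cols))

    return (block_mean(idx_x, idx_x)
            + block_mean(idx_y, idx_y)
            - 2.0 * block_mean(idx_x, idx_y))


def pt_mmd_pvalue(real, generated, n_permutations=200, bandwidth=1.0, seed=0):
    # Hypothetical helper: permutation-test p-value for the MMD statistic.
    rng = random.Random(seed)
    pooled = list(real) + list(generated)
    gram = [[gaussian_kernel(u, v, bandwidth) for v in pooled] for u in pooled]

    n = len(real)
    indices = list(range(len(pooled)))
    observed = mmd_squared(gram, indices[:n], indices[n:])

    # Shuffle the group labels and count how often the permuted statistic
    # is at least as large as the observed one.
    exceed = 0
    for _ in range(n_permutations):
        rng.shuffle(indices)
        if mmd_squared(gram, indices[:n], indices[n:]) >= observed:
            exceed += 1

    # Add-one correction keeps the estimated p-value strictly positive.
    return (exceed + 1) / (n_permutations + 1)
```

Under these assumptions, a small $p$-value suggests that the generated samples are statistically distinguishable from the ground-truth samples, while a large one means the test found no evidence of a difference. A different kernel, such as a Haar-based one, could be substituted by replacing `gaussian_kernel`.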
