Alexander Krentsel

Let the Barbarians In: How AI Can Accelerate Systems Performance Research

Dec 22, 2025

Abstract: Artificial Intelligence (AI) is beginning to transform the research process by automating the discovery of new solutions. This shift depends on the availability of reliable verifiers, which AI-driven approaches require to validate candidate solutions. Research focused on improving systems performance is especially well-suited to this paradigm because system performance problems naturally admit such verifiers: candidates can be implemented in real systems or simulators and evaluated against predefined workloads. We term this iterative cycle of generation, evaluation, and refinement AI-Driven Research for Systems (ADRS). Using several open-source ADRS instances (i.e., OpenEvolve, GEPA, and ShinkaEvolve), we demonstrate across ten case studies (e.g., multi-region cloud scheduling, mixture-of-experts load balancing, LLM-based SQL, transaction scheduling) that ADRS-generated solutions can match or even outperform human state-of-the-art designs. Based on these findings, we outline best practices (e.g., level of prompt specification, amount of feedback, robust evaluation) for effectively using ADRS, and we discuss future research directions and their implications. Although we do not yet have a universal recipe for applying ADRS across all of systems research, we hope our preliminary findings, together with the challenges we identify, offer meaningful guidance for future work as researcher effort shifts increasingly toward problem formulation and strategic oversight. Note: This paper is an extension of our prior work [14]. It adds extensive evaluation across multiple ADRS frameworks and provides deeper analysis and insights into best practices.

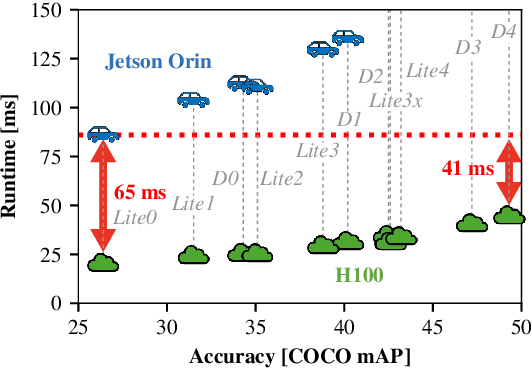

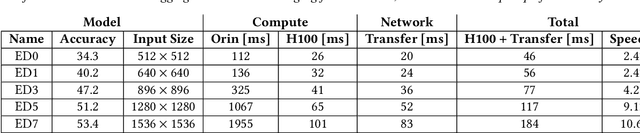

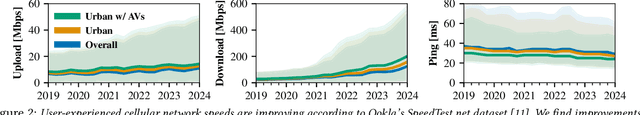

Bandwidth Allocation for Cloud-Augmented Autonomous Driving

Mar 26, 2025

Abstract: Autonomous vehicle (AV) control systems increasingly rely on ML models for tasks such as perception and planning. Current practice is to run these models on the car's local hardware due to real-time latency constraints and reliability concerns, which limits model size and thus accuracy. Prior work has observed that we could augment current systems by running larger models in the cloud, relying on faster cloud runtimes to offset the cellular network latency. However, prior work does not account for an important practical constraint: limited cellular bandwidth. We show that, for typical bandwidth levels, proposed techniques for cloud-augmented AV models take too long to transfer data, so they mostly fall back to the on-car models and yield no accuracy improvement. In this work, we show that realizing cloud-augmented AV models requires intelligent use of this scarce bandwidth, i.e., carefully allocating bandwidth across tasks and providing multiple data compression and model options. We formulate this as a resource allocation problem to maximize car utility, and present our system, which increases average model accuracy by up to 15 percentage points on driving scenarios from the Waymo Open Dataset.

Managing Bandwidth: The Key to Cloud-Assisted Autonomous Driving

Oct 21, 2024

Abstract: Prevailing wisdom asserts that one cannot rely on the cloud for critical real-time control systems like self-driving cars. We argue that we can, and must. Following the trends of increasing model sizes, improvements in hardware, and evolving mobile networks, we identify an opportunity to offload parts of time-sensitive and latency-critical compute to the cloud. Doing so requires carefully allocating bandwidth to meet strict latency SLOs, while maximizing benefit to the car.
