Alexander Htet Kyaw

Scene-Aware Urban Design: A Human-AI Recommendation Framework Using Co-Occurrence Embeddings and Vision-Language Models

Nov 09, 2025

Abstract:This paper introduces a human-in-the-loop computer vision framework that uses generative AI to propose micro-scale design interventions in public space and support more continuous, local participation. Using Grounding DINO and a curated subset of the ADE20K dataset as a proxy for the urban built environment, the system detects urban objects and builds co-occurrence embeddings that reveal common spatial configurations. From this analysis, the user receives five statistically likely complements to a chosen anchor object. A vision language model then reasons over the scene image and the selected pair to suggest a third object that completes a more complex urban tactic. The workflow keeps people in control of selection and refinement and aims to move beyond top-down master planning by grounding choices in everyday patterns and lived experience.
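
As a rough illustration of the "five statistically likely complements" step, the sketch below ranks labels by raw co-occurrence counts across scenes. It is a simplification under stated assumptions: the paper builds co-occurrence embeddings from Grounding DINO detections, whereas this example uses plain pair counts on hand-written label lists. The function names `build_cooccurrence` and `top_complements` and the sample scenes are hypothetical.

```python
from collections import Counter
from itertools import combinations


def build_cooccurrence(scenes):
    """Count how often each unordered pair of labels appears in the same scene."""
    pair_counts = Counter()
    for labels in scenes:
        # De-duplicate labels so a scene contributes at most once per pair.
        for a, b in combinations(sorted(set(labels)), 2):
            pair_counts[(a, b)] += 1
    return pair_counts


def top_complements(pair_counts, anchor, k=5):
    """Return up to k labels that co-occur most often with the anchor label."""
    scores = Counter()
    for (a, b), n in pair_counts.items():
        if a == anchor:
            scores[b] += n
        elif b == anchor:
            scores[a] += n
    # Sort by count (descending), then by label so ties are deterministic.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [label for label, _ in ranked[:k]]


# Hypothetical detections: one list of object labels per scene image.
scenes = [
    ["bench", "tree", "streetlight"],
    ["bench", "tree", "trash can"],
    ["bench", "planter"],
    ["tree", "streetlight"],
]
counts = build_cooccurrence(scenes)
print(top_complements(counts, "bench"))
```

In this toy data, "tree" ranks first for the anchor "bench" because the two appear together in two scenes. A real pipeline would replace the hand-written lists with detector output and could swap raw counts for similarity in an embedding space.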

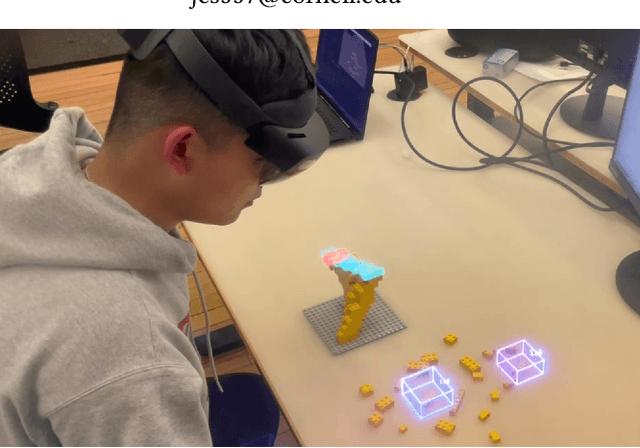

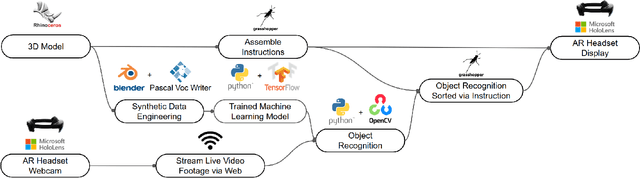

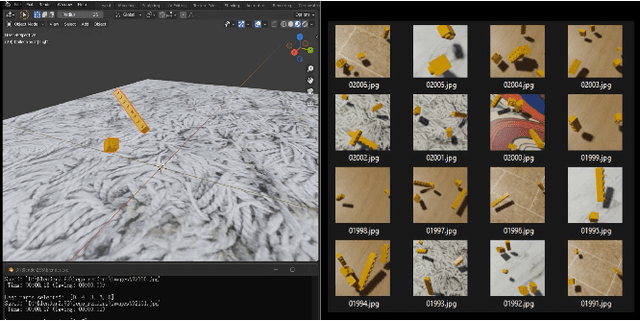

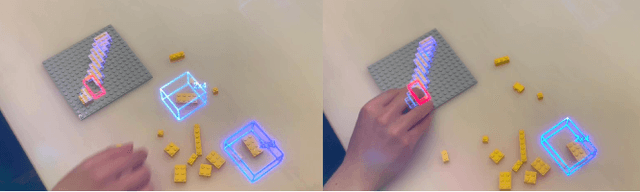

AI Assisted AR Assembly: Object Recognition and Computer Vision for Augmented Reality Assisted Assembly

Nov 07, 2025

Abstract:We present an AI-assisted Augmented Reality assembly workflow that uses deep learning-based object recognition to identify different assembly components and display step-by-step instructions. For each assembly step, the system displays a bounding box around the corresponding components in the physical space, and where the component should be placed. By connecting assembly instructions with the real-time location of relevant components, the system eliminates the need for manual searching, sorting, or labeling of different components before each assembly. To demonstrate the feasibility of using object recognition for AR-assisted assembly, we highlight a case study involving the assembly of LEGO sculptures.

Insights Informed Generative AI for Design: Incorporating Real-world Data for Text-to-Image Output

Jun 17, 2025

Abstract:Generative AI, specifically text-to-image models, has revolutionized interior architectural design by enabling the rapid translation of conceptual ideas into visual representations from simple text prompts. While generative AI can produce visually appealing images, these images often lack actionable data for designers. In this work, we propose a novel pipeline that integrates DALL-E 3 with a materials dataset to enrich AI-generated designs with sustainability metrics and material usage insights. After the model generates an interior design image, a post-processing module identifies the top ten materials present and pairs them with carbon dioxide equivalent (CO2e) values from a general materials dictionary. This approach allows designers to immediately evaluate environmental impacts and refine prompts accordingly. We evaluate the system through three user tests: (1) no mention of sustainability to the user prior to the prompting process with generative AI, (2) sustainability goals communicated to the user before prompting, and (3) sustainability goals communicated along with quantitative CO2e data included in the generative AI outputs. Our qualitative and quantitative analyses reveal that the introduction of sustainability metrics in the third test leads to more informed design decisions; however, it can also trigger decision fatigue and lower overall satisfaction. Nevertheless, the majority of participants reported incorporating sustainability principles into their workflows in the third test, underscoring the potential of integrated metrics to guide more ecologically responsible practices. Our findings showcase the importance of balancing design freedom with practical constraints, offering a clear path toward holistic, data-driven solutions in AI-assisted architectural design.
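
The post-processing step described above (rank detected materials, then attach CO2e values from a dictionary) can be sketched as follows. This is a minimal illustration, not the authors' module: the function name `annotate_materials`, the detected shares, and the table values are all hypothetical, and the CO2e numbers are placeholders rather than real emission factors.

```python
def annotate_materials(detected, co2e_table, top_n=10):
    """Pair the top_n detected materials with CO2e values from a lookup table.

    detected:   dict mapping material name -> share of the image (0..1)
    co2e_table: dict mapping material name -> CO2e value (units set by the table)
    Materials missing from the table get None instead of a guessed value.
    """
    ranked = sorted(detected.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]
    return [(name, share, co2e_table.get(name)) for name, share in ranked]


# Placeholder inputs for illustration only; these are NOT real CO2e figures.
detected = {"oak": 0.40, "concrete": 0.35, "glass": 0.15, "wool": 0.10}
co2e_table = {"oak": 1.0, "concrete": 2.0, "glass": 3.0}

for name, share, co2e in annotate_materials(detected, co2e_table):
    print(f"{name}: share={share:.2f}, CO2e={co2e}")
```

Returning `None` for materials that are absent from the dictionary keeps gaps visible to the designer instead of hiding them behind a default value.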

Making Physical Objects with Generative AI and Robotic Assembly: Considering Fabrication Constraints, Sustainability, Time, Functionality, and Accessibility

Apr 27, 2025

Abstract:3D generative AI enables rapid and accessible creation of 3D models from text or image inputs. However, translating these outputs into physical objects remains a challenge due to the constraints in the physical world. Recent studies have focused on improving the capabilities of 3D generative AI to produce fabricable outputs, with 3D printing as the main fabrication method. However, this workshop paper calls for a broader perspective by considering how fabrication methods align with the capabilities of 3D generative AI. As a case study, we present a novel system using discrete robotic assembly and 3D generative AI to make physical objects. Through this work, we identified five key aspects to consider in a physical making process based on the capabilities of 3D generative AI. 1) Fabrication Constraints: Current text-to-3D models can generate a wide range of 3D designs, requiring fabrication methods that can adapt to the variability of generative AI outputs. 2) Time: While generative AI can generate 3D models in seconds, fabricating physical objects can take hours or even days. Faster production could enable a closer iterative design loop between humans and AI in the making process. 3) Sustainability: Although text-to-3D models can generate thousands of models in the digital world, extending this capability to the real world would be resource-intensive, unsustainable and irresponsible. 4) Functionality: Unlike digital outputs from 3D generative AI models, the fabrication method plays a crucial role in the usability of physical objects. 5) Accessibility: While generative AI simplifies 3D model creation, the need for fabrication equipment can limit participation, making AI-assisted creation less inclusive. These five key aspects provide a framework for assessing how well a physical making process aligns with the capabilities of 3D generative AI and values in the world.

AR Glulam: Accurate Augmented Reality Using Multiple Fiducial Markers for Glulam Fabrication

Feb 12, 2025

Abstract:Recent advancements in Augmented Reality (AR) have demonstrated applications in architecture, design, and fabrication. Compared to conventional 2D construction drawings, AR can be used to superimpose contextual instructions, display 3D spatial information and enable on-site engagement. Despite the potential of AR, the widespread adoption of the technology in the industry is limited by its precision. Precision is important for projects requiring strict construction tolerances, design fidelity, and fabrication feedback. For example, the manufacturing of glulam beams requires tolerances of less than 2 mm. The goal of this project is to explore the industrial application of using multiple fiducial markers for high-precision AR fabrication. While the method has been validated in lab settings with a precision of 0.97, this paper focuses on fabricating glulam beams in a factory setting with an industry manufacturer, Unalam Factory.

Gesture Recognition for Feedback Based Mixed Reality and Robotic Fabrication: A Case Study of the UnLog Tower

Sep 28, 2024

Abstract:Mixed Reality (MR) platforms enable users to interact with three-dimensional holographic instructions during the assembly and fabrication of highly custom and parametric architectural constructions without the necessity of two-dimensional drawings. Previous MR fabrication projects have primarily relied on digital menus and custom buttons as the interface for user interaction with the MR environment. Despite this approach being widely adopted, it is limited in its ability to allow for direct human interaction with physical objects to modify fabrication instructions within the MR environment. This research integrates user interactions with physical objects through real-time gesture recognition as input to modify, update or generate new digital information enabling reciprocal stimuli between the physical and the virtual environment. Consequently, the digital environment is generative of the user's provided interaction with physical objects to allow seamless feedback in the fabrication process. This research investigates gesture recognition for feedback-based MR workflows for robotic fabrication, human assembly, and quality control in the construction of the UnLog Tower.

Speech to Reality: On-Demand Production using Natural Language, 3D Generative AI, and Discrete Robotic Assembly

Sep 27, 2024

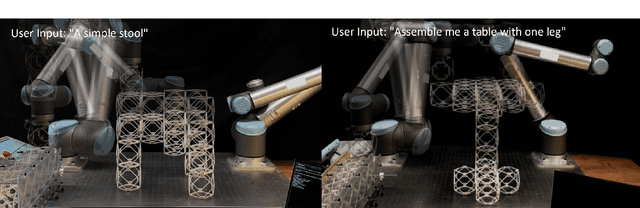

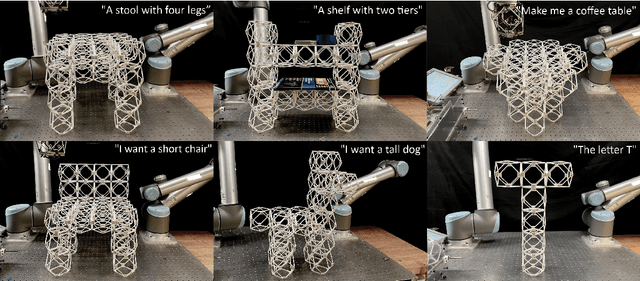

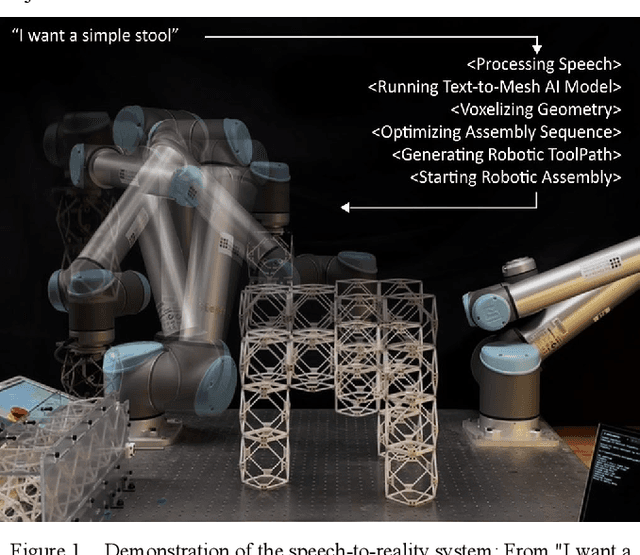

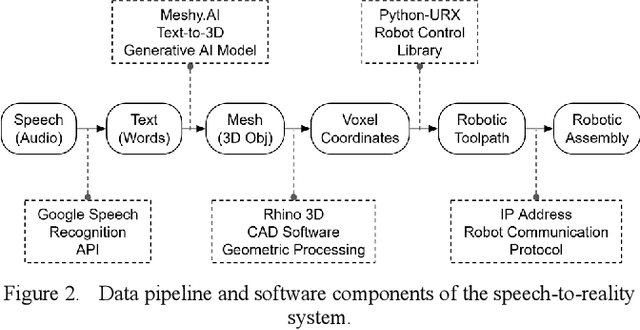

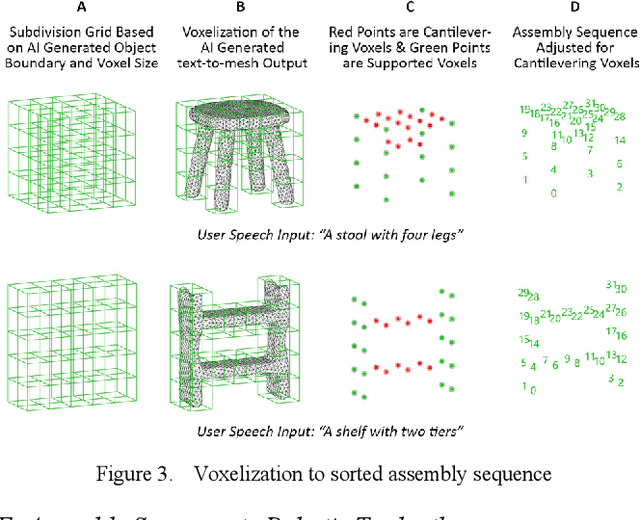

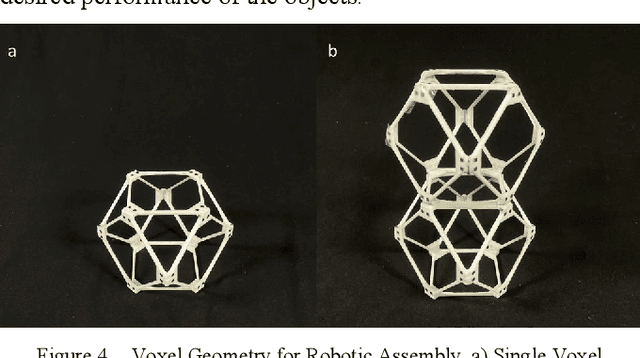

Abstract:We present a system that transforms speech into physical objects by combining 3D generative Artificial Intelligence with robotic assembly. The system leverages natural language input to make design and manufacturing more accessible, enabling individuals without expertise in 3D modeling or robotic programming to create physical objects. We propose utilizing discrete robotic assembly of lattice-based voxel components to address the challenges of using generative AI outputs in physical production, such as design variability, fabrication speed, structural integrity, and material waste. The system interprets speech to generate 3D objects, discretizes them into voxel components, computes an optimized assembly sequence, and generates a robotic toolpath. The results are demonstrated through the assembly of various objects, ranging from chairs to shelves, which are prompted via speech and realized within 5 minutes using a 6-axis robotic arm.
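
The discretize-then-sequence part of this pipeline can be illustrated with a small sketch. It assumes the generated object is available as a list of 3D sample points, maps them onto a voxel grid, and orders the voxels layer by layer from the bottom. The bottom-up sort is a naive stand-in for the optimized assembly sequence mentioned in the abstract, and the function names `voxelize` and `assembly_order` are hypothetical.

```python
def voxelize(points, voxel_size):
    """Map 3D points to the set of integer voxel indices that contain them."""
    return {tuple(int(c // voxel_size) for c in p) for p in points}


def assembly_order(voxels):
    """Order voxels layer by layer, lowest z first, then by y and x.

    Placing lower layers first means any voxel directly beneath another
    one is placed earlier. This is a simple heuristic, not an optimizer.
    """
    return sorted(voxels, key=lambda v: (v[2], v[1], v[0]))


# Hypothetical sample points from a generated shape (arbitrary units).
points = [
    (0.2, 0.3, 0.1),
    (0.8, 0.1, 0.4),  # falls in the same voxel as the first point
    (0.5, 0.5, 1.5),
    (1.2, 0.4, 0.2),
]
voxels = voxelize(points, voxel_size=1.0)
for step, voxel in enumerate(assembly_order(voxels), start=1):
    print(step, voxel)
```

A production system would also need to check reachability and structural stability at each step before generating a robot toolpath, which this sketch does not attempt.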
