Sasa Zivkovic

AI Assisted AR Assembly: Object Recognition and Computer Vision for Augmented Reality Assisted Assembly

Nov 07, 2025

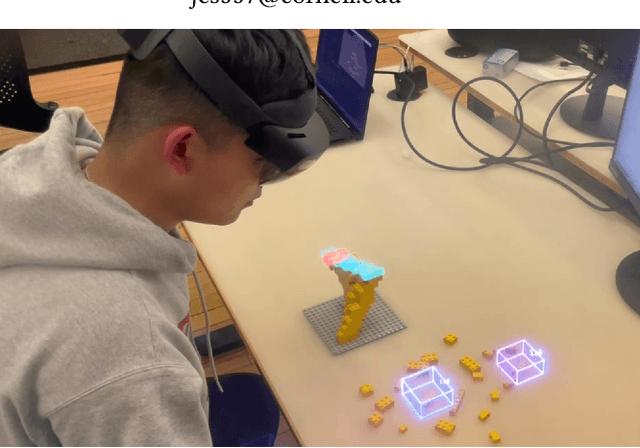

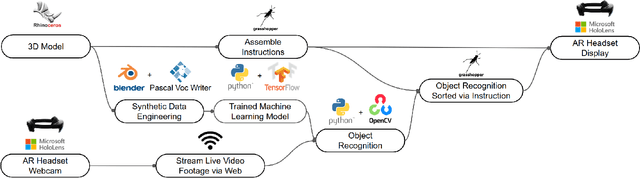

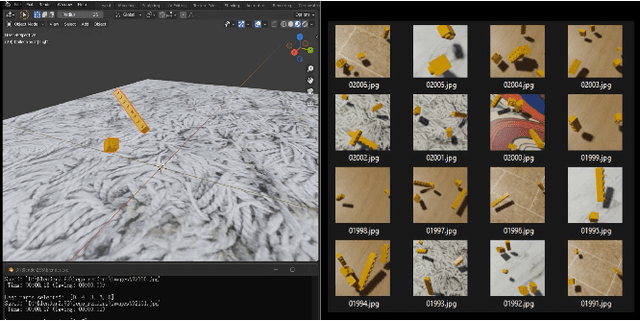

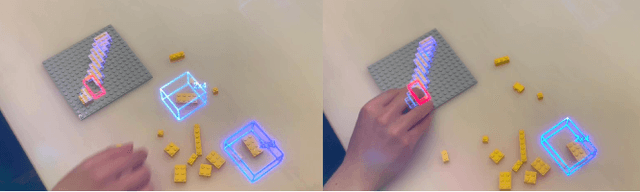

Abstract: We present an AI-assisted Augmented Reality assembly workflow that uses deep learning-based object recognition to identify different assembly components and display step-by-step instructions. For each assembly step, the system displays a bounding box around the corresponding components in the physical space and indicates where each component should be placed. By connecting assembly instructions with the real-time location of relevant components, the system eliminates the need for manual searching, sorting, or labeling of different components before each assembly. To demonstrate the feasibility of using object recognition for AR-assisted assembly, we highlight a case study involving the assembly of LEGO sculptures.

AR Glulam: Accurate Augmented Reality Using Multiple Fiducial Markers for Glulam Fabrication

Feb 12, 2025

Abstract: Recent advancements in Augmented Reality (AR) have demonstrated applications in architecture, design, and fabrication. Compared to conventional 2D construction drawings, AR can be used to superimpose contextual instructions, display 3D spatial information and enable on-site engagement. Despite the potential of AR, the widespread adoption of the technology in the industry is limited by its precision. Precision is important for projects requiring strict construction tolerances, design fidelity, and fabrication feedback. For example, the manufacturing of glulam beams requires tolerances of less than 2 mm. The goal of this project is to explore the industrial application of using multiple fiducial markers for high-precision AR fabrication. While the method has been validated in lab settings with a precision of 0.97, this paper focuses on fabricating glulam beams in a factory setting with an industry manufacturer, Unalam Factory.

Gesture Recognition for Feedback Based Mixed Reality and Robotic Fabrication: A Case Study of the UnLog Tower

Sep 28, 2024

Abstract: Mixed Reality (MR) platforms enable users to interact with three-dimensional holographic instructions during the assembly and fabrication of highly custom and parametric architectural constructions without the necessity of two-dimensional drawings. Previous MR fabrication projects have primarily relied on digital menus and custom buttons as the interface for user interaction with the MR environment. Despite this approach being widely adopted, it is limited in its ability to allow for direct human interaction with physical objects to modify fabrication instructions within the MR environment. This research integrates user interactions with physical objects through real-time gesture recognition as input to modify, update or generate new digital information enabling reciprocal stimuli between the physical and the virtual environment. Consequently, the digital environment is generative of the user's provided interaction with physical objects to allow seamless feedback in the fabrication process. This research investigates gesture recognition for feedback-based MR workflows for robotic fabrication, human assembly, and quality control in the construction of the UnLog Tower.
