Alexander Fang

Bach or Mock? A Grading Function for Chorales in the Style of J.S. Bach

Jul 17, 2020

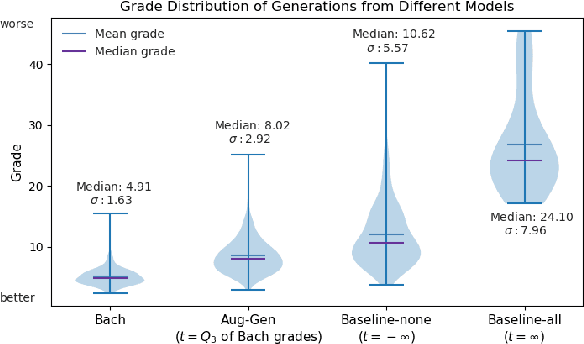

Abstract: Deep generative systems that learn probabilistic models from a corpus of existing music do not explicitly encode knowledge of a musical style, compared to traditional rule-based systems. Thus, it can be difficult to determine whether deep models generate stylistically correct output without expert evaluation, but this is expensive and time-consuming. Therefore, there is a need for automatic, interpretable, and musically-motivated evaluation measures of generated music. In this paper, we introduce a grading function that evaluates four-part chorales in the style of J.S. Bach along important musical features. We use the grading function to evaluate the output of a Transformer model, and show that the function is both interpretable and outperforms human experts at discriminating Bach chorales from model-generated ones.

Incorporating Music Knowledge in Continual Dataset Augmentation for Music Generation

Jul 17, 2020

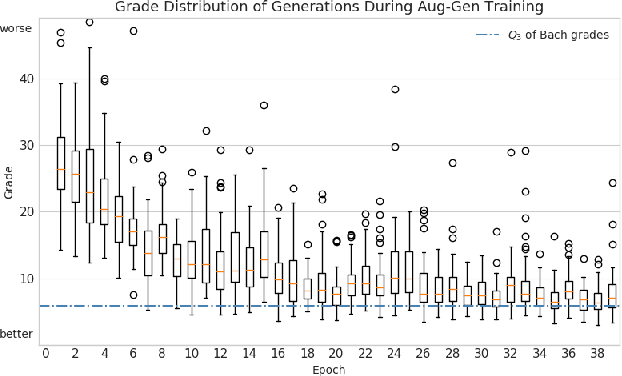

Abstract: Deep learning has rapidly become the state-of-the-art approach for music generation. However, training a deep model typically requires a large training set, which is often not available for specific musical styles. In this paper, we present augmentative generation (Aug-Gen), a method of dataset augmentation for any music generation system trained on a resource-constrained domain. The key intuition of this method is that the training data for a generative system can be augmented by examples the system produces during the course of training, provided these examples are of sufficiently high quality and variety. We apply Aug-Gen to Transformer-based chorale generation in the style of J.S. Bach, and show that this allows for longer training and results in better generative output.
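
The key intuition in the abstract above can be illustrated with a minimal sketch. This is not the authors' implementation: the function `aug_gen_train` and its parameters (`train_step`, `generate`, `grade`, `threshold`) are hypothetical names, chorales are stood in for by plain strings, a higher grade is assumed to mean higher quality, and "variety" is approximated by rejecting exact duplicates.

```python
from typing import Callable, List, Sequence


def aug_gen_train(
    dataset: List[str],
    train_step: Callable[[Sequence[str]], None],
    generate: Callable[[], str],
    grade: Callable[[str], float],
    threshold: float,
    epochs: int,
    samples_per_epoch: int,
) -> List[str]:
    """Train while growing the dataset with the model's own good outputs.

    All callables are placeholders supplied by the caller (assumptions,
    not part of any published API).
    """
    data = list(dataset)  # copy so the caller's list is not mutated
    for _ in range(epochs):
        # One round of training on the current (possibly augmented) data.
        train_step(data)
        for _ in range(samples_per_epoch):
            candidate = generate()
            # Quality check: assumed convention is higher grade = better.
            good_enough = grade(candidate) >= threshold
            # Variety check: crude stand-in that only rejects exact duplicates.
            is_new = candidate not in data
            if good_enough and is_new:
                data.append(candidate)
    return data
```

In this sketch the dataset seen by `train_step` grows from one epoch to the next, which reflects the idea that generated examples passing the quality and variety checks become additional training data.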
