Alex Serban

Foundational Models and Federated Learning: Survey, Taxonomy, Challenges and Practical Insights

Sep 05, 2025

Abstract:Federated learning has the potential to unlock siloed data and distributed resources by enabling collaborative model training without sharing private data. As more complex foundational models gain widespread use, the need to expand training resources and integrate privately owned data grows as well. In this article, we explore the intersection of federated learning and foundational models, aiming to identify, categorize, and characterize technical methods that integrate the two paradigms. As a unified survey is currently unavailable, we present a literature survey structured around a novel taxonomy that follows the development life-cycle stages, along with a technical comparison of available methods. Additionally, we provide practical insights and guidelines for implementing and evolving these methods, with a specific focus on the healthcare domain as a case study, where the potential impact of federated learning and foundational models is considered significant. Our survey covers multiple intersecting topics, including but not limited to federated learning, self-supervised learning, fine-tuning, distillation, and transfer learning. Initially, we retrieved and reviewed a set of over 4,200 articles. This collection was narrowed to more than 250 thoroughly reviewed articles through inclusion criteria, featuring 42 unique methods. The methods were used to construct the taxonomy and enabled their comparison based on complexity, efficiency, and scalability. We present these results as a self-contained overview that not only summarizes the state of the field but also provides insights into the practical aspects of adopting, evolving, and integrating foundational models with federated learning.
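As a rough illustration of "collaborative model training without sharing private data", the sketch below shows sample-size-weighted parameter averaging in the style of federated averaging. It is not taken from the survey: the function name `federated_average` and the flat-list parameter representation are assumptions made for brevity. Only locally trained parameters and sample counts reach the server, never the raw data.

```python
from typing import List


def federated_average(client_weights: List[List[float]],
                      client_sizes: List[int]) -> List[float]:
    """Average client parameter vectors, weighted by local sample count.

    Hypothetical helper: each client trains locally and sends only its
    parameters and its number of samples to the server.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        for i in range(dim):
            # Clients with more data contribute proportionally more.
            averaged[i] += weights[i] * size / total
    return averaged


# Two clients holding 1 and 3 samples respectively.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

In a full system this averaging step would be repeated over many communication rounds, with the averaged parameters sent back to the clients for further local training.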

ConceptVAE: Self-Supervised Fine-Grained Concept Disentanglement from 2D Echocardiographies

Feb 03, 2025

Abstract:While traditional self-supervised learning methods improve performance and robustness across various medical tasks, they rely on single-vector embeddings that may not capture fine-grained concepts such as anatomical structures or organs. The ability to identify such concepts and their characteristics without supervision has the potential to improve pre-training methods, and enable novel applications such as fine-grained image retrieval and concept-based outlier detection. In this paper, we introduce ConceptVAE, a novel pre-training framework that detects and disentangles fine-grained concepts from their style characteristics in a self-supervised manner. We present a suite of loss terms and model architecture primitives designed to discretise input data into a preset number of concepts along with their local style. We validate ConceptVAE both qualitatively and quantitatively, demonstrating its ability to detect fine-grained anatomical structures such as blood pools and septum walls from 2D cardiac echocardiographies. Quantitatively, ConceptVAE outperforms traditional self-supervised methods in tasks such as region-based instance retrieval, semantic segmentation, out-of-distribution detection, and object detection. Additionally, we explore the generation of in-distribution synthetic data that maintains the same concepts as the training data but with distinct styles, highlighting its potential for more calibrated data generation. Overall, our study introduces and validates a promising new pre-training technique based on concept-style disentanglement, opening multiple avenues for developing models for medical image analysis that are more interpretable and explainable than black-box approaches.

Generative 3D Cardiac Shape Modelling for In-Silico Trials

Sep 24, 2024

Abstract:We propose a deep learning method to model and generate synthetic aortic shapes by representing each shape as the zero-level set of a neural signed distance field, conditioned on a family of trainable embedding vectors that encode the geometric features of each shape. The network is trained on a dataset of aortic root meshes reconstructed from CT images by making the neural field vanish on sampled surface points and enforcing its spatial gradient to have unit norm. Empirical results show that our model can represent aortic shapes with high fidelity. Moreover, by sampling from the learned embedding vectors, we can generate novel shapes that resemble real patient anatomies, which can be used for in-silico trials.
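The two training constraints named in the abstract (the field vanishes on surface points, and its spatial gradient has unit norm) can be sketched as a loss function. The code below is a minimal illustration, not the authors' implementation: it uses an analytic sphere in place of a neural network, omits the per-shape embedding vectors, approximates the gradient by finite differences, and the names `sdf_loss` and `eikonal_weight` are assumptions.

```python
import math
from typing import Callable, Sequence

Point = Sequence[float]
Field = Callable[[Point], float]


def sphere_sdf(p: Point, radius: float = 1.0) -> float:
    """Exact signed distance to a sphere; stands in for the neural field."""
    return math.sqrt(sum(c * c for c in p)) - radius


def gradient_norm(field: Field, p: Point, h: float = 1e-5) -> float:
    """Norm of the spatial gradient, estimated by central differences."""
    grad_sq = 0.0
    for i in range(len(p)):
        plus, minus = list(p), list(p)
        plus[i] += h
        minus[i] -= h
        d = (field(plus) - field(minus)) / (2 * h)
        grad_sq += d * d
    return math.sqrt(grad_sq)


def sdf_loss(field: Field, surface_points: Sequence[Point],
             space_points: Sequence[Point],
             eikonal_weight: float = 0.1) -> float:
    """Surface term (field is zero on the surface) plus unit-gradient term."""
    surface_term = sum(abs(field(p)) for p in surface_points) / len(surface_points)
    eikonal_term = sum((gradient_norm(field, p) - 1.0) ** 2
                       for p in space_points) / len(space_points)
    return surface_term + eikonal_weight * eikonal_term


surface = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
space = [(0.5, 0.2, 0.1), (2.0, 1.0, 0.0)]
loss_true_sdf = sdf_loss(sphere_sdf, surface, space)
# A field scaled by 2 still vanishes on the surface but violates the
# unit-gradient constraint, so only the second term penalises it.
loss_scaled = sdf_loss(lambda p: 2.0 * sphere_sdf(p), surface, space)
```

A true signed distance function drives both terms to (numerically) zero, which is why the unit-gradient term is needed: the surface term alone cannot distinguish a distance field from any other function that happens to vanish on the surface.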

Deep Repulsive Prototypes for Adversarial Robustness

May 26, 2021

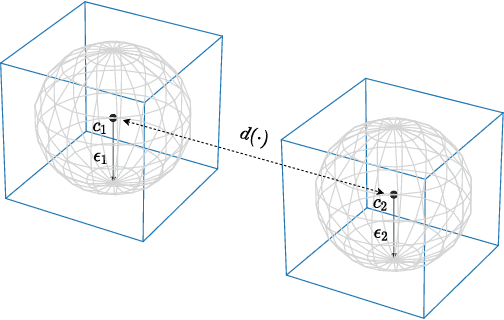

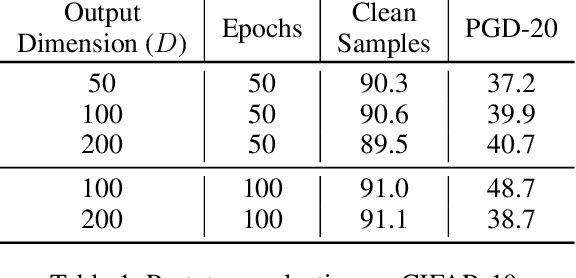

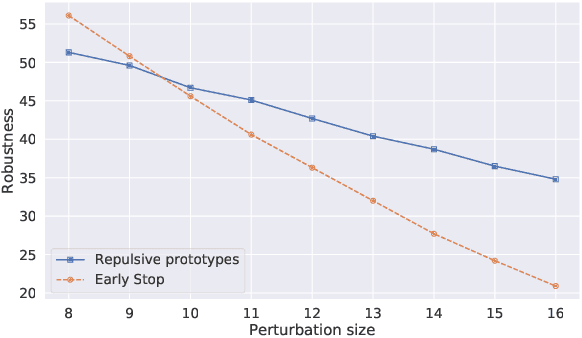

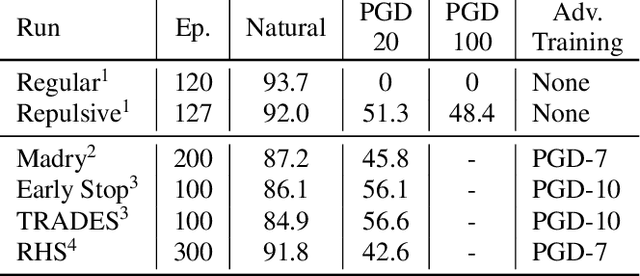

Abstract:While many defences against adversarial examples have been proposed, finding robust machine learning models is still an open problem. The most compelling defence to date is adversarial training, which consists of complementing the training data set with adversarial examples. Yet adversarial training severely impacts training time and depends on finding representative adversarial samples. In this paper we propose to train models on output spaces with large class separation in order to gain robustness without adversarial training. We introduce a method to partition the output space into class prototypes with large separation and train models to preserve it. Experimental results show that models trained with these prototypes -- which we call deep repulsive prototypes -- gain robustness competitive with adversarial training, while also preserving more accuracy on natural samples. Moreover, the models are more resilient to large perturbation sizes. For example, we obtained over 50% robustness for CIFAR-10, with 92% accuracy on natural samples and over 20% robustness for CIFAR-100, with 71% accuracy on natural samples without adversarial training. For both data sets, the models preserved robustness against large perturbations better than adversarially trained models.
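To make the idea of "class prototypes with large separation" concrete, the sketch below spreads a set of unit-norm prototype vectors apart with a simple pairwise repulsion rule and classifies an output by its nearest prototype. This is an assumed, simplified stand-in and not the procedure from the paper; the names `repulse` and `nearest_prototype`, the inverse-distance push, and the step size are all illustrative choices.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Project a vector onto the unit sphere."""
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def min_pairwise_distance(prototypes: List[Vector]) -> float:
    """Smallest Euclidean distance between any two prototypes."""
    return min(math.dist(p, q)
               for i, p in enumerate(prototypes)
               for q in prototypes[i + 1:])


def repulse(prototypes: List[Vector], steps: int = 200,
            lr: float = 0.05) -> List[Vector]:
    """Push every prototype away from the others, then renormalise."""
    protos = [normalize(p) for p in prototypes]
    for _ in range(steps):
        updated = []
        for i, p in enumerate(protos):
            push = [0.0] * len(p)
            for j, q in enumerate(protos):
                if i == j:
                    continue
                diff = [a - b for a, b in zip(p, q)]
                d2 = sum(c * c for c in diff) + 1e-9
                # Closer neighbours push harder (inverse-distance weighting).
                for k in range(len(p)):
                    push[k] += diff[k] / d2
            updated.append(normalize([a + lr * b for a, b in zip(p, push)]))
        protos = updated
    return protos


def nearest_prototype(output: Vector, prototypes: List[Vector]) -> int:
    """Predicted class is the index of the closest prototype."""
    return min(range(len(prototypes)),
               key=lambda k: math.dist(output, prototypes[k]))


start = [normalize([1.0, 0.0]), normalize([0.9, 0.1])]
spread = repulse(start)
```

A model trained against such targets would be asked to map each input close to the prototype of its class, so a perturbation has to move the output a larger distance before the nearest prototype changes.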

Adversarial Examples on Object Recognition: A Comprehensive Survey

Sep 03, 2020

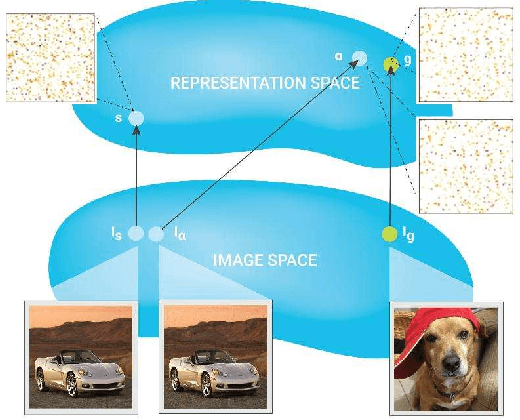

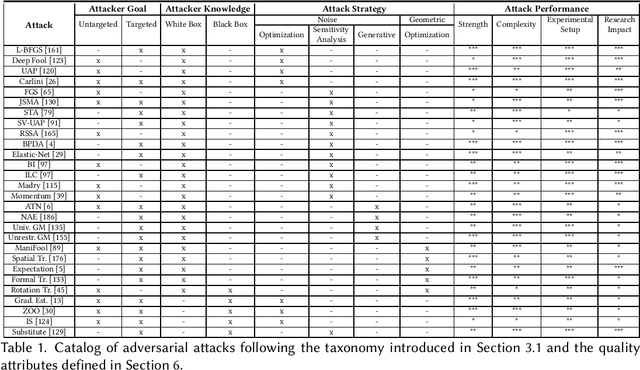

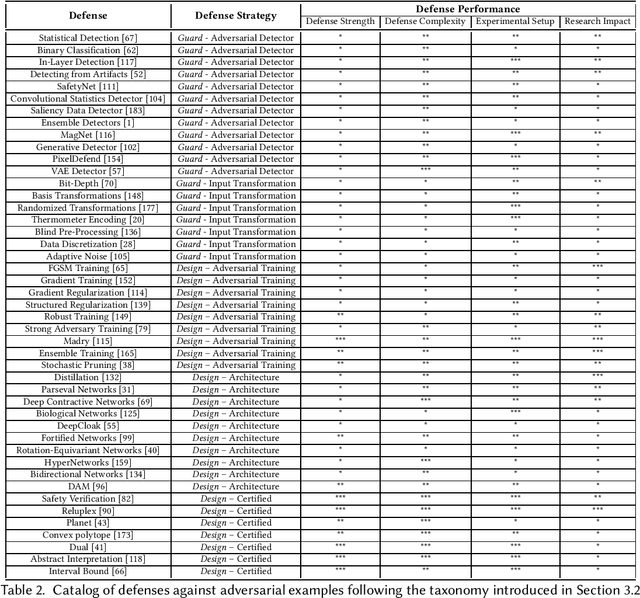

Abstract:Deep neural networks are at the forefront of machine learning research. However, despite achieving impressive performance on complex tasks, they can be very sensitive: Small perturbations of inputs can be sufficient to induce incorrect behavior. Such perturbations, called adversarial examples, are intentionally designed to test the network's sensitivity to distribution drifts. Given their surprisingly small size, a wide body of literature conjectures on their existence and how this phenomenon can be mitigated. In this article we discuss the impact of adversarial examples on security, safety, and robustness of neural networks. We start by introducing the hypotheses behind their existence, the methods used to construct or protect against them, and the capacity to transfer adversarial examples between different machine learning models. Altogether, the goal is to provide a comprehensive and self-contained survey of this growing field of research.
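A small worked example may help show how a small input perturbation can induce incorrect behavior. The sketch below applies the fast gradient sign method (FGSM), one well-known way to construct adversarial examples, to a toy logistic-regression classifier. The model, weights, and input values are made up for illustration and do not come from the survey.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def logit(w: List[float], b: float, x: List[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w: List[float], b: float, x: List[float]) -> int:
    """Binary decision of a toy logistic-regression classifier."""
    return 1 if logit(w, b, x) > 0 else 0


def fgsm(w: List[float], b: float, x: List[float], y: int,
         eps: float) -> List[float]:
    """Move each input coordinate by eps in the direction that raises the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (sigmoid(w.x + b) - y) * w.
    """
    p = sigmoid(logit(w, b, x))
    grad = [(p - y) * wi for wi in w]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [xi + eps * s for xi, s in zip(x, sign)]


w, b = [2.0, -1.0], 0.0           # illustrative weights
x, y = [0.1, 0.1], 1              # correctly classified as class 1
x_adv = fgsm(w, b, x, y, eps=0.1)
clean_prediction = predict(w, b, x)
adversarial_prediction = predict(w, b, x_adv)
```

Each coordinate changes by at most `eps`, yet the decision flips because every coordinate moves in the direction that most increases the loss. The effect compounds with input dimension, which is one reason image classifiers are so exposed to it.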

Learning to Learn from Mistakes: Robust Optimization for Adversarial Noise

Aug 12, 2020

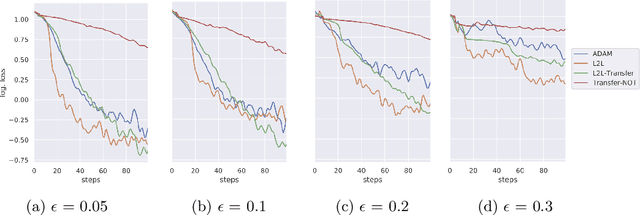

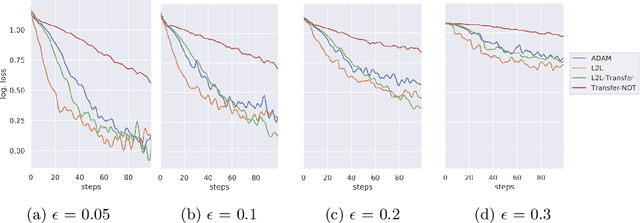

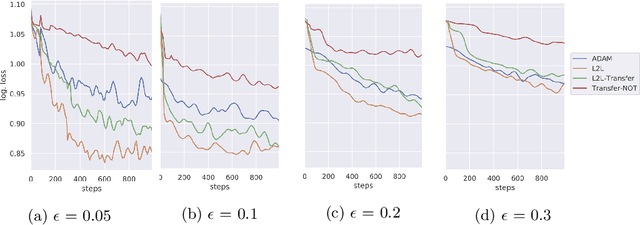

Abstract:Sensitivity to adversarial noise hinders deployment of machine learning algorithms in security-critical applications. Although many adversarial defenses have been proposed, robustness to adversarial noise remains an open problem. The most compelling defense, adversarial training, requires a substantial increase in processing time and has been shown to overfit on the training data. In this paper, we aim to overcome these limitations by training robust models in low-data regimes and transferring adversarial knowledge between different models. We train a meta-optimizer which learns to robustly optimize a model using adversarial examples and is able to transfer the knowledge learned to new models, without the need to generate new adversarial examples. Experimental results show the meta-optimizer is consistent across different architectures and data sets, suggesting it is possible to automatically patch adversarial vulnerabilities.
