Alex Clay

Noise or Nuance: An Investigation Into Useful Information and Filtering For LLM Driven AKBC

Sep 10, 2025

Abstract:RAG and fine-tuning are prevalent strategies for improving the quality of LLM outputs. However, in constrained situations, such as that of the 2025 LM-KBC challenge, such techniques are restricted. In this work we investigate three facets of the triple completion task: generation, quality assurance, and LLM response parsing. Our work finds that in this constrained setting: additional information improves generation quality, LLMs can be effective at filtering poor quality triples, and the tradeoff between flexibility and consistency with LLM response parsing is setting dependent.

Information for Conversation Generation: Proposals Utilising Knowledge Graphs

Oct 21, 2024

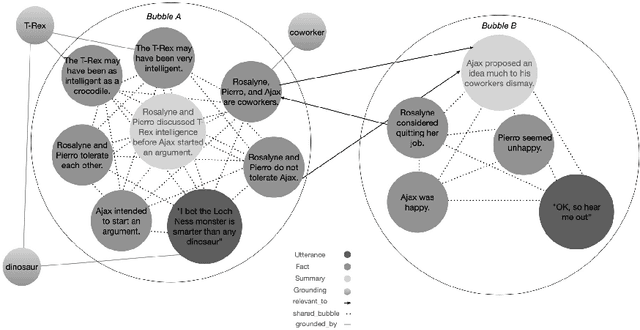

Abstract:LLMs are frequently used tools for conversational generation. Without additional information LLMs can generate lower quality responses due to lacking relevant content and hallucinations, as well as the perception of poor emotional capability, and an inability to maintain a consistent character. Knowledge graphs are commonly used forms of external knowledge and may provide solutions to these challenges. This paper introduces three proposals, utilizing knowledge graphs to enhance LLM generation. Firstly, dynamic knowledge graph embeddings and recommendation could allow for the integration of new information and the selection of relevant knowledge for response generation. Secondly, storing entities with emotional values as additional features may provide knowledge that is better emotionally aligned with the user input. Thirdly, integrating character information through narrative bubbles would maintain character consistency, as well as introducing a structure that would readily incorporate new information.

Cognitively Inspired Components for Social Conversational Agents

Nov 09, 2023

Abstract:Current conversational agents (CA) have seen improvement in conversational quality in recent years due to the influence of large language models (LLMs) like GPT3. However, two key categories of problem remain. Firstly there are the unique technical problems resulting from the approach taken in creating the CA, such as scope with retrieval agents and the often nonsensical answers of former generative agents. Secondly, humans perceive CAs as social actors, and as a result expect the CA to adhere to social convention. Failure on the part of the CA in this respect can lead to a poor interaction and even the perception of threat by the user. As such, this paper presents a survey highlighting a potential solution to both categories of problem through the introduction of cognitively inspired additions to the CA. Through computational facsimiles of semantic and episodic memory, emotion, working memory, and the ability to learn, it is possible to address both the technical and social problems encountered by CAs.
