Alejo J Nevado-Holgado

Information Extraction from Swedish Medical Prescriptions with Sig-Transformer Encoder

Oct 10, 2020

Abstract: Relying on large pretrained language models such as Bidirectional Encoder Representations from Transformers (BERT) for encoding and adding a simple prediction layer has led to impressive performance in many clinical natural language processing (NLP) tasks. In this work, we present a novel extension to the Transformer architecture that incorporates the signature transform with the self-attention model. This architecture is added between the embedding and prediction layers. Experiments on a new Swedish prescription dataset show the proposed architecture to be superior to baseline models in two of the three information extraction tasks. Finally, we compare two embedding approaches: applying Multilingual BERT directly to the Swedish text, and translating the Swedish text to English and then encoding it with a BERT model pretrained on clinical notes.

Learning to Detect Bipolar Disorder and Borderline Personality Disorder with Language and Speech in Non-Clinical Interviews

Aug 08, 2020

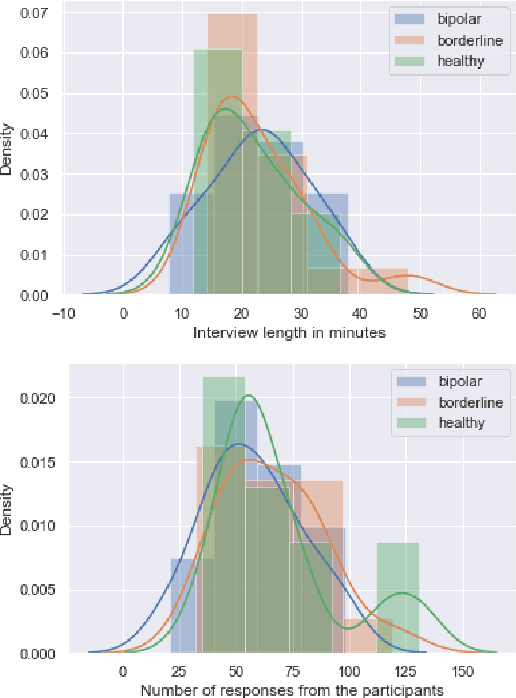

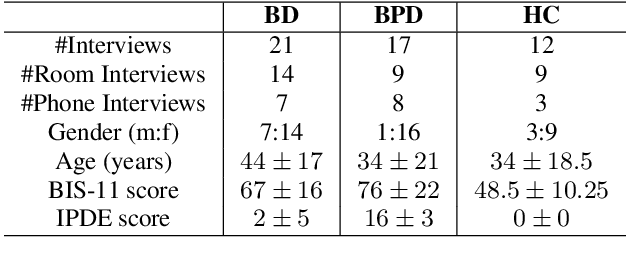

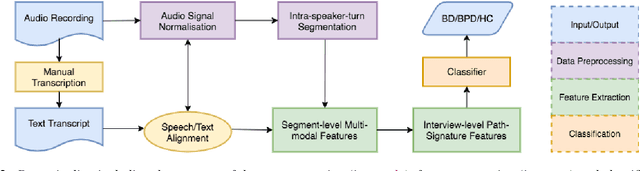

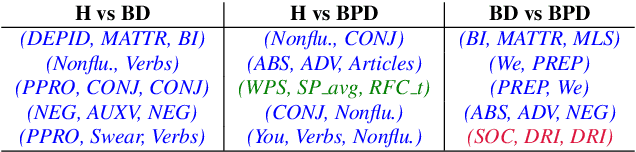

Abstract: Bipolar disorder (BD) and borderline personality disorder (BPD) are both chronic psychiatric disorders. However, their overlapping symptoms and common comorbidity make it challenging for clinicians to distinguish the two conditions on the basis of a clinical interview. In this work, we first present a new multi-modal dataset of interviews in which individuals with BD or BPD are interviewed about a non-clinical topic. We then investigate the automatic detection of the two conditions and demonstrate that a good linear classifier can be learnt using a down-selected set of features from the different aspects of the interviews, together with a novel approach to summarising these features. Finally, we find that different sets of features characterise BD and BPD, which provides insight into how the automatic screening of the two conditions differs.
