Alberto Leon Garcia

Interaction and Conflict Management in AI-assisted Operational Control Loops in 6G

Oct 22, 2021

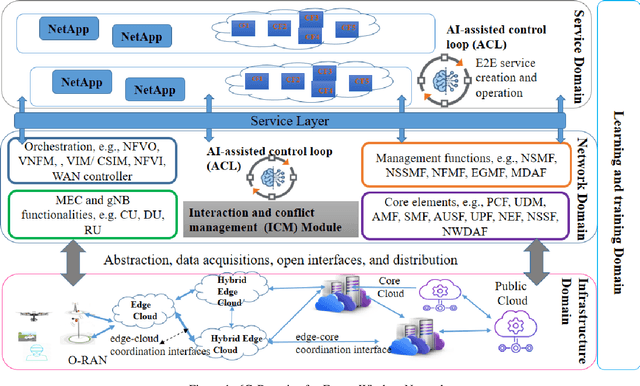

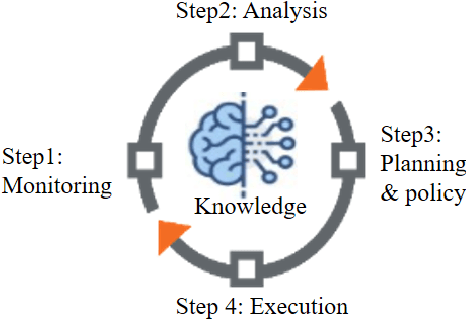

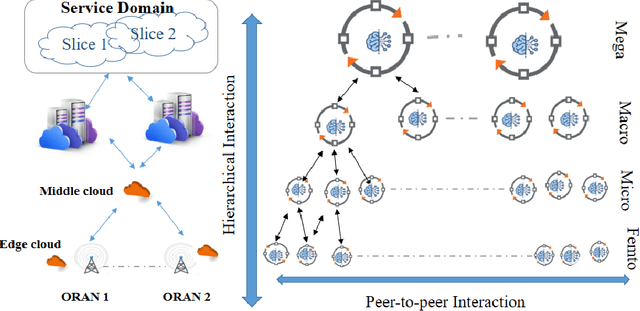

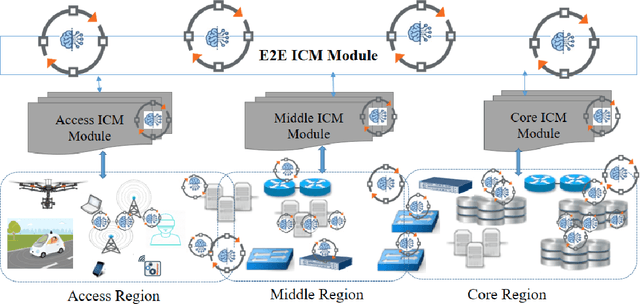

Abstract: This paper studies autonomous and AI-assisted control loops (ACLs) in the next generation of wireless networks through the lens of multi-agent environments. We study the diverse interactions among these loops and how conflicts between them can be managed. We propose "interaction and conflict management" (ICM) modules to achieve coherent and consistent interactions among these ACLs. We introduce three categories of ACLs based on their sizes, their cooperative and competitive behaviors, and their sharing of datasets and models. These categories help to introduce conflict resolution and interaction management mechanisms for ICM. Using Kubernetes, we present an implementation of ICM that removes conflicts in the scheduling and rescheduling of Pods for different ACLs in networks.

Generalized ADMM in Distributed Learning via Variational Inequality

Apr 26, 2021

Abstract: Due to the explosion in size and complexity of modern data sets and the privacy concerns of data holders, it is increasingly important to be able to solve machine learning problems in a distributed manner. The Alternating Direction Method of Multipliers (ADMM), through the concept of consensus variables, is a practical algorithm in this context, and its many variations and their performance have been studied in different application areas. In this paper, we study the effect of the users' local data sets on distributed learning with ADMM. Our aim is to use variational inequality (VI) to attain a unified view of ADMM variations. Through simulation results, we demonstrate how more general definitions of consensus parameters and the introduction of uncertain parameters in the distributed approach can lead to better results in learning processes.
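As a point of reference for the consensus idea mentioned in this abstract, the following is a minimal sketch of textbook consensus ADMM, not the generalized VI-based variant the paper proposes. It assumes a toy setting that is not from the paper: each user holds scalar samples and the shared goal is the least-squares estimate, so the consensus variable should converge to the mean of all samples. The function name `consensus_admm`, the penalty `rho`, and the data are illustrative assumptions.

```python
def consensus_admm(local_data, rho=1.0, iters=500):
    """Textbook consensus ADMM on a toy scalar least-squares problem.

    Each user i minimizes f_i(x) = 0.5 * sum_j (x - a_ij)^2 over its own
    samples a_ij, subject to all local copies x_i agreeing with a shared
    consensus variable z. (Hypothetical toy setup, not the paper's model.)
    """
    n = len(local_data)
    x = [0.0] * n   # local primal variables, one per user
    u = [0.0] * n   # scaled dual variables, one per user
    z = 0.0         # consensus variable

    for _ in range(iters):
        # Local step: closed-form minimizer of
        # f_i(x_i) + (rho / 2) * (x_i - z + u_i)^2
        x = [
            (sum(d) + rho * (z - u[i])) / (len(d) + rho)
            for i, d in enumerate(local_data)
        ]
        # Consensus step: average of the shifted local variables
        z = sum(x[i] + u[i] for i in range(n)) / n
        # Dual step: accumulate each user's disagreement with z
        u = [u[i] + x[i] - z for i in range(n)]
    return z


# Three users with unequal amounts of local data (illustrative values).
users = [[1.0, 2.0], [3.0], [4.0, 5.0, 6.0]]
estimate = consensus_admm(users)
```

In this toy problem the users never exchange raw samples; only `x[i] + u[i]` is sent to whoever computes `z`, which is the property that makes the consensus form attractive for distributed learning.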

Robust Federated Learning by Mixture of Experts

Apr 23, 2021

Abstract: We present a novel weighted average model based on the mixture of experts (MoE) concept to provide robustness in federated learning (FL) against poisoned, corrupted, or outdated local models. These threats, along with the non-IID nature of the data sets, can considerably diminish the accuracy of the FL model. Our proposed MoE-FL setup relies on trust between the users and the server, where the users share a portion of their public data sets with the server. The server applies a robust aggregation method, either by solving an optimization problem or by using the Softmax method, to highlight outlier cases and reduce their adverse effect on the FL process. Our experiments illustrate that MoE-FL outperforms the traditional aggregation approach when the rate of poisoned data from attackers is high.
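One plausible reading of the Softmax-based aggregation described above can be sketched as follows. This is an assumption-laden illustration, not the paper's algorithm: it assumes the server scores each local model by its loss on the shared public data and turns those losses into weights with a softmax over the negative loss. The names `softmax_weights`, `aggregate`, and `temperature`, as well as the numbers, are hypothetical.

```python
import math


def softmax_weights(losses, temperature=1.0):
    """Map per-model losses to weights; lower loss gives higher weight.

    Assumption: the losses were measured by the server on shared public data.
    """
    scores = [-loss / temperature for loss in losses]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(models, weights):
    """Weighted average of parameter vectors given as plain lists."""
    dim = len(models[0])
    return [sum(w * m[k] for w, m in zip(weights, models)) for k in range(dim)]


# Two plausible local models and one poisoned outlier (illustrative values).
local_models = [[1.0, 1.0], [1.2, 0.8], [10.0, -10.0]]
public_losses = [0.1, 0.2, 5.0]

weights = softmax_weights(public_losses)
robust_model = aggregate(local_models, weights)
plain_average = aggregate(local_models, [1.0 / 3.0] * 3)
```

With these values the outlier receives a weight well below one percent, so `robust_model` stays near the two plausible models while `plain_average` is pulled far toward the poisoned one.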

Representation of Federated Learning via Worst-Case Robust Optimization Theory

Dec 11, 2019

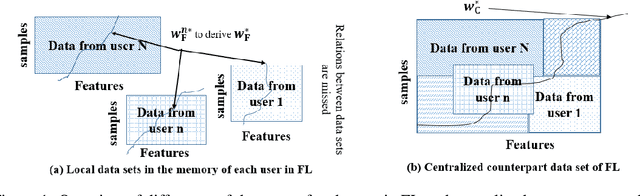

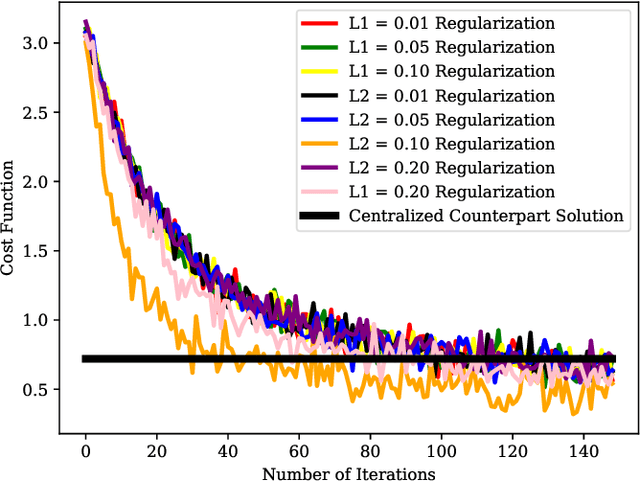

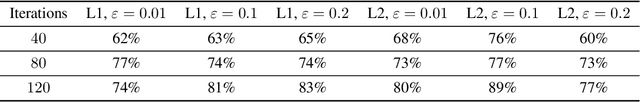

Abstract: Federated learning (FL) is a distributed learning approach in which a set of end-user devices participates in the learning process by acting on their isolated local data sets. Here, we use worst-case optimization theory to reformulate the FL problem, treating the impact of the local data sets in the training phase as an uncertain function bounded in a closed uncertainty region. This representation allows us to compare the performance of FL with its centralized counterpart, and to replace the uncertain function with protection functions, leading to a more tractable formulation. The latter supports applying a regularization factor in each user's cost function in FL to reach better performance. We evaluate our model on the MNIST data set against the protection function parameters, e.g., regularization factors.
