Albert Vilamala

Deep Convolutional Neural Networks for Interpretable Analysis of EEG Sleep Stage Scoring

Oct 02, 2017

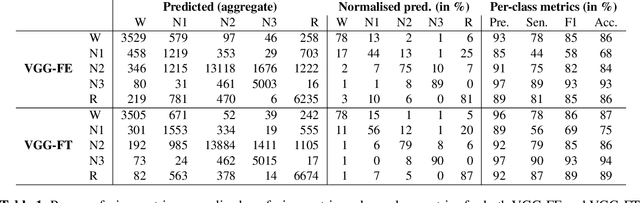

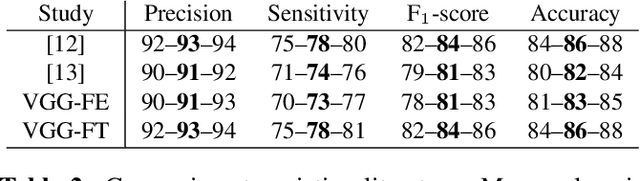

Abstract: Sleep studies are important for diagnosing sleep disorders such as insomnia, narcolepsy or sleep apnea. They rely on manual scoring of sleep stages from raw polysomnography signals, a tedious visual task that requires highly trained professionals. Consequently, research efforts to pursue automatic stage scoring based on machine learning techniques have been carried out in recent years. In this work, we resort to multitaper spectral analysis to create visually interpretable images of sleep patterns from EEG signals as inputs to a deep convolutional network trained to solve visual recognition tasks. As a working example of transfer learning, a system able to accurately classify sleep stages in new unseen patients is presented. Evaluations on a widely used, publicly available dataset compare favourably to state-of-the-art results, while providing a framework for visual interpretation of outcomes.
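The step from an EEG signal to a spectral image can be illustrated with a minimal sketch. It is not the authors' implementation: it uses sine tapers as a simple stand-in for the Slepian (DPSS) tapers usually associated with multitaper analysis, and the sampling rate, 30-second epoch, window length, number of tapers and synthetic signal are all assumptions chosen for illustration.

```python
import numpy as np

def sine_tapers(n_samples, n_tapers):
    """Orthonormal sine tapers (assumed stand-in for DPSS tapers)."""
    n = np.arange(1, n_samples + 1)
    k = np.arange(1, n_tapers + 1)[:, None]
    return np.sqrt(2.0 / (n_samples + 1)) * np.sin(np.pi * k * n / (n_samples + 1))

def multitaper_psd(x, fs, n_tapers=5):
    """Average the periodograms obtained with each taper."""
    tapers = sine_tapers(len(x), n_tapers)
    spectra = np.abs(np.fft.rfft(tapers * x, axis=1)) ** 2
    return spectra.mean(axis=0) / fs

def multitaper_spectrogram(x, fs, win_s=2.0, step_s=1.0, n_tapers=5):
    """Slide a window over x and stack one multitaper spectrum per window."""
    win, step = int(win_s * fs), int(step_s * fs)
    starts = range(0, len(x) - win + 1, step)
    cols = [multitaper_psd(x[s:s + win], fs, n_tapers) for s in starts]
    freqs = np.fft.rfftfreq(win, d=1.0 / fs)
    return freqs, np.array(cols).T  # shape: (n_freqs, n_windows)

# Hypothetical 30 s epoch sampled at 100 Hz: a 10 Hz rhythm plus noise.
fs = 100.0
t = np.arange(0, 30, 1 / fs)
rng = np.random.default_rng(0)
epoch = np.sin(2 * np.pi * 10 * t) + 0.5 * rng.standard_normal(t.size)

freqs, spec = multitaper_spectrogram(epoch, fs)
image = 10 * np.log10(spec + 1e-12)          # log-power image for a CNN input
peak_freq = freqs[spec.mean(axis=1).argmax()]  # dominant frequency in the epoch
```

Averaging over several orthogonal tapers reduces the variance of each spectral estimate at the cost of some frequency resolution, which tends to give smoother images than a single-window spectrogram.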

Adaptive Smoothing in fMRI Data Processing Neural Networks

Oct 02, 2017

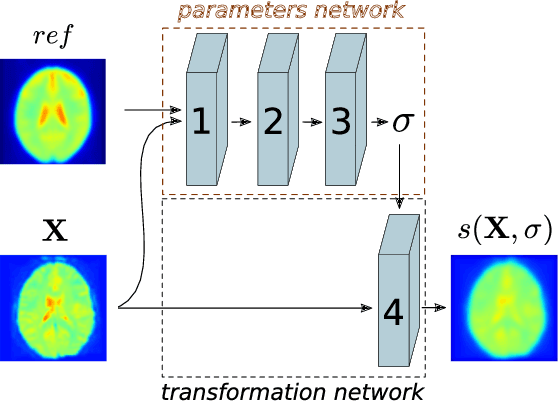

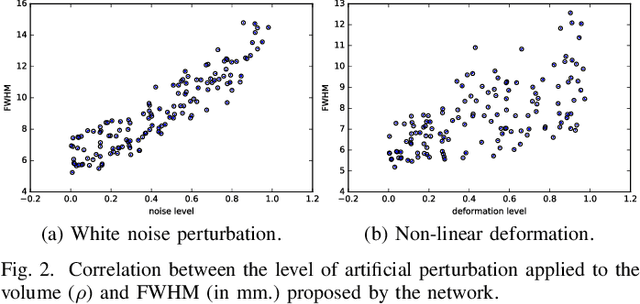

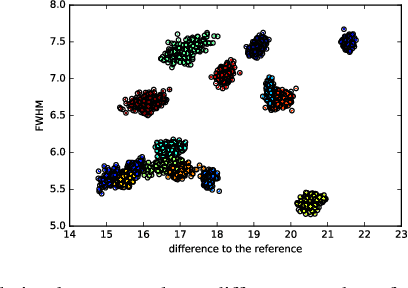

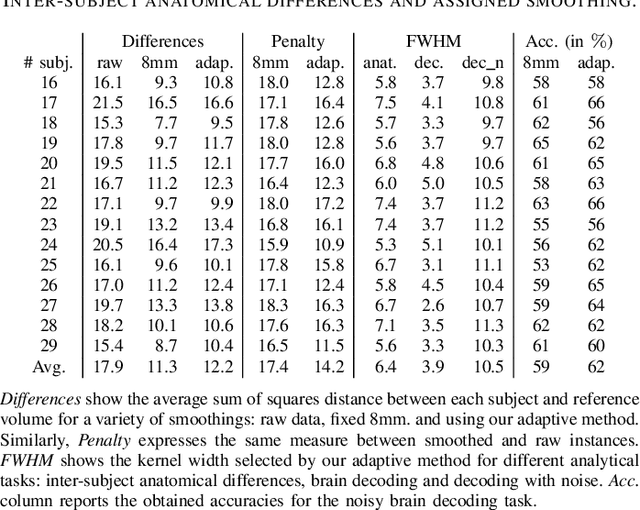

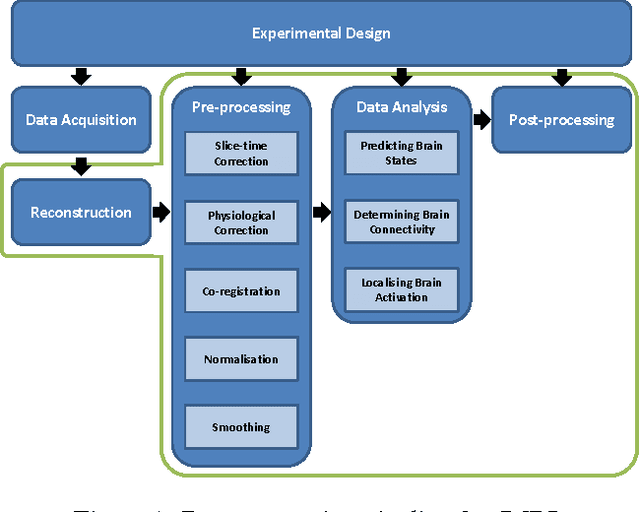

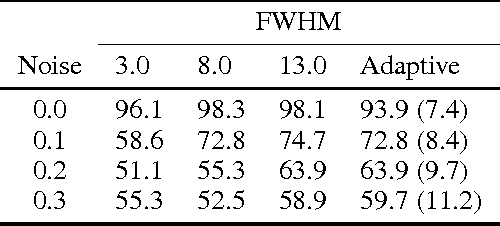

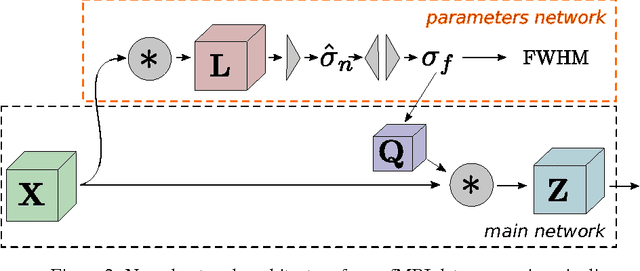

Abstract: Functional Magnetic Resonance Imaging (fMRI) relies on multi-step data processing pipelines to accurately determine brain activity; among these steps, spatial smoothing is crucial. These pipelines are commonly suboptimal because they use a local optimisation strategy that treats each step in isolation. With the advent of new tools for deep learning, recent work has proposed turning these pipelines into end-to-end learning networks. This change of paradigm offers new avenues for improvement, as it allows for global optimisation. The current work aims to benefit from this paradigm shift by defining the smoothing step as a layer in these networks, able to adaptively modulate the degree of smoothing required by each brain volume to better accomplish a given data analysis task. Its viability is evaluated on real fMRI data in which subjects alternated between left and right finger tapping tasks.
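A rough sketch of what per-volume smoothing might look like follows. The abstract does not say how the degree of smoothing is parameterised, so the isotropic Gaussian kernel, its width `sigma`, the kernel radius and the function names here are all assumptions; in an end-to-end network the `sigma` values would presumably be learned or predicted rather than set by hand as in this example.

```python
import numpy as np

def gaussian_kernel(sigma, radius=4):
    """1-D Gaussian kernel, normalised to sum to one."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def smooth_volume(volume, sigma, radius=4):
    """Separable Gaussian smoothing along every axis (zero-padded edges)."""
    kernel = gaussian_kernel(sigma, radius)
    out = volume.astype(float)
    for axis in range(volume.ndim):
        out = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="same"), axis, out
        )
    return out

def adaptive_smoothing_layer(volumes, sigmas):
    """Apply a different smoothing width to each volume in the batch."""
    return np.stack([smooth_volume(v, s) for v, s in zip(volumes, sigmas)])

# Hypothetical batch of two 16x16x16 noise volumes with hand-picked widths.
rng = np.random.default_rng(0)
volumes = rng.standard_normal((2, 16, 16, 16))
sigmas = np.array([0.5, 2.0])  # light vs. heavy smoothing (assumed values)
smoothed = adaptive_smoothing_layer(volumes, sigmas)
```

Because the kernel is a smooth function of `sigma`, the output varies continuously with it, which is what would make such a width trainable by gradient descent in a differentiable framework.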

Towards end-to-end optimisation of functional image analysis pipelines

Oct 13, 2016

Abstract: The study of neurocognitive tasks requiring accurate localisation of activity often relies on functional Magnetic Resonance Imaging, a widely adopted technique that makes use of a pipeline of data processing modules, each involving a variety of parameters. These parameters are frequently set according to the local goal of each specific module, without accounting for the rest of the pipeline. Given the recent success of neural network research in many different domains, we propose to convert the whole data pipeline into a deep neural network, where the parameters involved are jointly optimised by the network to best serve a common global goal. As a proof of concept, we develop a module able to adaptively apply the most suitable spatial smoothing to every brain volume for each specific neuroimaging task, and we validate its results in a standard brain decoding experiment.
