Alain Bensoussan

Jindal School of Management, The University of Texas at Dallas

Control and optimization for Neural Partial Differential Equations in Supervised Learning

Jun 25, 2025

Abstract:Although there is a substantial body of literature on control and optimization problems for parabolic and hyperbolic systems, the specific problem of controlling and optimizing the coefficients of the associated operators within such systems has not yet been thoroughly explored. In this work, we aim to initiate a line of research in control theory focused on optimizing and controlling the coefficients of these operators, a problem that naturally arises in the context of neural networks and supervised learning. In supervised learning, the primary objective is to transport initial data toward target data through the layers of a neural network. We propose a novel perspective: neural networks can be interpreted as partial differential equations (PDEs). From this viewpoint, the control problem traditionally studied in the context of ordinary differential equations (ODEs) is reformulated as a control problem for PDEs, specifically targeting the optimization and control of coefficients in parabolic and hyperbolic operators. To the best of our knowledge, this specific problem has not yet been systematically addressed in the control theory of PDEs. To this end, we propose a dual system formulation for the control and optimization problem associated with parabolic PDEs, laying the groundwork for the development of efficient numerical schemes in future research. We also provide a theoretical proof showing that the control and optimization problem for parabolic PDEs admits minimizers. Finally, we investigate the control problem associated with hyperbolic PDEs and prove the existence of solutions for a corresponding approximated control problem.

Asymptotically efficient one-step stochastic gradient descent

Jun 09, 2023

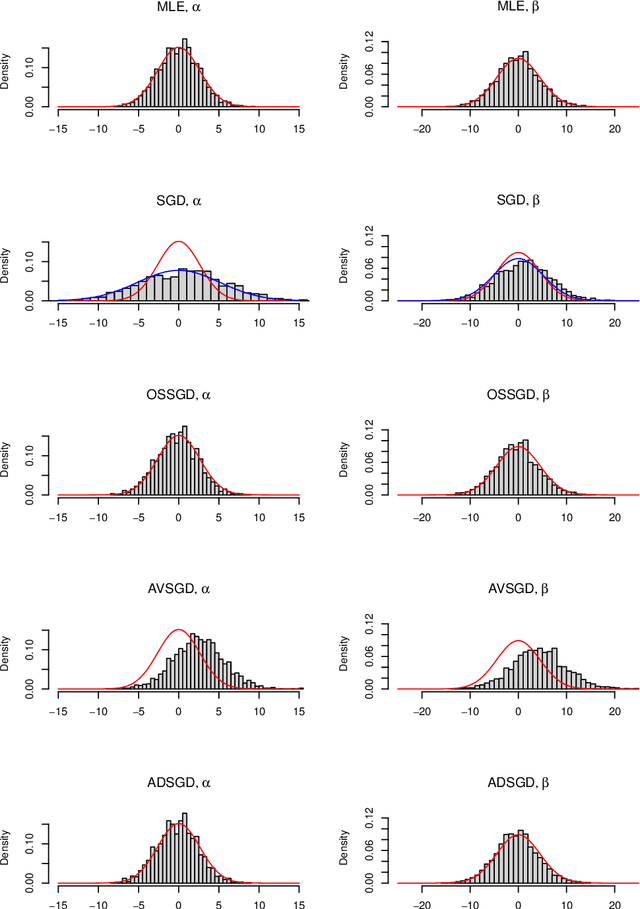

Abstract:A generic, fast and asymptotically efficient method for parametric estimation is described. It is based on stochastic gradient descent on the log-likelihood function, corrected by a single step of the Fisher scoring algorithm. We show theoretically and by simulations in the i.i.d. setting that it is an interesting alternative to the usual stochastic gradient descent with averaging or to adaptive stochastic gradient descent.
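
The idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the model (i.i.d. exponential data with unknown rate), the step-size schedule, the clamping bounds, and all function names below are assumptions chosen for illustration. A single SGD pass on the log-likelihood gives a rough estimate, which is then corrected by one Fisher scoring step, that is, by adding the average score divided by the Fisher information.

```python
import random


def score(theta, x):
    """Derivative of the per-sample log-likelihood log(theta) - theta * x."""
    return 1.0 / theta - x


def fisher_information(theta):
    """Fisher information of one exponential observation with rate theta."""
    return 1.0 / theta ** 2


def sgd_estimate(xs, theta0=1.0, c=1.0, alpha=0.6):
    """One pass of stochastic gradient ascent on the log-likelihood.

    The step size c / k**alpha and the clamping interval are illustrative
    assumptions, not values taken from the paper.
    """
    theta = theta0
    for k, x in enumerate(xs, start=1):
        theta += c / k ** alpha * score(theta, x)
        # Keep the iterate in a range where the score is well defined.
        theta = min(max(theta, 1e-2), 1e2)
    return theta


def one_step_correction(theta, xs):
    """Single Fisher scoring step started from the preliminary estimate."""
    mean_score = sum(score(theta, x) for x in xs) / len(xs)
    return theta + mean_score / fisher_information(theta)


if __name__ == "__main__":
    rng = random.Random(0)
    true_rate = 2.0  # hypothetical ground truth for the simulation
    xs = [rng.expovariate(true_rate) for _ in range(5000)]

    theta_sgd = sgd_estimate(xs)
    theta_one_step = one_step_correction(theta_sgd, xs)
    theta_mle = len(xs) / sum(xs)  # closed-form MLE, for comparison only

    print("SGD estimate:      ", theta_sgd)
    print("one-step estimate: ", theta_one_step)
    print("maximum likelihood:", theta_mle)
```

In this toy model the maximum likelihood estimator has a closed form, so the correction is not needed in practice; the model is used only because it makes the score and the Fisher information easy to write down and the result easy to check.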

Machine Learning and Control Theory

Jun 10, 2020

Abstract:We survey in this article the connections between Machine Learning and Control Theory. Control Theory provides useful concepts and tools for Machine Learning. Conversely, Machine Learning can be used to solve large control problems. In the first part of the paper, we develop the connections between reinforcement learning and Markov Decision Processes, which are discrete-time control problems. In the second part, we review the concept of supervised learning and its relation to static optimization. Deep learning, which extends supervised learning, can be viewed as a control problem. In the third part, we present the links between stochastic gradient descent and mean-field theory. Conversely, in the fourth and fifth parts, we review machine learning approaches to stochastic control problems, and focus on the deterministic case to explain the numerical algorithms more easily.