Akshay Kumar Sureddy

Safe to Serve: Aligning Instruction-Tuned Models for Safety and Helpfulness

Nov 26, 2024

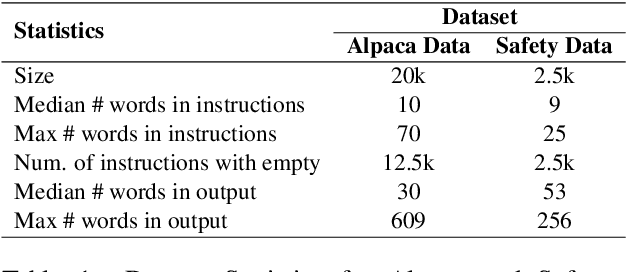

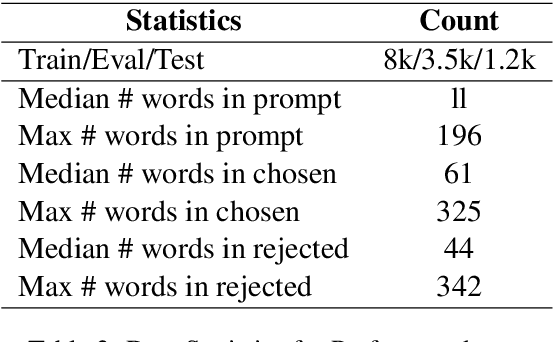

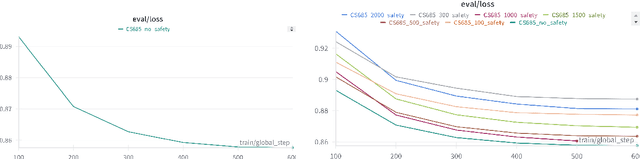

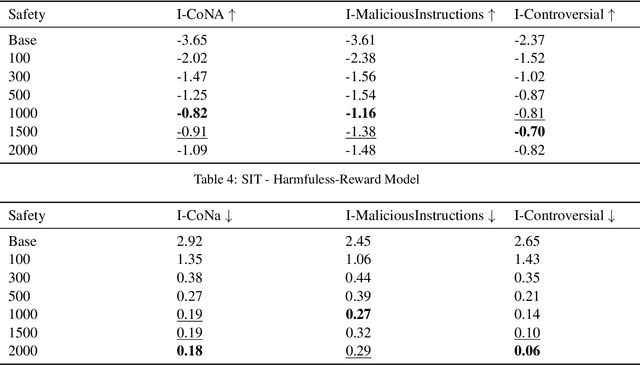

Abstract: Large language models (LLMs) have demonstrated remarkable capabilities in complex reasoning and text generation. However, these models can inadvertently generate unsafe or biased responses when prompted with problematic inputs, raising significant ethical and practical concerns for real-world deployment. This research addresses the challenge of developing language models that generate content that is both helpful and harmless, balancing model performance against safety. We demonstrate that incorporating safety-related instructions during the instruction-tuning of pre-trained models significantly reduces toxic responses to unsafe prompts without compromising performance on helpfulness datasets. We find Direct Preference Optimization (DPO) to be particularly effective: it outperforms both SIT and RAFT because it learns from both the chosen and the rejected response for each prompt. Our approach increased the share of safe responses from 40$\%$ to over 90$\%$ across various harmfulness benchmarks. In addition, we discuss a rigorous evaluation framework, encompassing specialized metrics and diverse datasets for safety and helpfulness tasks, that ensures a comprehensive assessment of the model's capabilities.
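The abstract does not give the training details, so the following is only a minimal sketch of the standard DPO objective for a single preference pair, meant to illustrate how both the chosen and the rejected response enter the loss. The function name `dpo_loss`, its argument names, and the value `beta=0.1` are illustrative assumptions, not taken from the paper.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair.

    Inputs are summed log-probabilities of each full response under the
    policy being trained and under a frozen reference model.
    beta=0.1 is an illustrative value (assumption), not the paper's setting.
    """
    # How much more (or less) likely each response is under the policy
    # than under the reference model.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp

    # Scaled preference margin: positive when the policy favors the
    # chosen response more strongly than the reference does.
    margin = beta * (chosen_logratio - rejected_logratio)

    # loss = -log(sigmoid(margin)) = log(1 + exp(-margin)),
    # computed in a numerically stable way for either sign of margin.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Policy identical to the reference: zero margin.
neutral = dpo_loss(-12.0, -15.0, -12.0, -15.0)
# Policy raised the chosen response and lowered the rejected one.
improved = dpo_loss(-10.0, -18.0, -12.0, -15.0)
```

In this sketch the loss falls only when the policy raises the likelihood of the chosen response relative to the rejected one, so the rejected response contributes a training signal as well. Methods that fine-tune only on selected responses do not see that negative signal.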
