Akiva Kleinerman

Explaining Decisions of Agents in Mixed-Motive Games

Jul 21, 2024

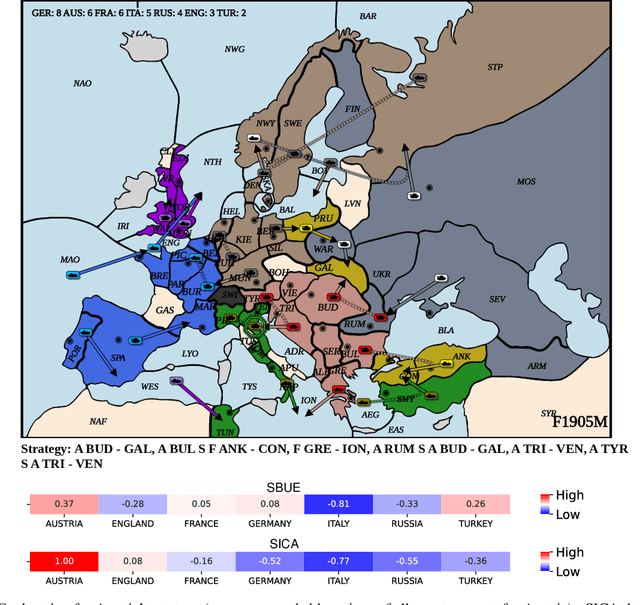

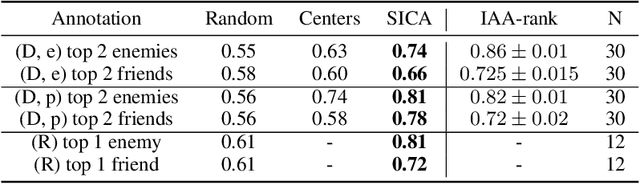

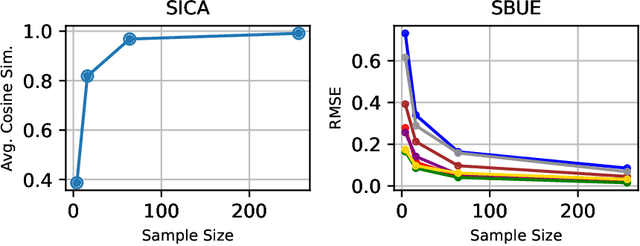

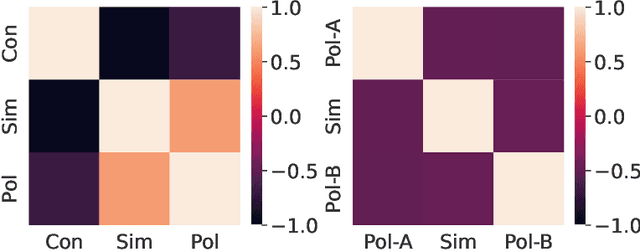

Abstract: In recent years, agents have become capable of communicating seamlessly via natural language and navigating in environments that involve cooperation and competition, a fact that can introduce social dilemmas. Due to the interleaving of cooperation and competition, understanding agents' decision-making in such environments is challenging, and humans can benefit from obtaining explanations. However, such environments and scenarios have rarely been explored in the context of explainable AI. While some explanation methods for cooperative environments can be applied in mixed-motive setups, they do not address inter-agent competition, cheap-talk, or implicit communication by actions. In this work, we design explanation methods to address these issues. Then, we proceed to demonstrate their effectiveness and usefulness for humans, using a non-trivial mixed-motive game as a test case. Lastly, we establish generality and demonstrate the applicability of the methods to other games, including one where we mimic human game actions using large language models.

Towards Outcome-Driven Patient Subgroups: A Machine Learning Analysis Across Six Depression Treatment Studies

Mar 30, 2023

Abstract: Major depressive disorder (MDD) is a heterogeneous condition; multiple underlying neurobiological substrates could be associated with treatment response variability. Understanding the sources of this variability and predicting outcomes has been elusive. Machine learning has shown promise in predicting treatment response in MDD, but one limitation has been the lack of clinical interpretability of machine learning models. We analyzed data from six clinical trials of pharmacological treatment for depression (total n = 5438) using the Differential Prototypes Neural Network (DPNN), a neural network model that derives patient prototypes which can be used to derive treatment-relevant patient clusters while learning to generate probabilities for differential treatment response. A model classifying remission and outputting individual remission probabilities for five first-line monotherapies and three combination treatments was trained using clinical and demographic data. Model validity and clinical utility were measured based on area under the curve (AUC) and expected improvement in sample remission rate with model-guided treatment, respectively. Post-hoc analyses yielded clusters (subgroups) based on patient prototypes learned during training. Prototypes were evaluated for interpretability by assessing differences in feature distributions and treatment-specific outcomes. A 3-prototype model achieved an AUC of 0.66 and an expected absolute improvement in population remission rate compared to the sample remission rate. We identified three treatment-relevant patient clusters which were clinically interpretable. It is possible to produce novel treatment-relevant patient profiles using machine learning models; doing so may improve precision medicine for depression. Note: This model is not currently the subject of any active clinical trials and is not intended for clinical use.

Big Data Analytics and AI in Mental Healthcare

Mar 12, 2019

Abstract: Mental health conditions cause a great deal of distress or impairment; depression alone will affect 11% of the world's population. The application of Artificial Intelligence (AI) and big-data technologies to mental health has great potential for personalizing treatment selection, prognosticating, monitoring for relapse, detecting and helping to prevent mental health conditions before they reach clinical-level symptomatology, and even delivering some treatments. However, unlike similar applications in other fields of medicine, there are several unique challenges in mental health applications which currently pose barriers towards the implementation of these technologies. Specifically, there are very few widely used or validated biomarkers in mental health, leading to a heavy reliance on patient- and clinician-derived questionnaire data as well as interpretation of new signals such as digital phenotyping. In addition, diagnosis lacks the objective 'gold standard' available for other conditions, such as in oncology, where clinicians and researchers can often rely on pathological analysis for confirmation of diagnosis. In this chapter we discuss the major opportunities, limitations and techniques used for improving mental healthcare through AI and big-data. We explore the computational, clinical and ethical considerations and best practices, and lay out the major research directions for the near future.

Providing Explanations for Recommendations in Reciprocal Environments

Jul 03, 2018

Abstract: Automated platforms which support users in finding a mutually beneficial match, such as online dating and job recruitment sites, are becoming increasingly popular. These platforms often include recommender systems that assist users in finding a suitable match. While recommender systems which provide explanations for their recommendations have shown many benefits, explanation methods have yet to be adapted and tested in recommending suitable matches. In this paper, we introduce and extensively evaluate the use of "reciprocal explanations" -- explanations which provide reasoning as to why both parties are expected to benefit from the match. Through an extensive empirical evaluation, in both simulated and real-world dating platforms with 287 human participants, we find that when the acceptance of a recommendation involves a significant cost (e.g., monetary or emotional), reciprocal explanations outperform standard explanation methods which consider the recommendation receiver alone. However, contrary to what one may expect, when the cost of accepting a recommendation is negligible, reciprocal explanations are shown to be less effective than the traditional explanation methods.
