Ajay Nayak

PyGraph: Robust Compiler Support for CUDA Graphs in PyTorch

Mar 25, 2025

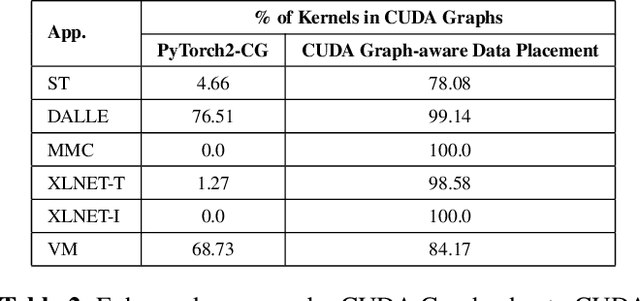

Abstract:CUDA Graphs -- a recent hardware feature introduced for NVIDIA GPUs -- aim to reduce CPU launch overhead by capturing and launching a series of GPU tasks (kernels) as a DAG. However, deploying CUDA Graphs faces several challenges today due to the static structure of a graph. It also incurs performance overhead due to data copy. In fact, we show a counter-intuitive result -- deploying CUDA Graphs hurts performance in many cases. We introduce PyGraph, a novel approach to automatically harness the power of CUDA Graphs within PyTorch2. Driven by three key observations, PyGraph embodies three novel optimizations: it enables wider deployment of CUDA Graphs, reduces GPU kernel parameter copy overheads, and selectively deploys CUDA Graphs based on a cost-benefit analysis. PyGraph seamlessly integrates with PyTorch2's compilation toolchain, enabling efficient use of CUDA Graphs without manual modifications to the code. We evaluate PyGraph across various machine learning benchmarks, demonstrating substantial performance improvements over PyTorch2.

vAttention: Dynamic Memory Management for Serving LLMs without PagedAttention

May 07, 2024

Abstract:Efficient use of GPU memory is essential for high throughput LLM inference. Prior systems reserved memory for the KV-cache ahead-of-time, resulting in wasted capacity due to internal fragmentation. Inspired by OS-based virtual memory systems, vLLM proposed PagedAttention to enable dynamic memory allocation for KV-cache. This approach eliminates fragmentation, enabling high-throughput LLM serving with larger batch sizes. However, to be able to allocate physical memory dynamically, PagedAttention changes the layout of KV-cache from contiguous virtual memory to non-contiguous virtual memory. This change requires attention kernels to be rewritten to support paging, and serving framework to implement a memory manager. Thus, the PagedAttention model leads to software complexity, portability issues, redundancy and inefficiency. In this paper, we propose vAttention for dynamic KV-cache memory management. In contrast to PagedAttention, vAttention retains KV-cache in contiguous virtual memory and leverages low-level system support for demand paging, that already exists, to enable on-demand physical memory allocation. Thus, vAttention unburdens the attention kernel developer from having to explicitly support paging and avoids re-implementation of memory management in the serving framework. We show that vAttention enables seamless dynamic memory management for unchanged implementations of various attention kernels. vAttention also generates tokens up to 1.97x faster than vLLM, while processing input prompts up to 3.92x and 1.45x faster than the PagedAttention variants of FlashAttention and FlashInfer.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge