Aigul Nugmanova

Style Transfer for Texts: Retrain, Report Errors, Compare with Rewrites

Aug 29, 2019

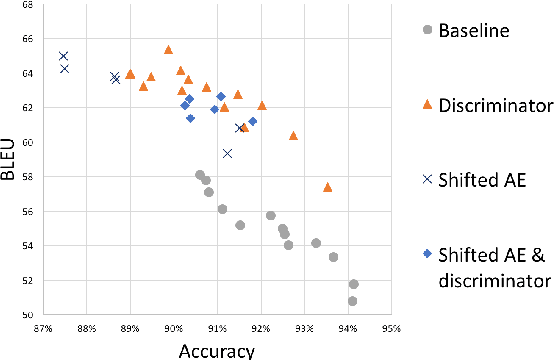

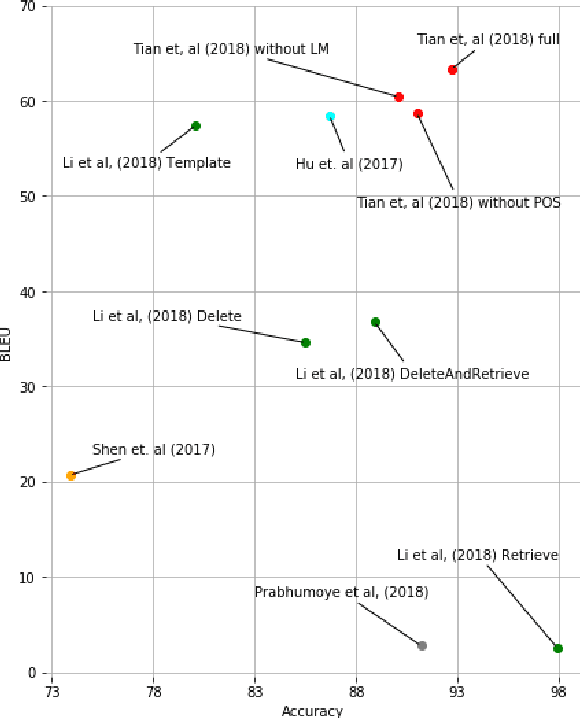

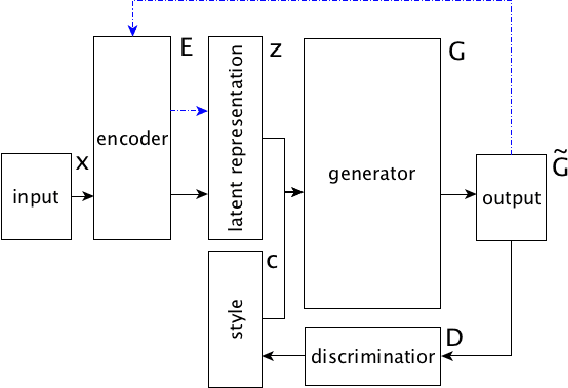

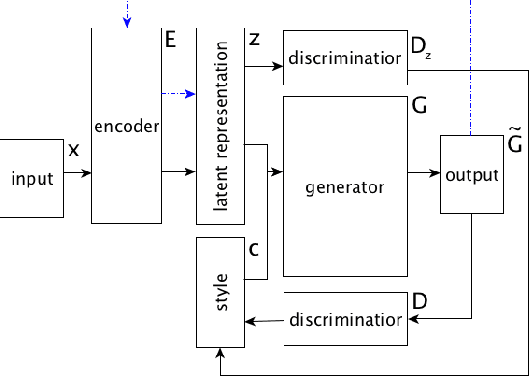

Abstract: This paper shows that the standard assessment methodology for style transfer has several significant problems. First, the standard metrics for style accuracy and semantics preservation vary significantly across re-runs, so error margins have to be reported for the obtained results. Second, beyond certain values of bilingual evaluation understudy (BLEU) between input and output and of sentiment-transfer accuracy, optimizing these two standard metrics diverges from the intuitive goal of the style transfer task. Finally, due to the nature of the task itself, there is a specific dependence between these two metrics that can easily be manipulated. Under these circumstances, we suggest taking BLEU between input and human-written reformulations into consideration for benchmarks. We also propose three new architectures that outperform the state of the art in terms of this metric.
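The abstract makes two evaluation recommendations: report error margins over re-runs, and score against human-written reformulations. The sketch below combines both. It computes corpus-level BLEU for each independent re-run against a set of human rewrites, then reports the mean and sample standard deviation across runs.

It rests on these assumptions:
- Scoring model outputs against the rewrites is our reading of the proposed benchmark.
- Tokenization is plain whitespace splitting.
- No smoothing is applied.
- `corpus_bleu` and `report` are hypothetical helpers, not the authors' code.

Published numbers are usually computed with a standard tool such as sacreBLEU.

```python
import math
import statistics
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU (0-100) with one reference per hypothesis.

    Assumes whitespace tokenization and no smoothing, so the score is 0.0
    whenever some n-gram order has no matches at all.
    """
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_tokens, ref_tokens = hyp.split(), ref.split()
        hyp_len += len(hyp_tokens)
        ref_len += len(ref_tokens)
        for n in range(1, max_n + 1):
            hyp_ngrams = ngrams(hyp_tokens, n)
            ref_ngrams = ngrams(ref_tokens, n)
            # Clipped counts: a hypothesis n-gram is credited at most as
            # many times as it occurs in the reference.
            matches[n - 1] += sum(min(c, ref_ngrams[g]) for g, c in hyp_ngrams.items())
            totals[n - 1] += max(len(hyp_tokens) - n + 1, 0)
    if hyp_len == 0 or min(matches) == 0:
        return 0.0
    # Geometric mean of the modified n-gram precisions.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize outputs that are shorter than the references.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)


def report(runs, references):
    """Mean and sample standard deviation of BLEU over independent re-runs."""
    scores = [corpus_bleu(outputs, references) for outputs in runs]
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical human-written reformulations and outputs of three re-runs.
references = ["the food was absolutely delicious", "the staff were friendly and helpful"]
runs = [
    ["the food was absolutely delicious", "the staff were friendly and helpful"],
    ["the food was really delicious", "the staff were friendly and helpful"],
    ["the food was absolutely delicious", "the staff were rude and helpful"],
]
mean, std = report(runs, references)
print(f"BLEU vs. rewrites = {mean:.1f} ± {std:.1f}")
```

Reporting the spread alongside the mean makes it visible when two systems differ by less than the run-to-run variation.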

Strategy of the Negative Sampling for Training Retrieval-Based Dialogue Systems

Nov 24, 2018

Abstract: The article describes a new approach to improving the quality of automated dialogue systems for customer support. The analysis in the paper demonstrates that the quality of a retrieval-based dialogue system depends on the choice of negative responses. The proposed approach chooses negative samples according to the distribution of responses in the training set. In this implementation, negative samples are drawn at random from the original response distribution and from "artificial" distributions of negative responses, such as the uniform distribution or a distribution obtained by transforming the original one. The results obtained for the implemented systems and reported in this paper confirm a significant improvement in the quality of automated dialogue systems when negative responses are drawn from the transformed distribution.
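The abstract does not say how the original distribution is transformed. The sketch below therefore uses a power transform as an illustrative stand-in: each distinct training response is weighted by its count raised to a parameter `alpha`.
- With `alpha = 1.0`, this reproduces the original distribution.
- With `alpha = 0.0`, it gives the uniform distribution.
- Values in between flatten the original one.

Negatives are then drawn from these weights, excluding the positive response of the current example. The transform, `alpha`, and the helper names `response_distribution` and `sample_negatives` are all hypothetical.

```python
import random
from collections import Counter


def response_distribution(responses, alpha=1.0):
    """Sampling probabilities over the distinct responses of a training set.

    alpha = 1.0 keeps the original (empirical) distribution, alpha = 0.0
    gives the uniform one, and 0 < alpha < 1 flattens the original.
    The power transform is an illustrative assumption.
    """
    counts = Counter(responses)
    candidates = list(counts)
    weights = [counts[r] ** alpha for r in candidates]
    total = sum(weights)
    return candidates, [w / total for w in weights]


def sample_negatives(positive, candidates, probs, k, rng):
    """Draw k negative responses (with replacement), never the positive one."""
    pool = [(c, p) for c, p in zip(candidates, probs) if c != positive]
    if not pool:
        raise ValueError("no candidate responses other than the positive one")
    responses, weights = zip(*pool)
    # random.Random.choices renormalizes the remaining weights internally.
    return rng.choices(responses, weights=weights, k=k)


# Hypothetical customer-support responses from a training set.
train_responses = [
    "please restart the router",
    "please restart the router",
    "please restart the router",
    "your order has shipped",
    "we have issued a refund",
]
rng = random.Random(0)
cands, probs = response_distribution(train_responses, alpha=0.5)
print(sample_negatives("your order has shipped", cands, probs, k=3, rng=rng))
```

Lowering `alpha` makes frequent, generic responses less dominant among the negatives, so rarer responses are sampled more often.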
