Ahtsham Manzoor

INFACT: An Online Human Evaluation Framework for Conversational Recommendation

Sep 07, 2022

Abstract: Conversational recommender systems (CRS) are interactive agents that support their users in recommendation-related goals through multi-turn conversations. Generally, a CRS can be evaluated in various dimensions. Today's CRS mainly rely on offline (computational) measures to assess the performance of their algorithms in comparison to different baselines. However, offline measures can have limitations, for example, when the metrics for comparing a newly generated response with a ground truth do not correlate with human perceptions, because various alternative generated responses might also be suitable in a given dialog situation. Current research on machine learning-based CRS models therefore acknowledges the importance of humans in the evaluation process, knowing that pure offline measures may not be sufficient for evaluating a highly interactive system like a CRS.

INSPIRED2: An Improved Dataset for Sociable Conversational Recommendation

Aug 08, 2022

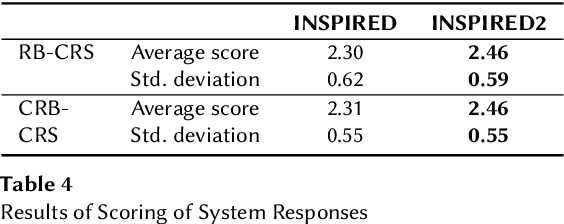

Abstract: Conversational recommender systems (CRS) that interact with users in natural language utilize recommendation dialogs collected with the help of paired humans, where one plays the role of a seeker and the other that of a recommender. These recommendation dialogs include items and entities that disclose seekers' preferences in natural language. However, in order to precisely model the seekers' preferences and respond consistently, CRS mainly rely on explicitly annotated items and entities that appear in the dialog, and usually leverage domain knowledge. In this work, we investigate INSPIRED, a dataset consisting of recommendation dialogs for sociable conversational recommendation, where items and entities were explicitly annotated using automatic keyword or pattern matching techniques. We found a large number of cases where items and entities were either wrongly annotated or not annotated at all. The question, however, remains to what extent automatic annotation techniques are effective. Moreover, it is unclear what the relative impact of poor and improved annotations is on the overall effectiveness of a CRS in terms of the consistency and quality of responses. In this regard, first, we manually fixed the annotations and removed the noise in the INSPIRED dataset. Second, we evaluated the performance of several benchmark CRS using both versions of the dataset. Our analyses suggest that with the improved version of the dataset, i.e., INSPIRED2, various benchmark CRS performed better and that dialogs are richer in knowledge concepts compared to when the original version is used. We release our improved dataset (INSPIRED2) publicly at https://github.com/ahtsham58/INSPIRED2.

Towards Retrieval-based Conversational Recommendation

Sep 06, 2021

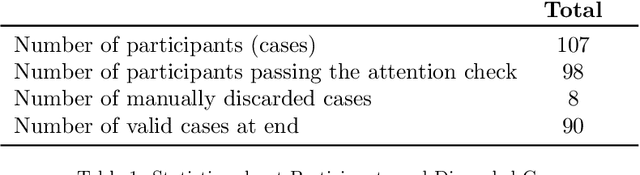

Abstract: Conversational recommender systems have attracted immense attention recently. The most recent approaches rely on neural models trained on recorded dialogs between humans, implementing an end-to-end learning process. These systems are commonly designed to generate responses given the user's utterances in natural language. One main challenge is that these generated responses have to be both appropriate for the given dialog context and grammatically and semantically correct. An alternative to such generation-based approaches is to retrieve responses from pre-recorded dialog data and to adapt them if needed. Such retrieval-based approaches were successfully explored in the context of general conversational systems, but have received limited attention in recent years for CRS. In this work, we re-assess the potential of such approaches and design and evaluate a novel technique for response retrieval and ranking. A user study (N=90) revealed that the responses by our system were on average of higher quality than those of two recent generation-based systems. We furthermore found that the quality ranking of the two generation-based approaches is not aligned with the results from the literature, which points to open methodological questions. Overall, our research underlines that retrieval-based approaches should be considered an alternative or complement to language generation approaches.

A Survey on Conversational Recommender Systems

Apr 01, 2020

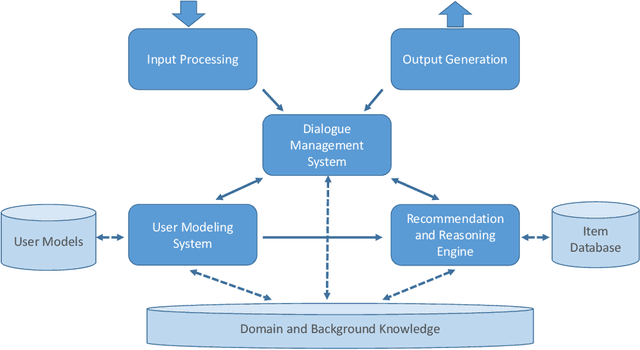

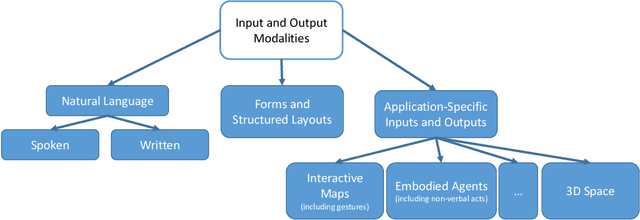

Abstract: Recommender systems are software applications that help users to find items of interest in situations of information overload. Current research often assumes a one-shot interaction paradigm, where the users' preferences are estimated based on past observed behavior and where the presentation of a ranked list of suggestions is the main, one-directional form of user interaction. Conversational recommender systems (CRS) take a different approach and support a richer set of interactions. These interactions can, for example, help to improve the preference elicitation process or allow the user to ask questions about the recommendations and to give feedback. The interest in CRS has significantly increased in the past few years. This development is mainly due to the significant progress in the area of natural language processing, the emergence of new voice-controlled home assistants, and the increased use of chatbot technology. With this paper, we provide a detailed survey of existing approaches to conversational recommendation. We categorize these approaches in various dimensions, e.g., in terms of the supported user intents or the knowledge they use in the background. Moreover, we discuss technological approaches, review how CRS are evaluated, and finally identify a number of gaps that deserve more research in the future.
