Adolfo G. Ramirez-Aristizabal

EEG2Mel: Reconstructing Sound from Brain Responses to Music

Jul 28, 2022

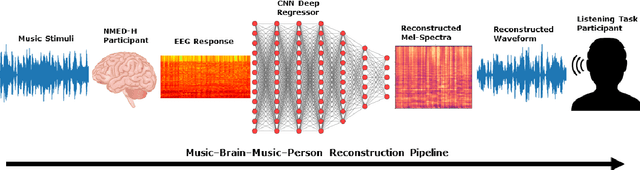

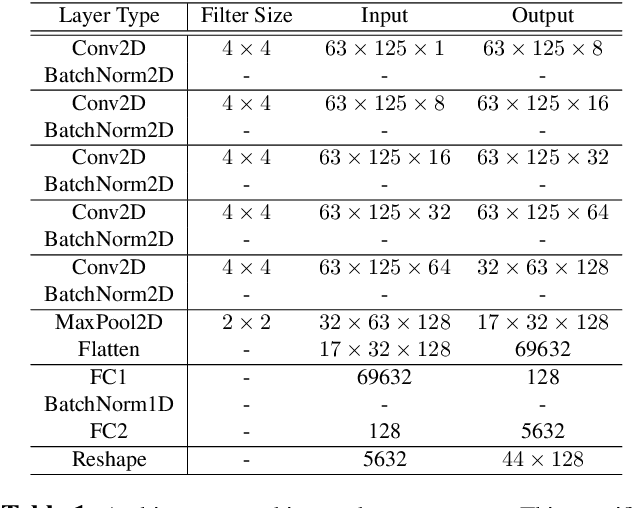

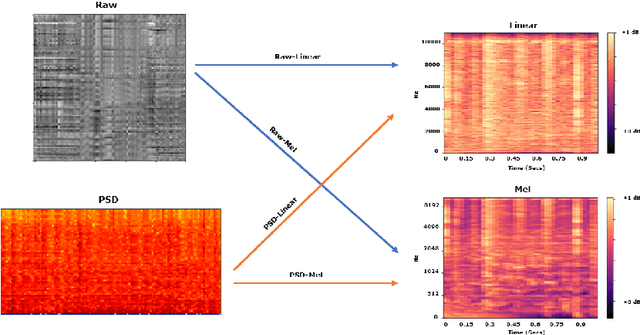

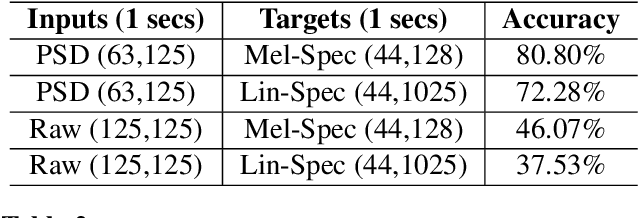

Abstract: Information retrieval from brain responses to auditory and visual stimuli has shown success through classification of song names and image classes presented to participants while recording EEG signals. Information retrieval in the form of reconstructing auditory stimuli has also shown some success, but here we improve on previous methods by reconstructing music stimuli well enough to be perceived and identified independently. Furthermore, deep learning models were trained on the time-aligned music stimulus spectrum for each corresponding one-second window of EEG recording, which greatly reduces the feature extraction steps needed compared to prior studies. The NMED-Tempo and NMED-Hindi datasets of participants passively listening to full-length songs were used to train and validate Convolutional Neural Network (CNN) regressors. The efficacy of raw voltage versus power spectrum inputs and of linear versus mel spectrogram outputs was tested, and all inputs and outputs were converted into 2D images. The quality of reconstructed spectrograms was assessed by training classifiers, which showed 81% accuracy for mel-spectrograms and 72% for linear spectrograms (10% chance accuracy). Lastly, reconstructions of auditory music stimuli were discriminated by listeners at an 85% success rate (50% chance) in a two-alternative match-to-sample task.
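The pairing of each one-second EEG window with its time-aligned stimulus spectrum could look roughly like the sketch below. It is only an illustration of the windowing idea, not the authors' pipeline: the function name `pair_windows`, the array layouts, and the sampling rates in the usage note are assumptions, since the abstract does not specify them.

```python
import numpy as np

def pair_windows(eeg, spec, eeg_rate, spec_rate):
    """Cut a recording into one-second (input, target) pairs.

    eeg:  array of shape (channels, samples), sampled at eeg_rate Hz (assumed layout)
    spec: array of shape (freq_bins, frames), with spec_rate frames per second
          (assumed layout; could be a linear or mel spectrogram of the stimulus)
    Returns X of shape (n_seconds, channels, eeg_rate) and
            Y of shape (n_seconds, freq_bins, spec_rate).
    """
    # Only keep whole seconds that exist in both the EEG and the spectrogram.
    n_sec = min(eeg.shape[1] // eeg_rate, spec.shape[1] // spec_rate)
    if n_sec < 1:
        raise ValueError("need at least one full second of EEG and spectrogram")
    X = np.stack([eeg[:, i * eeg_rate:(i + 1) * eeg_rate] for i in range(n_sec)])
    Y = np.stack([spec[:, i * spec_rate:(i + 1) * spec_rate] for i in range(n_sec)])
    return X, Y
```

For example, with a hypothetical 4-channel recording at 125 Hz and a 16-bin spectrogram at 20 frames per second, `pair_windows(eeg, spec, 125, 20)` yields one 2D input and one 2D target per second, which a CNN regressor could then treat as image pairs.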

Image-based EEG Classification of Brain Responses to Song Recordings

Jan 31, 2022

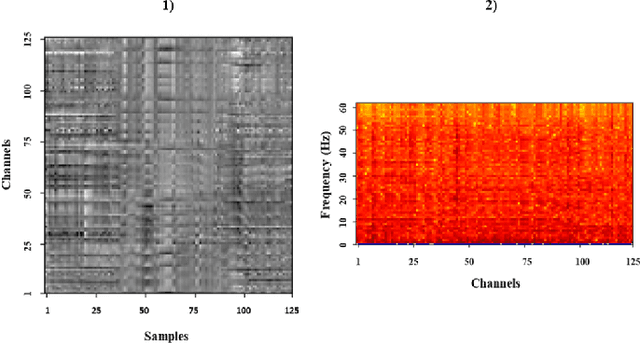

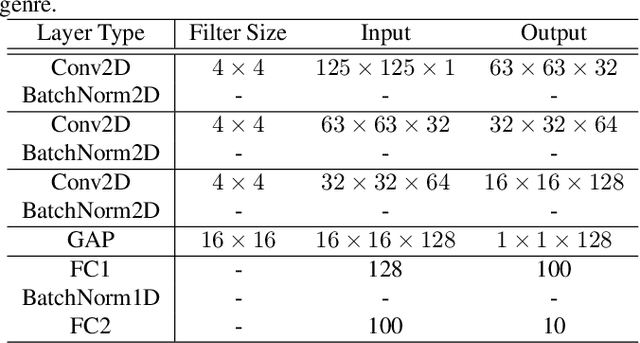

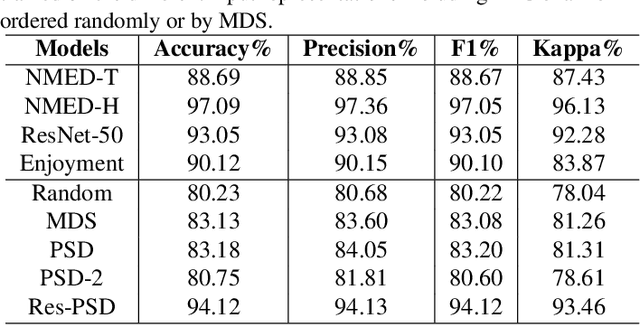

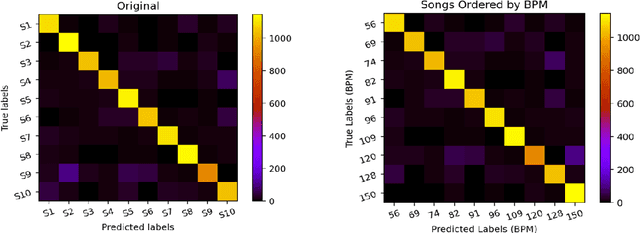

Abstract: Classifying EEG responses to naturalistic acoustic stimuli is of theoretical and practical importance, but standard approaches are limited by processing individual channels separately on very short sound segments (a few seconds or less). Recent developments have shown classification for music stimuli (~2 mins) by extracting spectral components from EEG and using convolutional neural networks (CNNs). This paper proposes an efficient method that maps raw EEG signals to the individual songs listened to, for end-to-end classification. EEG channels are treated as a dimension of a [Channel x Sample] image tile, and images are classified using CNNs. Our experimental results (88.7%) compete with state-of-the-art methods (85.0%), yet our classification task is more challenging because it processes longer stimuli that were similar to each other in perceptual quality and were unfamiliar to participants. We also adopt a transfer learning scheme using a pre-trained ResNet-50, confirming the effectiveness of transfer learning despite the image domains being unrelated to each other.
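One way to picture the [Channel x Sample] image tile is the sketch below, which rescales a raw EEG window to 8-bit grayscale and repeats it across three color planes so that an image model expecting RGB input (such as a pre-trained ResNet-50) could consume it. The min-max scaling, the RGB replication, and the name `eeg_to_tile` are assumptions made for illustration; the abstract does not describe the actual preprocessing.

```python
import numpy as np

def eeg_to_tile(window):
    """Turn a (channels, samples) EEG window into a (channels, samples, 3) uint8 image.

    Assumption: per-window min-max scaling to 0..255; the paper may normalize differently.
    """
    window = np.asarray(window, dtype=float)
    lo, hi = window.min(), window.max()
    # Guard against a constant window to avoid dividing by zero.
    scale = hi - lo if hi > lo else 1.0
    gray = ((window - lo) / scale * 255).astype(np.uint8)
    # Copy the single grayscale plane into three identical color planes.
    return np.repeat(gray[:, :, None], 3, axis=2)
```

Each row of the resulting image is one EEG channel and each column is one time sample, so the CNN sees all channels jointly rather than one channel at a time.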
