Adam Safron

Institute for Advanced Consciousness Studies, Santa Monica, CA, Allen Discovery Center

The Contingencies of Physical Embodiment Allow for Open-Endedness and Care

Oct 08, 2025

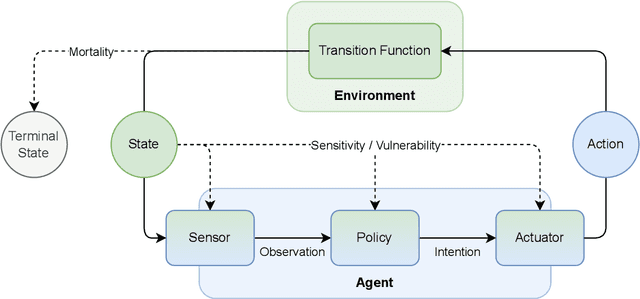

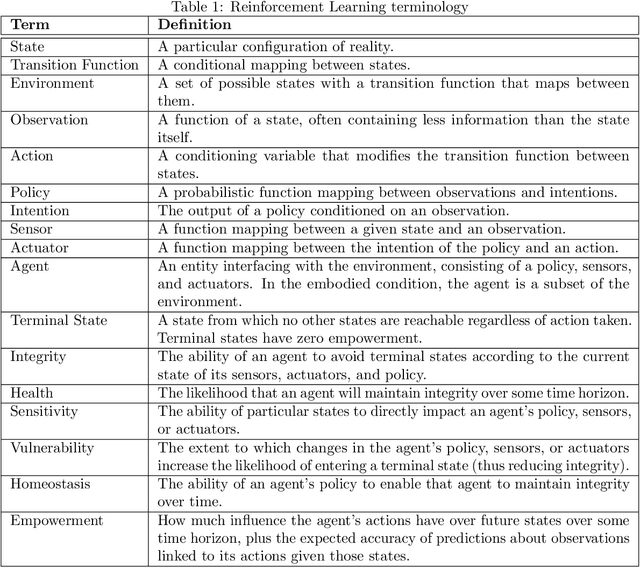

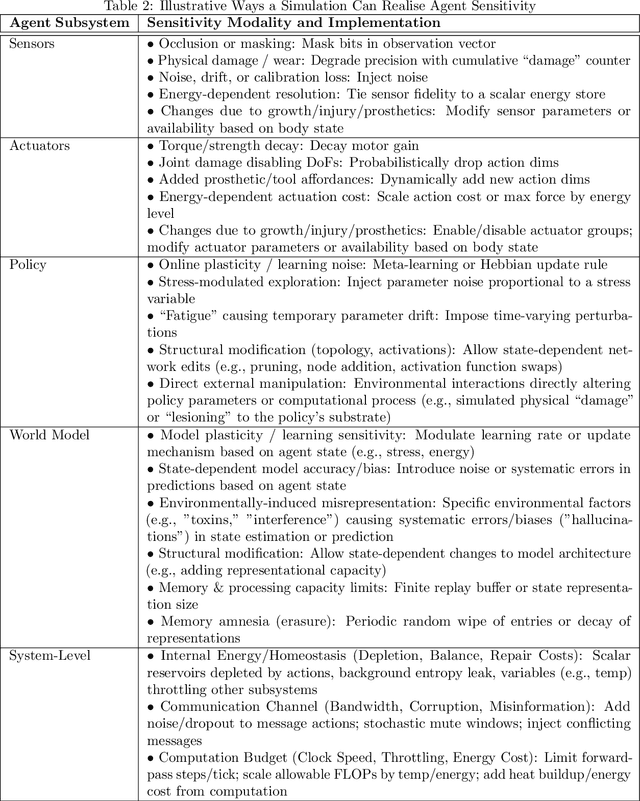

Abstract: Physical vulnerability and mortality are often seen as obstacles to be avoided in the development of artificial agents, which struggle to adapt to open-ended environments and provide aligned care. Meanwhile, biological organisms survive, thrive, and care for each other in an open-ended physical world with relative ease and efficiency. Understanding the role of the conditions of life in this disparity can aid in developing more robust, adaptive, and caring artificial agents. Here we define two minimal conditions for physical embodiment inspired by the existentialist phenomenology of Martin Heidegger: being-in-the-world (the agent is a part of the environment) and being-towards-death (unless counteracted, the agent drifts toward terminal states due to the second law of thermodynamics). We propose that from these conditions we can obtain both a homeostatic drive - aimed at maintaining integrity and avoiding death by expending energy to learn and act - and an intrinsic drive to continue to do so in as many ways as possible. Drawing inspiration from Friedrich Nietzsche's existentialist concept of will-to-power, we examine how intrinsic drives to maximize control over future states, e.g., empowerment, allow agents to increase the probability that they will be able to meet their future homeostatic needs, thereby enhancing their capacity to maintain physical integrity. We formalize these concepts within a reinforcement learning framework, which enables us to examine how intrinsically driven embodied agents learning in open-ended multi-agent environments may cultivate the capacities for open-endedness and care.

Relational Norms for Human-AI Cooperation

Feb 17, 2025

Abstract: How we should design and interact with social artificial intelligence depends on the socio-relational role the AI is meant to emulate or occupy. In human society, relationships such as teacher-student, parent-child, neighbors, siblings, or employer-employee are governed by specific norms that prescribe or proscribe cooperative functions including hierarchy, care, transaction, and mating. These norms shape our judgments of what is appropriate for each partner. For example, workplace norms may allow a boss to give orders to an employee, but not vice versa, reflecting hierarchical and transactional expectations. As AI agents and chatbots powered by large language models are increasingly designed to serve roles analogous to human positions - such as assistant, mental health provider, tutor, or romantic partner - it is imperative to examine whether and how human relational norms should extend to human-AI interactions. Our analysis explores how differences between AI systems and humans, such as the absence of conscious experience and immunity to fatigue, may affect an AI's capacity to fulfill relationship-specific functions and adhere to corresponding norms. This analysis, which is a collaborative effort by philosophers, psychologists, relationship scientists, ethicists, legal experts, and AI researchers, carries important implications for AI systems design, user behavior, and regulation. While we accept that AI systems can offer significant benefits such as increased availability and consistency in certain socio-relational roles, they also risk fostering unhealthy dependencies or unrealistic expectations that could spill over into human-human relationships. We propose that understanding and thoughtfully shaping (or implementing) suitable human-AI relational norms will be crucial for ensuring that human-AI interactions are ethical, trustworthy, and favorable to human well-being.

Dream to Explore: Adaptive Simulations for Autonomous Systems

Oct 27, 2021

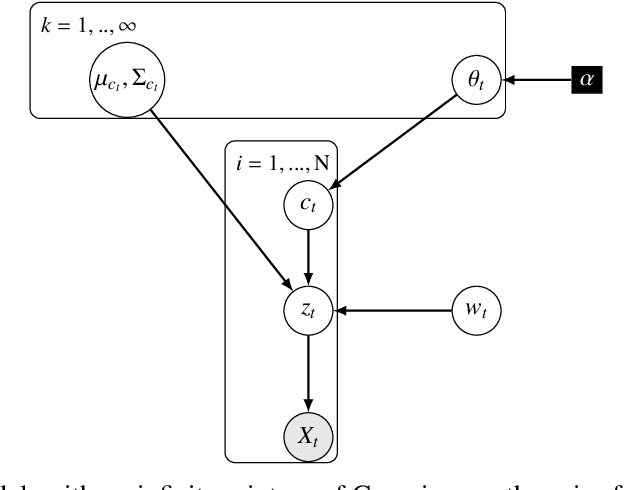

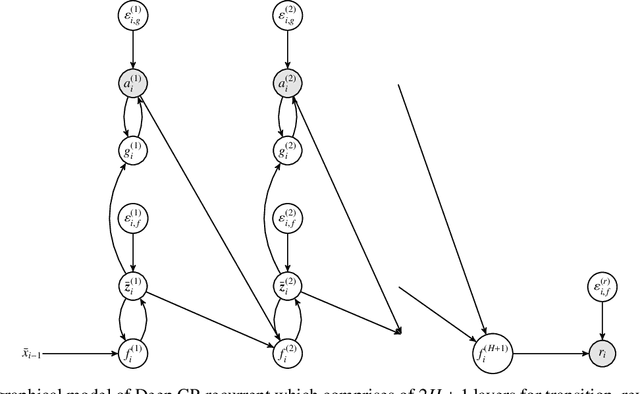

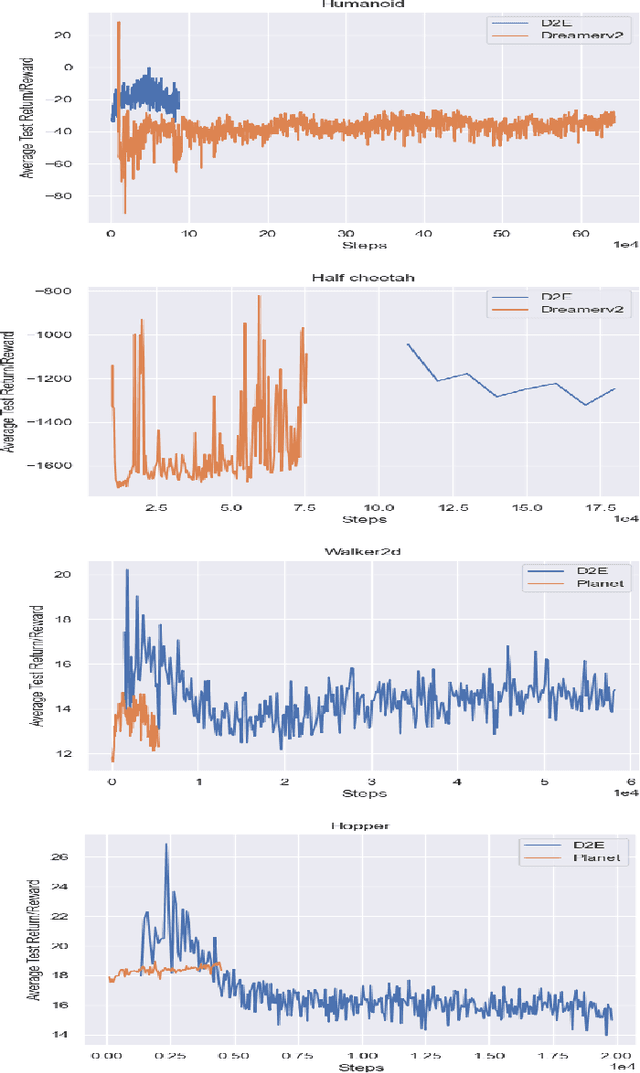

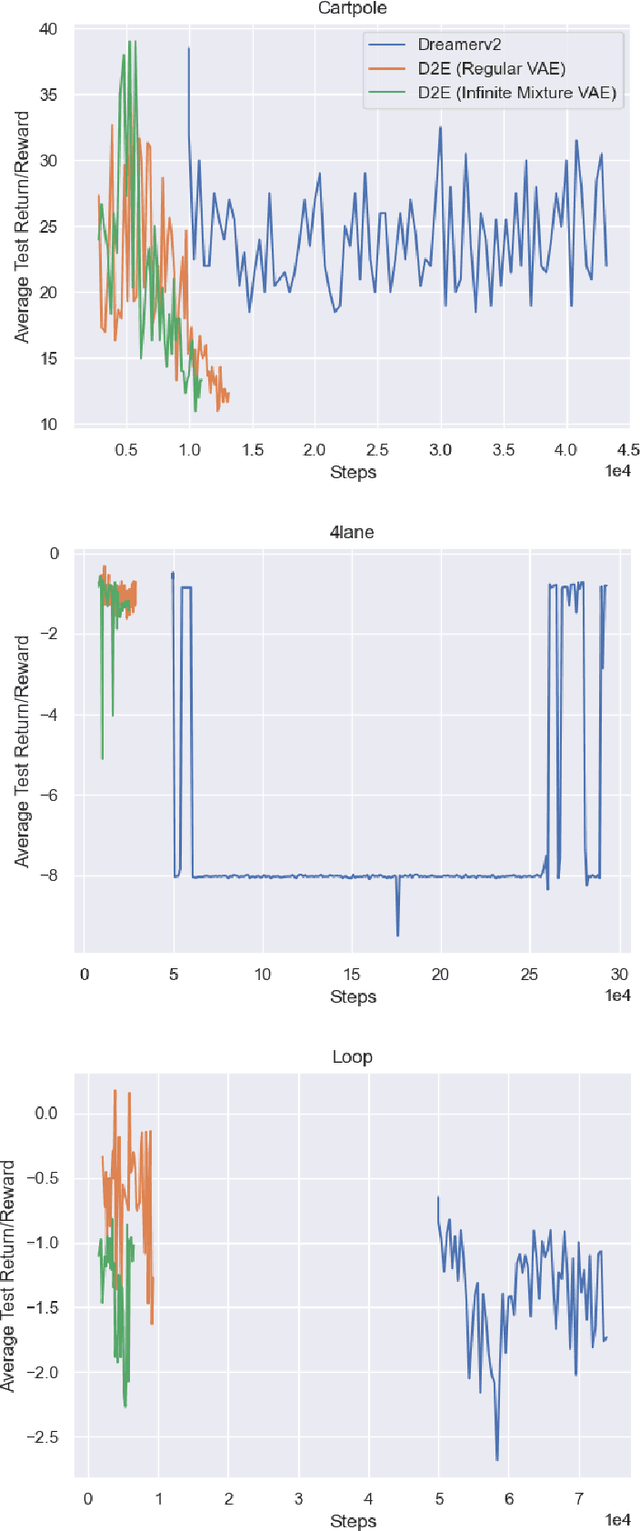

Abstract: One's ability to learn a generative model of the world without supervision depends on the extent to which one can construct abstract knowledge representations that generalize across experiences. To this end, capturing an accurate statistical structure from observational data provides useful inductive biases that can be transferred to novel environments. Here, we tackle the problem of learning to control dynamical systems by applying Bayesian nonparametric methods, which we use to solve visual servoing tasks. This is accomplished by first learning a state space representation, then inferring environmental dynamics and improving the policies through imagined future trajectories. Bayesian nonparametric models provide automatic model adaptation, which not only combats underfitting and overfitting, but also allows the model's unbounded dimension to be both flexible and computationally tractable. By employing Gaussian processes to discover latent world dynamics, we mitigate common data efficiency issues observed in reinforcement learning and avoid introducing explicit model bias by describing the system's dynamics. Our algorithm jointly learns a world model and policy by optimizing a variational lower bound of a log-likelihood with respect to the expected free energy minimization objective function. Finally, we compare the performance of our model with the state-of-the-art alternatives for continuous control tasks in simulated environments.

Integrative Biological Simulation, Neuropsychology, and AI Safety

Nov 07, 2018

Abstract: We propose a biologically-inspired research agenda with parallel tracks aimed at AI and AI safety. The bottom-up component consists of building a sequence of biophysically realistic simulations of simple organisms such as the nematode Caenorhabditis elegans, the fruit fly Drosophila melanogaster, and the zebrafish Danio rerio to serve as platforms for research into AI algorithms and system architectures. The top-down component consists of an approach to value alignment that grounds AI goal structures in neuropsychology. Our belief is that parallel pursuit of these tracks will inform the development of value-aligned AI systems that have been inspired by embodied organisms with sensorimotor integration. An important set of side benefits is that the research trajectories we describe here are grounded in long-standing intellectual traditions within existing research communities and funding structures. In addition, these research programs overlap with significant contemporary themes in the biological and psychological sciences such as data/model integration and reproducibility.

AI Safety and Reproducibility: Establishing Robust Foundations for the Neuropsychology of Human Values

Sep 08, 2018

Abstract: We propose the creation of a systematic effort to identify and replicate key findings in neuropsychology and allied fields related to understanding human values. Our aim is to ensure that research underpinning the value alignment problem of artificial intelligence has been sufficiently validated to play a role in the design of AI systems.

* 5 pages
