Abdur R. Shahid

Evaluating Apple Intelligence's Writing Tools for Privacy Against Large Language Model-Based Inference Attacks: Insights from Early Datasets

Jun 04, 2025

Abstract: The misuse of Large Language Models (LLMs) to infer emotions from text for malicious purposes, known as emotion inference attacks, poses a significant threat to user privacy. In this paper, we investigate the potential of Apple Intelligence's writing tools, integrated across iPhone, iPad, and MacBook, to mitigate these risks through text modifications such as rewriting and tone adjustment. By developing early novel datasets specifically for this purpose, we empirically assess how different text modifications influence LLM-based detection. This capability suggests strong potential for Apple Intelligence's writing tools as privacy-preserving mechanisms. Our findings lay the groundwork for future adaptive rewriting systems capable of dynamically neutralizing sensitive emotional content to enhance user privacy. To the best of our knowledge, this research provides the first empirical analysis of Apple Intelligence's text-modification tools within a privacy-preservation context, with the broader goal of developing on-device, user-centric privacy-preserving mechanisms to protect against advanced LLM-based inference attacks on deployed systems.

Sponge Attacks on Sensing AI: Energy-Latency Vulnerabilities and Defense via Model Pruning

May 09, 2025

Abstract: Recent studies have shown that sponge attacks can significantly increase the energy consumption and inference latency of deep neural networks (DNNs). However, prior work has focused primarily on computer vision and natural language processing tasks, overlooking the growing use of lightweight AI models in sensing-based applications on resource-constrained devices, such as those in Internet of Things (IoT) environments. These attacks pose serious threats of energy depletion and latency degradation in systems where limited battery capacity and real-time responsiveness are critical for reliable operation. This paper makes two key contributions. First, we present the first systematic exploration of energy-latency sponge attacks targeting sensing-based AI models. Using wearable sensing-based AI as a case study, we demonstrate that sponge attacks can substantially degrade performance by increasing energy consumption, leading to faster battery drain, and by prolonging inference latency. Second, to mitigate such attacks, we investigate model pruning, a widely adopted compression technique for resource-constrained AI, as a potential defense. Our experiments show that pruning-induced sparsity significantly improves model resilience against sponge poisoning. We also quantify the trade-offs between model efficiency and attack resilience, offering insights into the security implications of model compression in sensing-based AI systems deployed in IoT environments.

Exploring Audio Editing Features as User-Centric Privacy Defenses Against Emotion Inference Attacks

Jan 30, 2025

Abstract: The rapid proliferation of speech-enabled technologies, including virtual assistants, video conferencing platforms, and wearable devices, has raised significant privacy concerns, particularly regarding the inference of sensitive emotional information from audio data. Existing privacy-preserving methods often compromise usability and security, limiting their adoption in practical scenarios. This paper introduces a novel, user-centric approach that leverages familiar audio editing techniques, specifically pitch and tempo manipulation, to protect emotional privacy without sacrificing usability. By analyzing popular audio editing applications on Android and iOS platforms, we identified these features as both widely available and usable. We rigorously evaluated their effectiveness against a threat model, considering adversarial attacks from diverse sources, including Deep Neural Networks (DNNs), Large Language Models (LLMs), and reversibility testing. Our experiments, conducted on three distinct datasets, demonstrate that pitch and tempo manipulation effectively obfuscates emotional data. Additionally, we explore the design principles for lightweight, on-device implementation to ensure broad applicability across various devices and platforms.

TriplePlay: Enhancing Federated Learning with CLIP for Non-IID Data and Resource Efficiency

Sep 09, 2024

Abstract: The rapid advancement and increasing complexity of pretrained models, exemplified by CLIP, offer significant opportunities as well as challenges for Federated Learning (FL), a critical component of privacy-preserving artificial intelligence. This research delves into the intricacies of integrating large foundation models like CLIP within FL frameworks to enhance privacy, efficiency, and adaptability across heterogeneous data landscapes. It specifically addresses the challenges posed by non-IID data distributions, the computational and communication overheads of leveraging such complex models, and the skewed representation of classes within datasets. We propose TriplePlay, a framework that integrates CLIP as an adapter to enhance FL's adaptability and performance across diverse data distributions. This approach addresses the long-tail distribution challenge to ensure fairness while reducing resource demands through quantization and low-rank adaptation techniques. Our simulation results demonstrate that TriplePlay effectively decreases GPU usage costs and speeds up the learning process, achieving convergence with reduced communication overhead.

Large Language Model Integrated Healthcare Cyber-Physical Systems Architecture

Jul 25, 2024

Abstract: Cyber-physical systems have become an essential part of the modern healthcare industry. Healthcare cyber-physical systems (HCPS) combine physical and cyber components to improve the healthcare industry. While HCPS have many advantages, they also have some drawbacks, such as a lengthy data entry process, a lack of real-time processing, and limited real-time patient visualization. To overcome these issues, this paper presents an innovative approach to integrating large language models (LLMs) to enhance the efficiency of the healthcare system. By incorporating LLMs at various layers, HCPS can leverage advanced AI capabilities to improve patient outcomes, advance data processing, and enhance decision-making.

Distributed Threat Intelligence at the Edge Devices: A Large Language Model-Driven Approach

May 14, 2024

Abstract: With the proliferation of edge devices, the attack surface on these devices has increased significantly. The decentralized deployment of threat intelligence on edge devices, coupled with adaptive machine learning techniques such as the in-context learning feature of large language models (LLMs), represents a promising paradigm for enhancing cybersecurity on low-powered edge devices. This approach involves the deployment of lightweight machine learning models directly onto edge devices to analyze local data streams, such as network traffic and system logs, in real time. Additionally, distributing computational tasks to an edge server reduces latency and improves responsiveness while also enhancing privacy by processing sensitive data locally. LLM servers can enable these edge servers to autonomously adapt to evolving threats and attack patterns, continuously updating their models to improve detection accuracy and reduce false positives. Furthermore, collaborative learning mechanisms facilitate secure and trustworthy peer-to-peer knowledge sharing among edge devices, enhancing the collective intelligence of the network and enabling dynamic threat mitigation measures such as device quarantine in response to detected anomalies. The scalability and flexibility of this approach make it well-suited for diverse and evolving network environments, as edge devices only send suspicious information such as network traffic and system log changes, offering a resilient and efficient solution to combat emerging cyber threats at the network edge. Thus, our proposed framework can improve edge computing security through better cyber threat detection and through mitigation by isolating the edge devices from the network.

Towards Sustainable SecureML: Quantifying Carbon Footprint of Adversarial Machine Learning

Mar 27, 2024

Abstract: The widespread adoption of machine learning (ML) across various industries has raised sustainability concerns due to its substantial energy usage and carbon emissions. This issue becomes more pressing in adversarial ML, which focuses on enhancing model security against different network-based attacks. Implementing defenses in ML systems often necessitates additional computational resources and network security measures, exacerbating their environmental impacts. In this paper, we pioneer the first investigation into adversarial ML's carbon footprint, providing empirical evidence connecting greater model robustness to higher emissions. Addressing the critical need to quantify this trade-off, we introduce the Robustness Carbon Trade-off Index (RCTI). This novel metric, inspired by economic elasticity principles, captures the sensitivity of carbon emissions to changes in adversarial robustness. We demonstrate the RCTI through an experiment involving evasion attacks, analyzing the interplay between robustness against attacks, performance, and carbon emissions.

Label Flipping Data Poisoning Attack Against Wearable Human Activity Recognition System

Aug 17, 2022

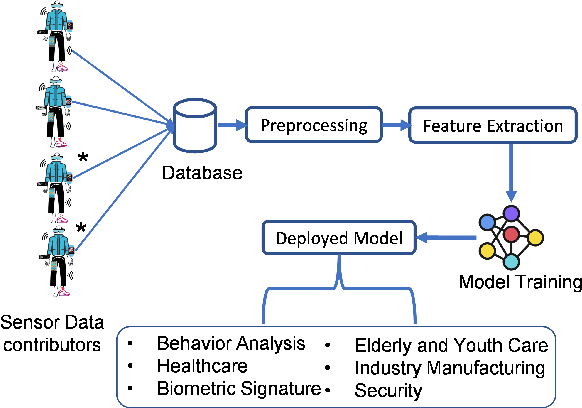

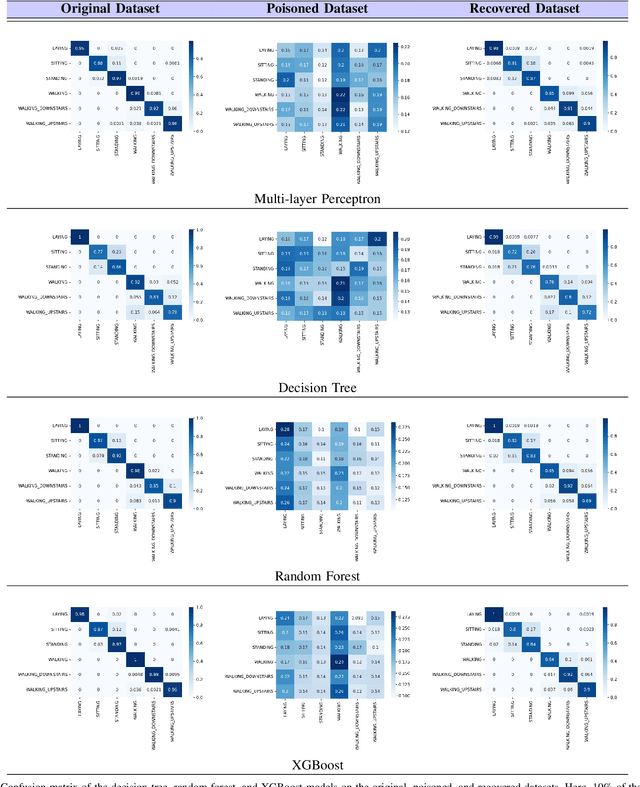

Abstract: Human Activity Recognition (HAR) is the problem of interpreting sensor data as human movement using an efficient machine learning (ML) approach. HAR systems rely on data from untrusted users, making them susceptible to data poisoning attacks. In a poisoning attack, attackers manipulate the sensor readings to contaminate the training set, misleading the HAR system into producing erroneous outcomes. This paper presents the design of a label flipping data poisoning attack for a HAR system, where the label of a sensor reading is maliciously changed in the data collection phase. Due to high noise and uncertainty in the sensing environment, such an attack poses a severe threat to the recognition system. Moreover, vulnerability to label flipping attacks is dangerous when activity recognition models are deployed in safety-critical applications. This paper sheds light on how to carry out the attack in practice through smartphone-based sensor data collection applications. To our knowledge, this is an early research work that explores attacking HAR models via label flipping poisoning. We implement the proposed attack and test it on activity recognition models based on the following machine learning algorithms: multi-layer perceptron, decision tree, random forest, and XGBoost. Finally, we evaluate the effectiveness of a K-nearest neighbors (KNN)-based defense mechanism against the proposed attack.
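As a rough illustration of the two ideas named in this abstract, the following is a minimal sketch of random label flipping followed by a KNN majority-vote relabeling step. It is not the paper's implementation: the function names `flip_labels` and `knn_relabel`, the flip rate, the choice of `k`, and the toy one-dimensional features are all assumptions made for the example.

```python
import random
from collections import Counter


def flip_labels(labels, flip_rate, classes, seed=0):
    """Return a poisoned copy of `labels` plus the flipped indices.

    A fraction `flip_rate` of the samples is chosen at random and each
    chosen label is replaced by a different class (hypothetical attack
    policy; the paper may select samples and targets differently).
    """
    rng = random.Random(seed)
    n_flip = int(len(labels) * flip_rate)
    flipped = rng.sample(range(len(labels)), n_flip)
    poisoned = list(labels)
    for i in flipped:
        poisoned[i] = rng.choice([c for c in classes if c != labels[i]])
    return poisoned, sorted(flipped)


def knn_relabel(features, labels, k=3):
    """Relabel every sample with the majority label of its k nearest
    neighbors (squared Euclidean distance, the sample itself excluded)."""
    cleaned = []
    for i, x in enumerate(features):
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(x, y)), j)
            for j, y in enumerate(features)
            if j != i
        )
        votes = Counter(labels[j] for _, j in dists[:k])
        cleaned.append(votes.most_common(1)[0][0])
    return cleaned


if __name__ == "__main__":
    # Toy data: two well-separated clusters standing in for sensor features.
    features = [(float(v),) for v in range(5)] + [(100.0 + v,) for v in range(5)]
    clean = ["walk"] * 5 + ["sit"] * 5
    poisoned, flipped = flip_labels(clean, 0.2, ["walk", "sit"])
    repaired = knn_relabel(features, poisoned, k=3)
    print("flipped indices:", flipped)
    print("labels recovered:", repaired == clean)
```

In this sketch the defense works only because the toy clusters are far apart and few labels are flipped; with noisy sensor data or a higher flip rate, neighbor votes can themselves be poisoned.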
