A. W. Tow

Design of a Multi-Modal End-Effector and Grasping System: How Integrated Design helped win the Amazon Robotics Challenge

Jun 19, 2018

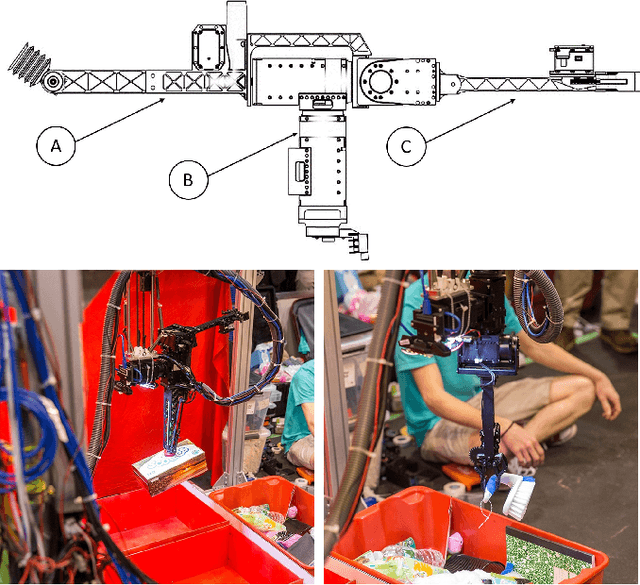

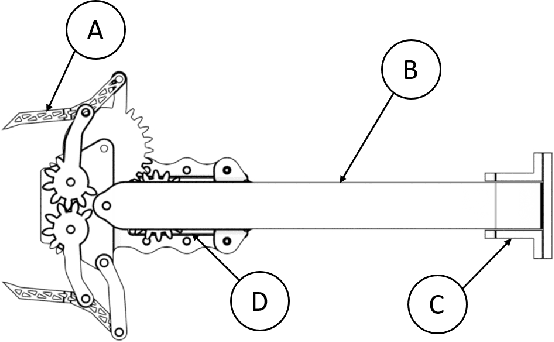

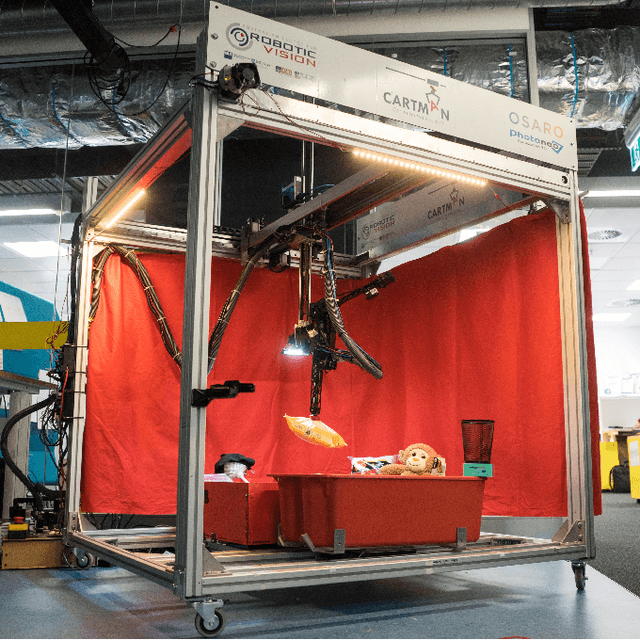

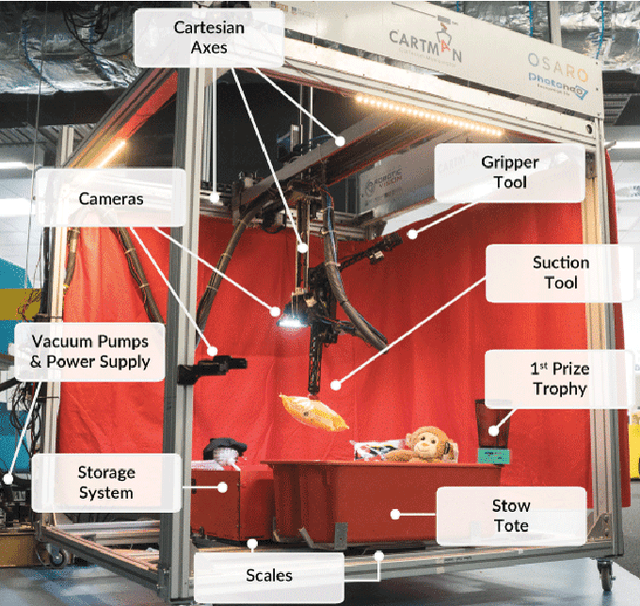

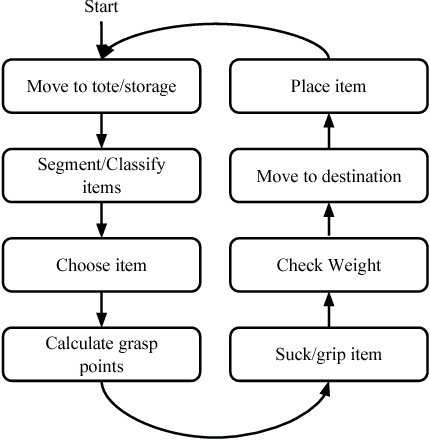

Abstract: We present the grasping system and design approach behind Cartman, the winning entrant in the 2017 Amazon Robotics Challenge. We investigate the design processes leading up to the final iteration of the system and describe the emergent solution by comparing it with key robotics design aspects. Following our experience, we propose a new design aspect, precision vs. redundancy, that should be considered alongside the previously proposed design aspects of modularity vs. integration, generality vs. assumptions, computation vs. embodiment and planning vs. feedback. The system makes strong use of redundancy in design by implementing complementary tools, a suction gripper and a parallel gripper. This multi-modal end-effector is combined with three grasp synthesis algorithms to accommodate the range of objects provided by Amazon during the challenge. We provide a detailed system description and an evaluation of its performance before discussing the broader nature of the system with respect to the key aspects of robotic design as initially proposed by the winners of the first Amazon Picking Challenge. To address the principal nature of our grasping system and the reason for its success, we propose an additional robotic design aspect, 'precision vs. redundancy'. The full design of our robotic system, including the end-effector, is open sourced and available at http://juxi.net/projects/AmazonRoboticsChallenge/

Mechanical Design of a Cartesian Manipulator for Warehouse Pick and Place

Jun 18, 2018

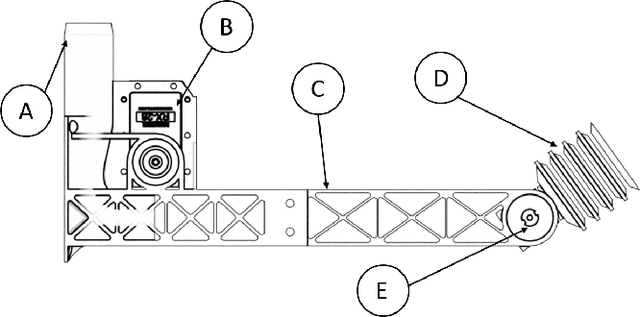

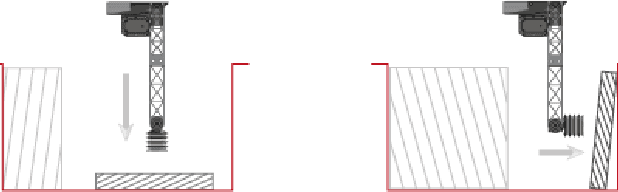

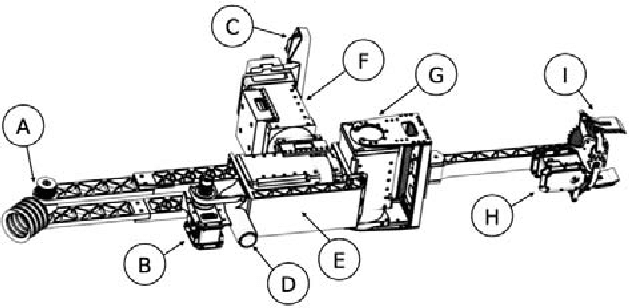

Abstract: Robotic manipulation and grasping in cluttered and unstructured environments is a current challenge for robotics. Enabling robots to operate in these challenging environments has direct applications from automating warehouses to harvesting fruit in agriculture. One of the main challenges associated with these difficult robotic manipulation tasks is the motion planning and control problem for multi-DoF (Degree of Freedom) manipulators. This paper presents the design and performance evaluation of a low-cost Cartesian manipulator, Cartman, which took first place in the Amazon Robotics Challenge 2017. It can perform pick and place tasks of household items in a cluttered environment. The robot achieves linear speeds of 1 m/s and angular speeds of 1.5 rad/s, with sub-millimetre static accuracy and a safe payload capacity of 2 kg. Cartman can be produced for under 10 000 AUD. The complete design is open sourced and can be found at http://juxi.net/projects/AmazonRoboticsChallenge.

Cartman: The low-cost Cartesian Manipulator that won the Amazon Robotics Challenge

Feb 26, 2018

Abstract: The Amazon Robotics Challenge enlisted sixteen teams to each design a pick-and-place robot for autonomous warehousing, addressing development in robotic vision and manipulation. This paper presents the design of our custom-built, cost-effective, Cartesian robot system Cartman, which won first place in the competition finals by stowing 14 (out of 16) and picking all 9 items in 27 minutes, scoring a total of 272 points. We highlight our experience-centred design methodology and key aspects of our system that contributed to our competitiveness. We believe these aspects are crucial to building robust and effective robotic systems.

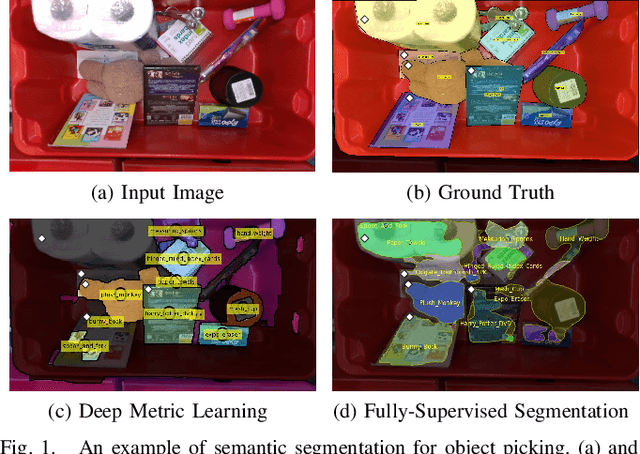

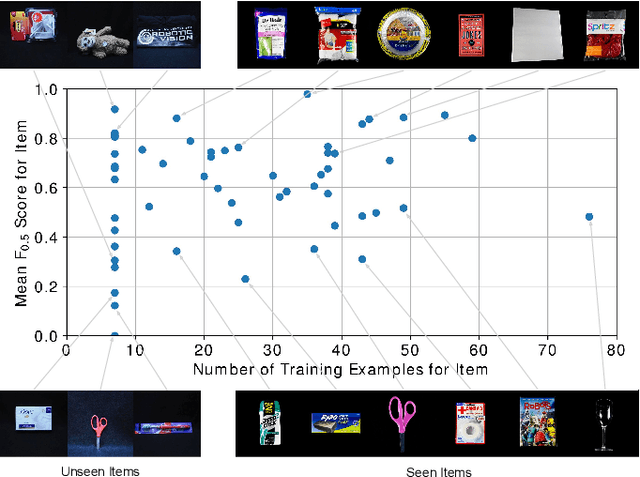

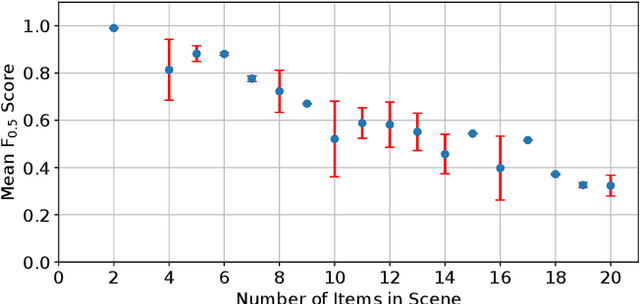

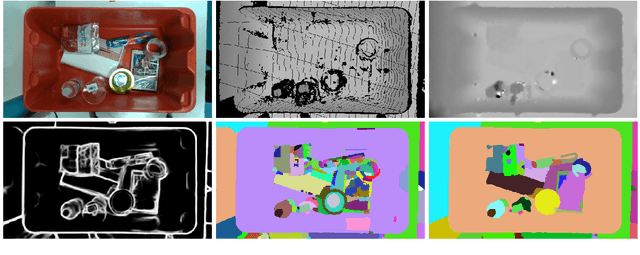

Semantic Segmentation from Limited Training Data

Sep 22, 2017

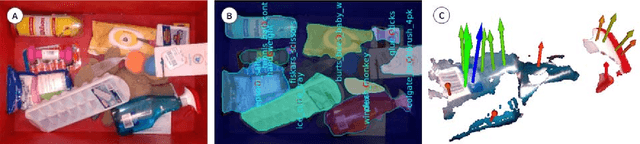

Abstract: We present our approach for robotic perception in cluttered scenes that led to winning the recent Amazon Robotics Challenge (ARC) 2017. Next to small objects with shiny and transparent surfaces, the biggest challenge of the 2017 competition was the introduction of unseen categories. In contrast to traditional approaches, which require large collections of annotated data and many hours of training, the task here was to obtain a robust perception pipeline with only a few minutes of data acquisition and training time. To that end, we present two strategies that we explored. One is a deep metric learning approach that works in three separate steps: semantic-agnostic boundary detection, patch classification and pixel-wise voting. The other is a fully-supervised semantic segmentation approach with efficient dataset collection. We conduct an extensive analysis of the two methods on our ARC 2017 dataset. Interestingly, only a few examples of each class are sufficient to fine-tune even very deep convolutional neural networks for this specific task.
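The abstract does not spell out how the pixel-wise voting step works, so the following is only a minimal sketch of one plausible reading: each classified patch casts a vote for its label on every pixel it covers, and each pixel takes the label with the most votes. The function name `pixelwise_vote`, the `(top, left, size, label)` patch format and the toy labels are hypothetical and not taken from the paper.

```python
from collections import Counter


def pixelwise_vote(height, width, patch_predictions):
    """Aggregate per-patch labels into a per-pixel label map by majority vote.

    patch_predictions is an iterable of (top, left, size, label) tuples
    describing square patches. This format is an assumption made for
    illustration, not the paper's data structure.
    """
    # One vote counter per pixel.
    votes = [[Counter() for _ in range(width)] for _ in range(height)]

    for top, left, size, label in patch_predictions:
        # Clip the patch to the image bounds before voting.
        for r in range(max(top, 0), min(top + size, height)):
            for c in range(max(left, 0), min(left + size, width)):
                votes[r][c][label] += 1

    # Pixels covered by no patch stay unlabeled (None).
    return [
        [cell.most_common(1)[0][0] if cell else None for cell in row]
        for row in votes
    ]


# Toy 3x3 image with three overlapping 2x2 patches (hypothetical labels).
patches = [
    (0, 0, 2, "bottle"),
    (0, 1, 2, "sponge"),
    (1, 1, 2, "sponge"),
]
labels = pixelwise_vote(3, 3, patches)
```

In this toy case the centre pixel is covered by all three patches and receives two votes for "sponge" against one for "bottle". Ties are resolved here by whichever label was voted first, which is an arbitrary choice of the sketch.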
