A. Selcuk Uluagac

Adversarial Attacks to Machine Learning-Based Smart Healthcare Systems

Oct 07, 2020

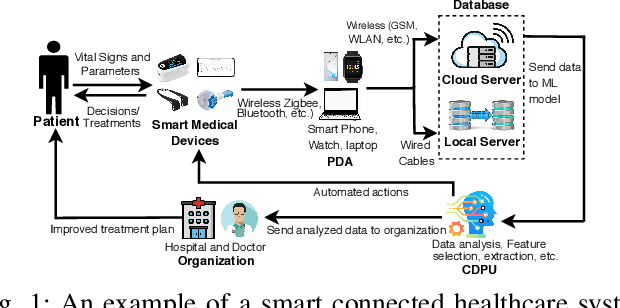

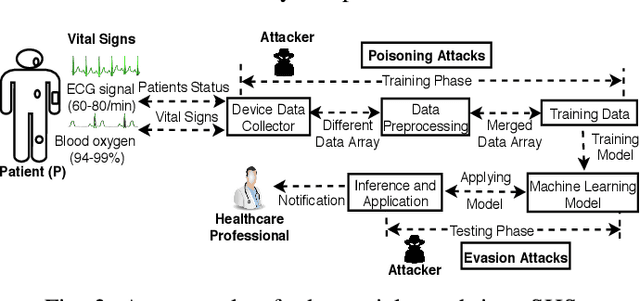

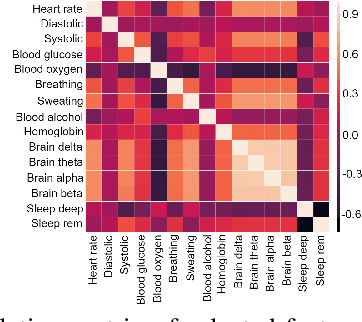

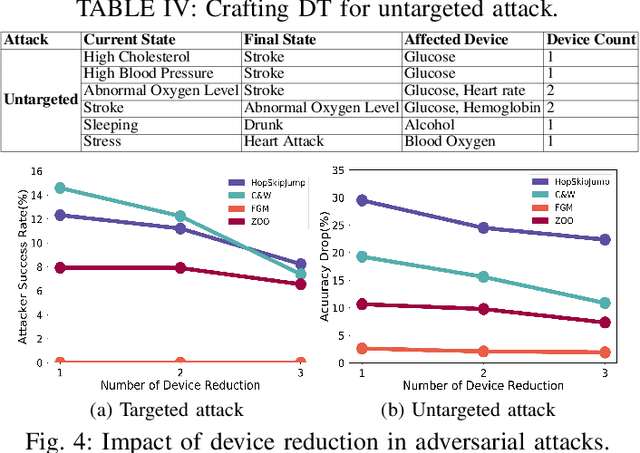

Abstract: The increasing availability of healthcare data requires accurate analysis of disease diagnosis, progression, and real-time monitoring to provide improved treatments to patients. In this context, Machine Learning (ML) models are used to extract valuable features and insights from high-dimensional and heterogeneous healthcare data to detect different diseases and patient activities in a Smart Healthcare System (SHS). However, recent research shows that ML models used in different application domains are vulnerable to adversarial attacks. In this paper, we introduce a new type of adversarial attack that exploits the ML classifiers used in an SHS. We consider an adversary who has partial knowledge of the data distribution, SHS model, and ML algorithm and who can perform both targeted and untargeted attacks. Employing these adversarial capabilities, we manipulate medical device readings to alter the patient status (disease-affected, normal condition, activities, etc.) reported by the SHS. Our attack utilizes five different adversarial ML algorithms (HopSkipJump, Fast Gradient Method, Crafting Decision Tree, Carlini & Wagner, Zeroth Order Optimization) to perform different malicious activities (e.g., data poisoning and output misclassification) on an SHS. Moreover, based on the training- and testing-phase capabilities of an adversary, we perform white-box and black-box attacks on an SHS. We evaluate the performance of our work in different SHS settings and with different medical devices. Our extensive evaluation shows that the proposed adversarial attack can significantly degrade the performance of an ML-based SHS in correctly detecting diseases and normal patient activities, which eventually leads to erroneous treatment.
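To make the idea of manipulating device readings concrete, the sketch below applies a single Fast Gradient Method step to a toy logistic-regression classifier. It is an illustration only and not the paper's implementation: the weights, the three-feature "reading", the step size `eps`, and the helper names `predict` and `fgm_perturb` are all hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(x, w, b):
    """Return 1 if the toy logistic-regression model scores x at >= 0.5, else 0."""
    return int(sigmoid(np.dot(w, x) + b) >= 0.5)

def fgm_perturb(x, y, w, b, eps):
    """One untargeted fast-gradient (sign) step against logistic regression.

    For cross-entropy loss, the gradient with respect to the input is
    (p - y) * w, where p is the predicted probability of class 1.
    """
    p = sigmoid(np.dot(w, x) + b)
    grad_x = (p - y) * w
    # Move each feature by eps in the direction that increases the loss.
    return x + eps * np.sign(grad_x)

# Hypothetical model parameters and a hypothetical normalized device reading.
w = np.array([1.5, -2.0, 0.5])
b = -0.2
x = np.array([0.8, 0.1, 0.4])
y = 1  # true label of x under this toy model

x_adv = fgm_perturb(x, y, w, b, eps=0.5)
print("original prediction:   ", predict(x, w, b))
print("adversarial prediction:", predict(x_adv, w, b))
```

Each feature moves by at most `eps`, yet the predicted class changes. This step needs the model's gradient, so it corresponds to a white-box setting; query-based methods such as HopSkipJump and Zeroth Order Optimization are the ones that operate without gradient access.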

Real-time Analysis of Privacy-aware IoT Applications

Nov 24, 2019

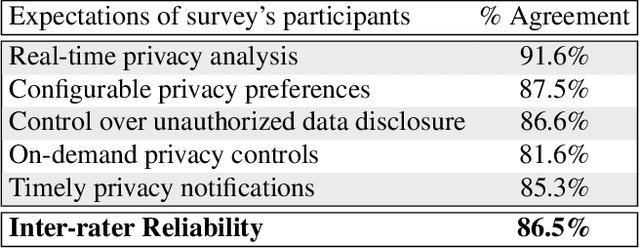

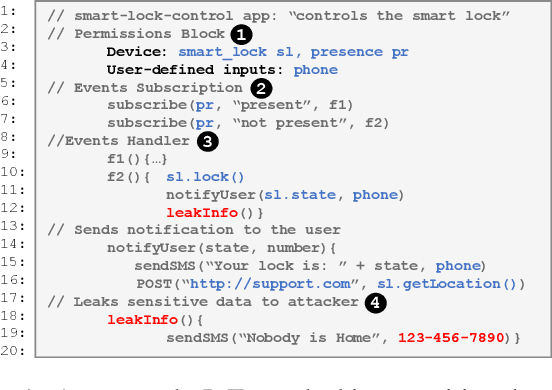

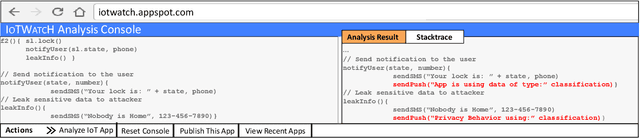

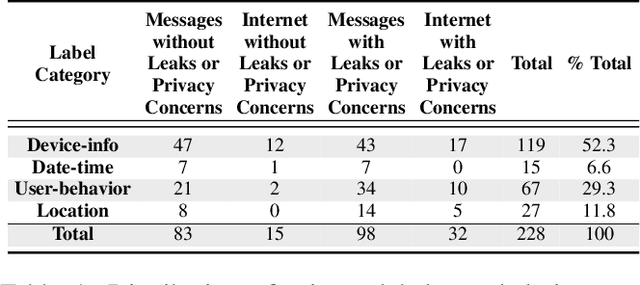

Abstract: Users trust IoT apps to control and automate their smart devices. These apps necessarily have access to sensitive data to implement their functionality. However, users lack visibility into how their sensitive data is used (or leaked), and they often blindly trust the app developers. In this paper, we present IoTWatcH, a novel dynamic analysis tool that uncovers the privacy risks of IoT apps in real time. We designed and built IoTWatcH based on an IoT privacy survey that considers the privacy needs of IoT users. IoTWatcH provides users with a simple interface to specify their privacy preferences for an IoT app. Then, at runtime, it analyzes both the data that is sent out of the IoT app and its recipients using Natural Language Processing (NLP) techniques. Moreover, IoTWatcH informs users of its findings to make them aware of the privacy risks of the IoT app. We implemented IoTWatcH on real IoT applications. Specifically, we analyzed 540 IoT apps to train the NLP model and evaluate its effectiveness. IoTWatcH successfully classifies IoT app data sent to external parties into the correct privacy labels with an average accuracy of 94.25%, and flags IoT apps that leak private data to unauthorized parties. Finally, IoTWatcH adds minimal overhead to an IoT app's execution, on average 105 ms of additional latency.
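The runtime check described in the abstract (label outgoing data, then compare it with the user's preferences for the recipient) can be sketched as follows. This is a rough illustration under stated assumptions, not IoTWatcH itself: a simple keyword lookup stands in for the paper's NLP model, and the label names, keywords, recipients, and the functions `classify` and `check_outgoing` are all hypothetical.

```python
# Hypothetical privacy labels and keywords; a stand-in for a trained NLP model.
LABEL_KEYWORDS = {
    "location": {"home", "away", "arrived", "left"},
    "device_state": {"locked", "unlocked", "open", "closed", "on", "off"},
    "contact": {"phone", "email"},
}

def classify(message):
    """Return the set of privacy labels whose keywords appear in the message."""
    cleaned = message.lower().replace(".", " ").replace(",", " ")
    tokens = set(cleaned.split())
    return {label for label, words in LABEL_KEYWORDS.items() if tokens & words}

def check_outgoing(message, recipient, preferences):
    """Return the labels found in the message that are not allowed for the recipient."""
    allowed = preferences.get(recipient, set())
    return classify(message) - allowed

# Hypothetical user preferences: the owner's SMS channel may receive these labels.
prefs = {"sms:owner": {"device_state", "location"}}

message = "Front door unlocked, nobody home."
print(check_outgoing(message, "sms:owner", prefs))
print(check_outgoing(message, "http://example.com/log", prefs))
```

A recipient with no entry in the preferences is treated as unauthorized for every label, so the second call flags both labels found in the message.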
