Łukasz Lepak

Reinforcement learning for optimization of energy trading strategy

Mar 28, 2023

Abstract: An increasing part of energy is produced from renewable sources by a large number of small producers. The efficiency of these sources is volatile and, to some extent, random, exacerbating the energy market balance problem. In many countries, that balancing is performed on day-ahead (DA) energy markets. In this paper, we consider automated trading on a DA energy market by a medium size prosumer. We model this activity as a Markov Decision Process and formalize a framework in which a ready-to-use strategy can be optimized with real-life data. We synthesize parametric trading strategies and optimize them with an evolutionary algorithm. We also use state-of-the-art reinforcement learning algorithms to optimize a black-box trading strategy fed with available information from the environment that can impact future prices.
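To make the idea of optimizing a parametric trading strategy with an evolutionary algorithm concrete, here is a minimal sketch. It is not the paper's method: the two-threshold strategy, the unit-sized trades, the storage `capacity`, and the simple (1+λ) evolutionary search are all assumptions chosen for illustration, and the names `simulate` and `evolve` are hypothetical.

```python
import random


def simulate(params, prices, capacity=10.0):
    """Profit of a hypothetical two-threshold strategy on a price series.

    Buys one unit when the price is below `buy_below` (if storage allows)
    and sells one unit when the price is above `sell_above` (if anything
    is stored). Energy left in storage at the end is not valued.
    """
    buy_below, sell_above = params
    stored, profit = 0.0, 0.0
    for price in prices:
        if price < buy_below and stored < capacity:
            stored += 1.0
            profit -= price
        elif price > sell_above and stored > 0.0:
            stored -= 1.0
            profit += price
    return profit


def evolve(prices, start=(40.0, 60.0), generations=50, offspring=20,
           sigma=5.0, seed=0):
    """Simple (1+lambda) evolutionary search over the two thresholds.

    Each generation mutates the parent with Gaussian noise and keeps the
    best child only if it is at least as good as the parent (elitism).
    """
    rng = random.Random(seed)
    parent, parent_fit = start, simulate(start, prices)
    for _ in range(generations):
        children = [
            tuple(x + rng.gauss(0.0, sigma) for x in parent)
            for _ in range(offspring)
        ]
        best_child = max(children, key=lambda c: simulate(c, prices))
        child_fit = simulate(best_child, prices)
        if child_fit >= parent_fit:
            parent, parent_fit = best_child, child_fit
    return parent, parent_fit


if __name__ == "__main__":
    # Synthetic prices (an assumption); real use would load market data.
    rng = random.Random(1)
    prices = [50.0 + rng.gauss(0.0, 15.0) for _ in range(500)]
    params, fit = evolve(prices)
    print("thresholds:", params, "profit:", fit)
```

Because the search is elitist, the returned profit is never worse than that of the starting thresholds on the same price series; whether it generalizes to unseen prices is a separate question that a sketch like this does not address.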

Reinforcement Learning for on-line Sequence Transformation

May 28, 2021

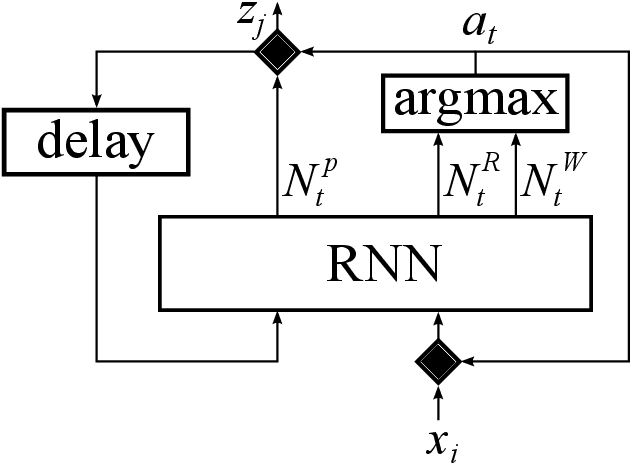

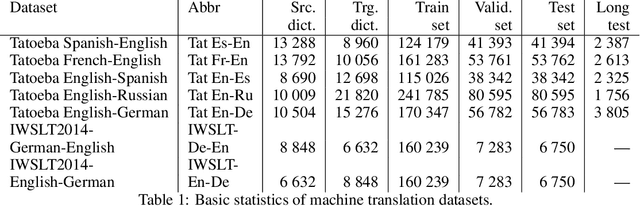

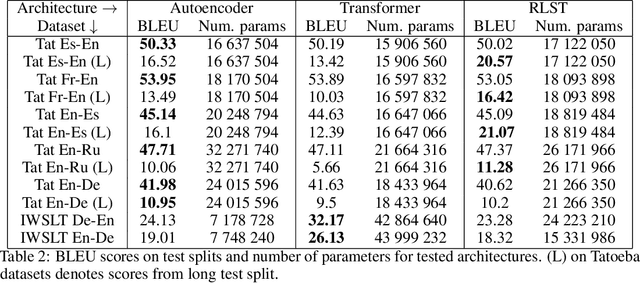

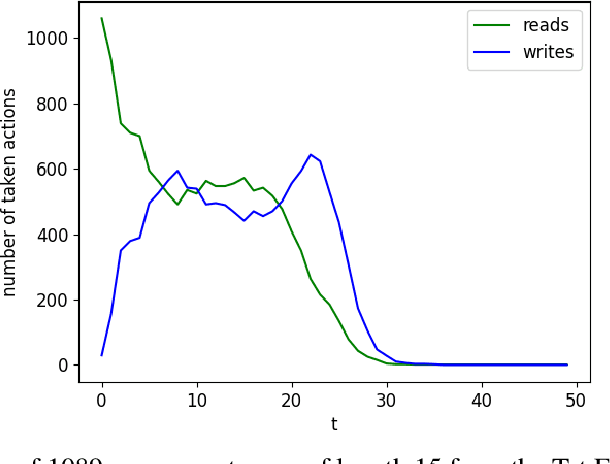

Abstract: A number of problems in the processing of sound and natural language, as well as in other areas, can be reduced to simultaneously reading an input sequence and writing an output sequence of generally different length. There are well-developed methods that produce the output sequence based on the entirely known input. However, efficient methods that enable such transformations on-line do not exist. In this paper we introduce an architecture that learns with reinforcement to make decisions about whether to read a token or write another token. This architecture is able to transform potentially infinite sequences on-line. In an experimental study we compare it with state-of-the-art methods for neural machine translation. While it produces slightly worse translations than Transformer, it outperforms the autoencoder with attention, even though our architecture translates texts on-line, thereby solving a more difficult problem than both reference methods.
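The read-or-write decision loop described in the abstract can be sketched as follows. This is only an illustration of the control flow, under stated assumptions: the paper learns the decision with reinforcement learning, whereas the policy here is a fixed hand-written rule, and the toy task (summing consecutive pairs of numbers) and the names `transform_online`, `pair_policy`, and `write_pair_sum` are hypothetical.

```python
import itertools

READ, WRITE = "read", "write"
_END = object()  # sentinel marking the end of the input stream


def transform_online(source, policy, write):
    """Interleave reading input tokens and writing output tokens.

    `policy(buffer)` returns READ or WRITE; `write(buffer)` must consume
    at least one buffered token and return one output token. Outputs are
    yielded as soon as they are produced, so the input may be infinite.
    """
    buffer = []
    stream = iter(source)
    done = False
    while not done or buffer:
        if not done and policy(buffer) == READ:
            token = next(stream, _END)
            if token is _END:
                done = True  # input exhausted; flush what is buffered
            else:
                buffer.append(token)
        else:
            yield write(buffer)


def pair_policy(buffer):
    """Fixed stand-in for a learned policy: read until two tokens are buffered."""
    return READ if len(buffer) < 2 else WRITE


def write_pair_sum(buffer):
    """Consume up to two buffered tokens and emit their sum."""
    chunk = buffer[:2]
    del buffer[:2]
    return sum(chunk)


if __name__ == "__main__":
    print(list(transform_online([1, 2, 3, 4, 5], pair_policy, write_pair_sum)))
    # Works on an unbounded stream because outputs are produced lazily.
    unbounded = transform_online(itertools.count(1), pair_policy, write_pair_sum)
    print(list(itertools.islice(unbounded, 3)))
```

The output sequence here is shorter than the input, which mirrors the abstract's point that input and output lengths generally differ. Replacing `pair_policy` with a trained decision model is where the learning would enter.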
