Özge Nilay Yalçın

Contextual Emotion Recognition using Large Vision Language Models

May 14, 2024

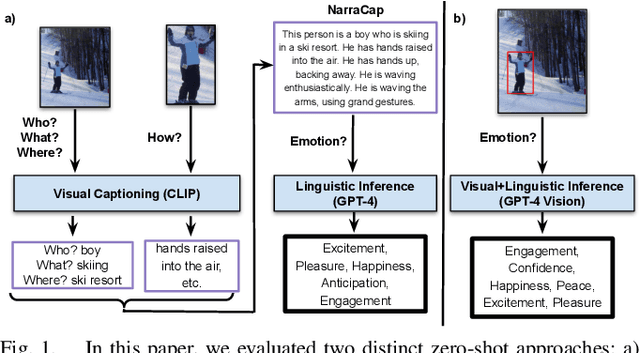

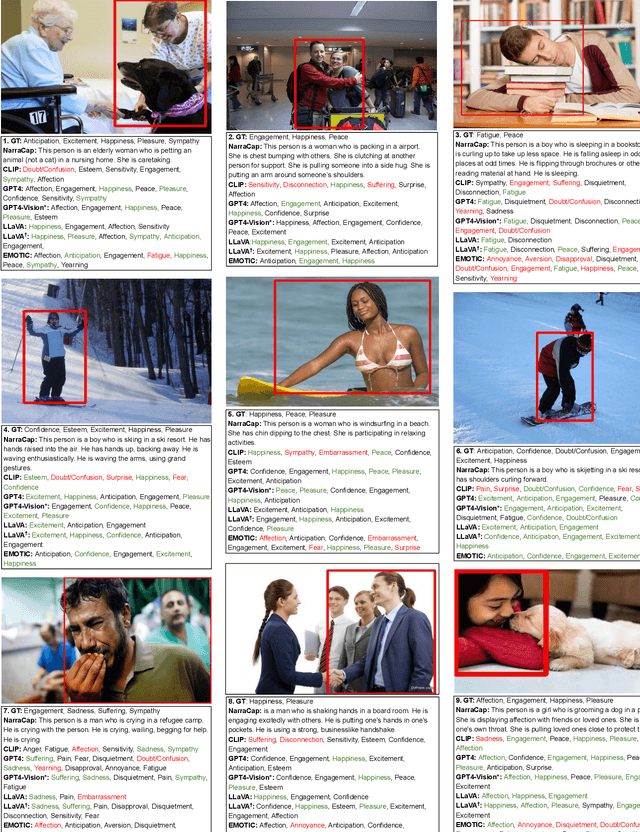

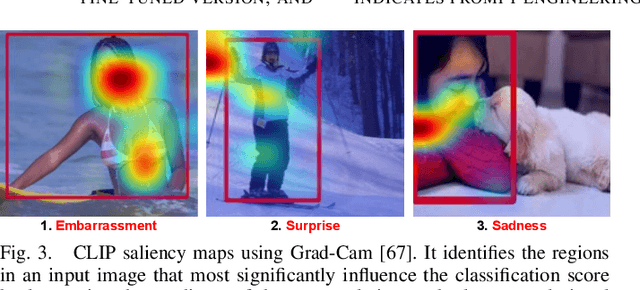

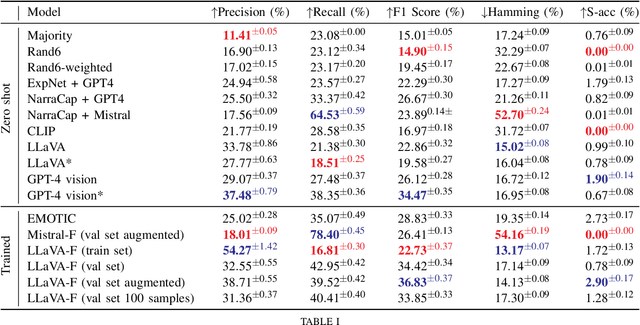

Abstract: "How does the person in the bounding box feel?" Achieving human-level recognition of the apparent emotion of a person in real-world situations remains an unsolved task in computer vision. Facial expressions are not enough: body pose, contextual knowledge, and commonsense reasoning all contribute to how humans perform this emotional theory of mind task. In this paper, we examine two major approaches enabled by recent large vision language models: 1) image captioning followed by a language-only LLM, and 2) vision language models, under zero-shot and fine-tuned setups. We evaluate the methods on the Emotions in Context (EMOTIC) dataset and demonstrate that a vision language model, fine-tuned even on a small dataset, can significantly outperform traditional baselines. The results of this work aim to help robots and agents perform emotionally sensitive decision-making and interaction in the future.

Evaluating Empathy in Artificial Agents

Aug 14, 2019

Abstract: The novel research area of computational empathy is in its infancy and moving towards developing methods and standards. One major problem is the lack of agreement on the evaluation of empathy in artificial interactive systems. Despite the existence of well-established methods from psychology, psychiatry and neuroscience, the translation between these methods and computational empathy is not straightforward. It requires a collective effort to develop metrics that are more suitable for interactive artificial agents. This paper attempts to initiate the dialogue on this important problem. We examine the evaluation methods for empathy in humans and provide suggestions for the development of better metrics to evaluate empathy in artificial agents. We acknowledge the difficulty of arriving at a single solution in a vast variety of interactive systems and propose a set of systematic approaches that can be used with a variety of applications and systems.
