Zero-shot Learning and Knowledge Transfer in Music Classification and Tagging


Jun 20, 2019

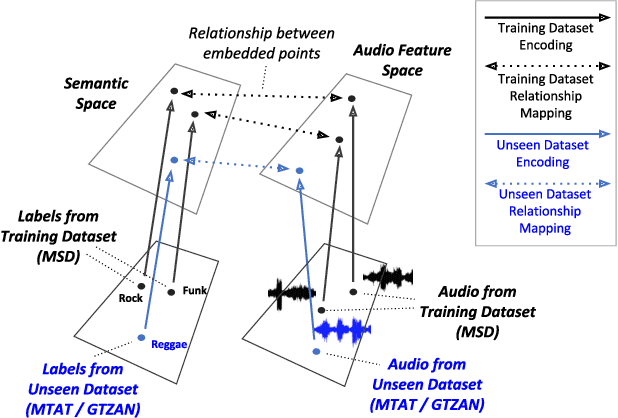

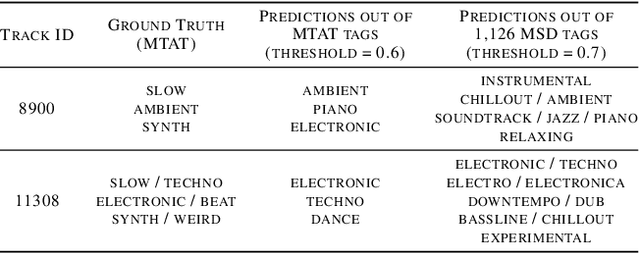

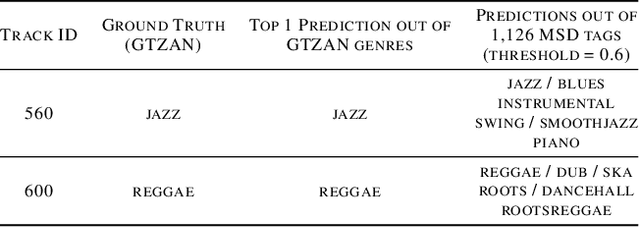

Music classification and tagging are typically performed through categorical supervised learning with a fixed set of labels. By construction, such a model cannot make predictions for labels it did not see during training. Zero-shot learning addresses this problem by using side information about the semantics of the labels. We recently investigated zero-shot learning for music classification and tagging tasks by projecting both the audio space and the label space onto a single semantic space. In this work, we extend that study to verify the generalization ability of the zero-shot learning model by transferring knowledge to different music corpora.
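To make the idea of a shared semantic space concrete, the following is a minimal sketch, not the authors' model. It assumes that audio features and label side information have already been extracted as fixed-length vectors, and it uses random matrices (`W_audio`, `W_label`, both hypothetical names) as stand-ins for projections that would normally be learned on the seen labels. The point it illustrates is that an unseen label can be scored as long as its side-information vector is available.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products become cosine similarities."""
    norm = np.linalg.norm(x, axis=axis, keepdims=True)
    return x / np.maximum(norm, eps)

def score_labels(audio_features, label_side_info, W_audio, W_label):
    """Project audio and labels into one space and return cosine similarities.

    Result has shape (n_clips, n_labels); higher means a closer match.
    """
    audio_emb = l2_normalize(audio_features @ W_audio)
    label_emb = l2_normalize(label_side_info @ W_label)
    return audio_emb @ label_emb.T

# Illustrative dimensions only (assumptions, not values from the paper).
rng = np.random.default_rng(0)
n_clips, audio_dim, side_dim, shared_dim = 4, 16, 8, 5

# Stand-ins for projections that would be trained on seen labels.
W_audio = rng.normal(size=(audio_dim, shared_dim))
W_label = rng.normal(size=(side_dim, shared_dim))

audio_features = rng.normal(size=(n_clips, audio_dim))

# Side information for three labels that were never used in training.
unseen_label_info = rng.normal(size=(3, side_dim))

scores = score_labels(audio_features, unseen_label_info, W_audio, W_label)
predicted = scores.argmax(axis=1)  # best-matching unseen label per clip
```

With trained projections, the same scoring step could be applied unchanged to labels from a different corpus, which is the kind of knowledge transfer the abstract describes.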

* International Conference on Machine Learning (ICML) 2019, Machine Learning for Music Discovery Workshop
