YASENN: Explaining Neural Networks via Partitioning Activation Sequences

Paper and Code

Nov 07, 2018

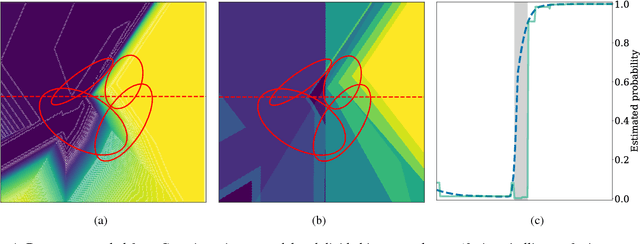

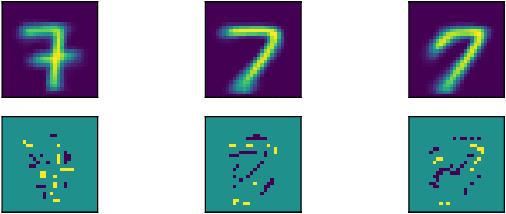

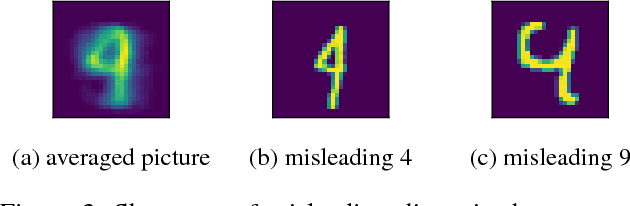

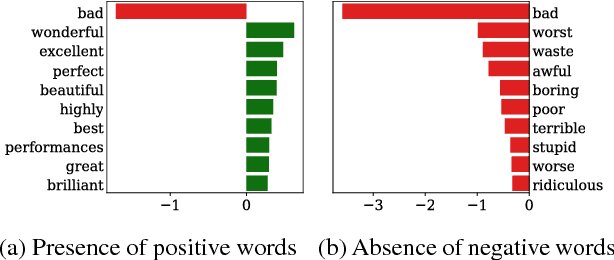

We introduce a novel approach to feed-forward neural network interpretation based on partitioning the space of sequences of neuron activations. In line with this approach, we propose a model-specific interpretation method called YASENN. Our method inherits many advantages of model-agnostic distillation, such as the ability to focus on a particular input region and to express an explanation in terms of features different from those observed by the neural network. Moreover, examining the distillation error makes the method applicable to problems with low tolerance for interpretation mistakes. Technically, YASENN distills the network with an ensemble of layer-wise gradient boosting decision trees and encodes the sequences of neuron activations with leaf indices. The finite number of unique codes induces a partitioning of the input space. Each partition can be described in several ways, for example by examining an interpretable model (e.g. a logistic regression or a decision tree) trained to discriminate between objects of different partitions. Our experiments provide intuition for the method and reveal artifacts in neural network decision making.
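
The pipeline described in the abstract (layer-wise tree ensembles, leaf-index codes, partitions, an interpretable discriminator) can be illustrated with a minimal sketch. This is not the authors' implementation. The toy network with fixed random weights, the choice to fit each layer's ensemble on that layer's activations with the network output as target, and all names (`forward`, `layer_models`, `codes`, `explainer`) are assumptions made for illustration. The sketch uses scikit-learn's `GradientBoostingRegressor.apply`, which returns the leaf index reached in each tree.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)

# Hypothetical stand-in for a trained feed-forward network:
# two ReLU hidden layers with fixed random weights.
W1 = rng.normal(size=(4, 6))
W2 = rng.normal(size=(6, 5))
w_out = rng.normal(size=5)

def forward(X):
    """Return the hidden-layer activations and the scalar network output."""
    h1 = np.maximum(X @ W1, 0.0)
    h2 = np.maximum(h1 @ W2, 0.0)
    return [h1, h2], h2 @ w_out

X = rng.normal(size=(500, 4))
activations, y_net = forward(X)

# Assumption: one small boosted ensemble per layer, fit on that layer's
# activations to reproduce the network output (the abstract does not
# specify the exact training targets).
layer_models = [
    GradientBoostingRegressor(n_estimators=2, max_depth=2, random_state=0).fit(a, y_net)
    for a in activations
]

# Encode each sample's activation sequence by the leaf it reaches in every
# tree of every layer; concatenating across layers gives one code per sample.
codes = np.hstack(
    [m.apply(a) for m, a in zip(layer_models, activations)]
).astype(int)

# Each unique code defines one partition of the input space.
unique_codes, partition_id, counts = np.unique(
    codes, axis=0, return_inverse=True, return_counts=True
)
partition_id = partition_id.ravel()

# Distillation error of the last-layer ensemble against the network output.
distill_mse = float(np.mean((layer_models[-1].predict(activations[-1]) - y_net) ** 2))

# Describe partitions with an interpretable model: a shallow decision tree
# on the raw inputs that separates the two most populated partitions.
top2 = np.argsort(counts)[-2:]
mask = np.isin(partition_id, top2)
explainer = DecisionTreeClassifier(max_depth=2, random_state=0)
explainer.fit(X[mask], partition_id[mask])

print("number of partitions:", len(unique_codes))
print("distillation MSE:", distill_mse)
print("explainer accuracy on the two largest partitions:",
      explainer.score(X[mask], partition_id[mask]))
```

Because each tree has a bounded number of leaves, the number of distinct codes is finite (here at most 4 leaves per tree across 4 trees), which is what makes the partitioning tractable. The shallow tree could be replaced by a logistic regression, and its inputs by features other than those seen by the network.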
