XAI-N: Sensor-based Robot Navigation using Expert Policies and Decision Trees


Apr 22, 2021

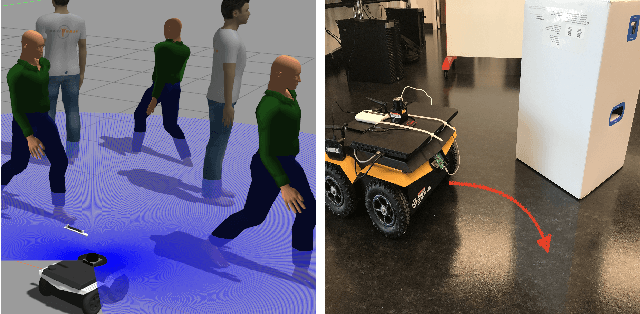

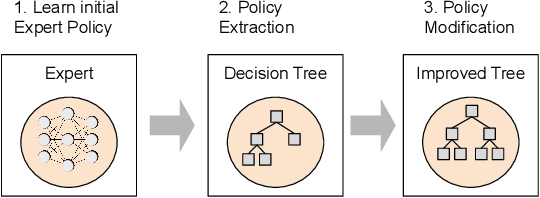

We present a novel sensor-based learning navigation algorithm that computes collision-free trajectories for a robot in dense, dynamic environments with moving obstacles or targets. Our approach uses a deep reinforcement learning-based expert policy trained using a sim2real paradigm. To increase reliability and handle the failure cases of the expert policy, we combine it with a policy extraction technique that transforms the resulting policy into a decision tree. The decision tree has properties that we use to analyze and modify the policy and to improve performance on navigation metrics including smoothness, frequency of oscillation, frequency of immobilization, and obstruction of target. We can modify the policy to address these imperfections without retraining, combining the learning power of deep learning with the control of domain-specific algorithms. We highlight the benefits of our algorithm in simulated environments and in navigating a Clearpath Jackal robot among moving pedestrians.
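
The idea of extracting a decision tree from an expert policy and then editing it without retraining can be illustrated with a small sketch. Everything here is an assumption made for illustration: `expert_policy` is a hand-written stand-in for the trained deep reinforcement learning expert, the two-value observation (front and left clearance in metres), the three discrete actions, and the simple Gini-based tree builder are all hypothetical and are not the paper's extraction technique or sensor model.

```python
import random
from collections import Counter

FORWARD, TURN_LEFT, TURN_RIGHT = 0, 1, 2

def expert_policy(obs):
    """Hypothetical stand-in for the trained expert (not the paper's network)."""
    front, left = obs  # assumed clearances in metres: ahead and to the left
    if front > 1.0:
        return FORWARD
    return TURN_LEFT if left > 0.5 else TURN_RIGHT

def gini(labels):
    """Gini impurity of a list of action labels (0.0 for an empty or pure list)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def build_tree(X, y, depth=0, max_depth=3):
    """Greedy axis-aligned tree stored as nested dicts; leaves hold an action."""
    leaf = {"action": Counter(y).most_common(1)[0][0]}
    if depth == max_depth or len(set(y)) == 1:
        return leaf
    best = None
    for f in range(len(X[0])):
        values = sorted(set(x[f] for x in X))
        # Candidate thresholds are midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [lab for x, lab in zip(X, y) if x[f] <= t]
            right = [lab for x, lab in zip(X, y) if x[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # all observations identical, nothing to split on
        return leaf
    _, f, t = best
    lo_side = [(x, lab) for x, lab in zip(X, y) if x[f] <= t]
    hi_side = [(x, lab) for x, lab in zip(X, y) if x[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([x for x, _ in lo_side], [l for _, l in lo_side],
                           depth + 1, max_depth),
        "right": build_tree([x for x, _ in hi_side], [l for _, l in hi_side],
                            depth + 1, max_depth),
    }

def predict(tree, obs):
    """Walk from the root to a leaf and return that leaf's action."""
    while "action" not in tree:
        branch = "left" if obs[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[branch]
    return tree["action"]

# 1. Query the expert on sampled observations and fit a tree to its decisions.
rng = random.Random(0)
states = [(rng.uniform(0, 2), rng.uniform(0, 2)) for _ in range(300)]
actions = [expert_policy(s) for s in states]
tree = build_tree(states, actions)

# 2. Measure how often the tree agrees with the expert on fresh observations.
test_states = [(rng.uniform(0, 2), rng.uniform(0, 2)) for _ in range(200)]
fidelity = sum(predict(tree, s) == expert_policy(s) for s in test_states) / 200

# 3. Edit the policy directly, with no retraining: require more front
#    clearance before driving forward (1.2 m is an arbitrary illustrative value).
probe = (1.1, 1.0)
before = predict(tree, probe)
if tree.get("feature") == 0:  # only edit if the root tests front clearance
    tree["threshold"] = 1.2
after = predict(tree, probe)
```

The point of the sketch is step 3: because the extracted policy is a readable tree, a single threshold can be inspected and changed by hand, which is not possible with the weights of the original network. A real system would work with high-dimensional sensor input and a more careful extraction procedure than this one-shot imitation.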
