Wireless Ad Hoc Federated Learning: A Fully Distributed Cooperative Machine Learning

May 24, 2022

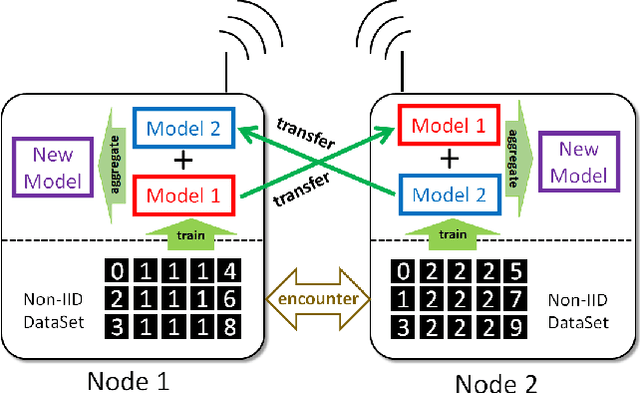

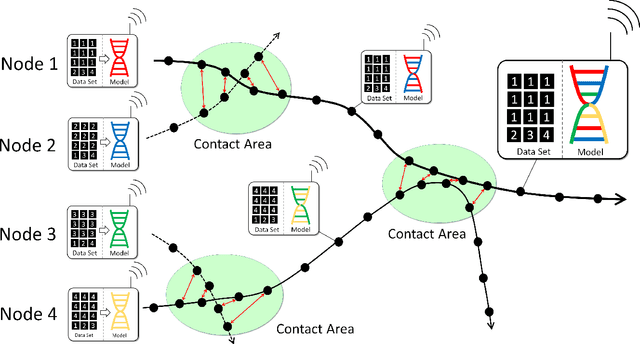

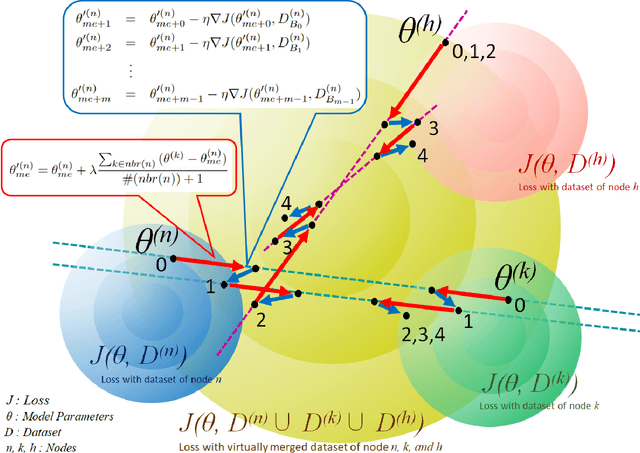

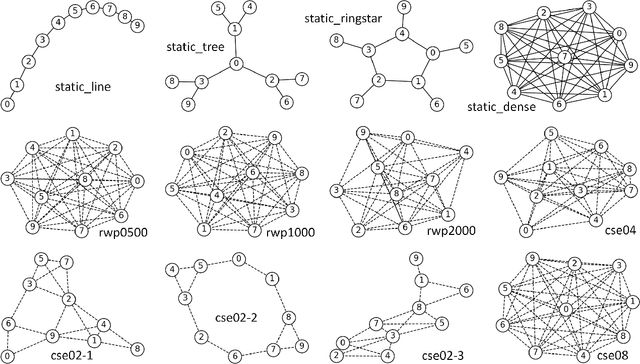

Federated learning allows a global model to be trained by aggregating local models trained on local nodes. However, it still relies on a client-server model, which could be further distributed, fully decentralized, partially connected, or even totally opportunistic. In this paper, we propose wireless ad hoc federated learning (WAFL), a fully distributed cooperative machine learning scheme organized by physically nearby nodes. Each node has a wireless interface and can communicate with other nodes when they are within radio range. The nodes are expected to move with people, vehicles, or robots, producing opportunistic contacts with each other. In WAFL, each node trains a model individually on its own local data. When a node encounters others, they exchange their trained models and generate new aggregated models, which are expected to be more general than models trained locally on Non-IID data. For evaluation, we prepared four static communication networks and two types of dynamic, opportunistic communication networks based on random waypoint mobility and a community-structured environment, and then studied the training process of a fully connected neural network on a 90% Non-IID MNIST dataset. The evaluation results indicate that WAFL allowed the model parameters to converge among the nodes toward generalization, even in opportunistic node contact scenarios, whereas in the self-training (or lonely training) case they diverged. This model generalization in WAFL contributed to higher accuracy on the IID test dataset, 94.7-96.2%, compared with 84.7% in the self-training case.
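
The exchange-and-aggregate step described above can be illustrated with a minimal sketch. The abstract does not give the aggregation rule, so this example assumes the simplest one: each node averages its own parameters with those received from the nodes currently in radio range. The names `aggregate`, `exchange_round`, and `mix`, and the representation of a model as a flat list of floats, are hypothetical choices made for illustration and are not taken from the paper.

```python
from typing import Dict, List

Params = List[float]  # assumption: a model is flattened to a list of floats


def aggregate(own: Params, neighbors: List[Params], mix: float = 1.0) -> Params:
    """Move `own` toward the parameters received from encountered nodes.

    With mix=1.0 this is a plain average over the node and its neighbors.
    The rule is an illustrative assumption, not the paper's stated method.
    """
    if not neighbors:
        return list(own)  # no contact: keep the locally trained model
    k = len(neighbors)
    return [
        w + mix * sum(nb[i] - w for nb in neighbors) / (k + 1)
        for i, w in enumerate(own)
    ]


def exchange_round(
    models: Dict[str, Params],
    contacts: Dict[str, List[str]],
    mix: float = 1.0,
) -> Dict[str, Params]:
    """One encounter round: every node aggregates with its current contacts.

    `contacts` maps a node name to the nodes within its radio range.
    A snapshot is used so all nodes aggregate the pre-exchange models.
    """
    snapshot = {name: list(p) for name, p in models.items()}
    return {
        name: aggregate(
            snapshot[name],
            [snapshot[peer] for peer in contacts.get(name, [])],
            mix,
        )
        for name in snapshot
    }


if __name__ == "__main__":
    models = {"a": [0.0, 2.0], "b": [2.0, 0.0], "c": [10.0, 10.0]}
    contacts = {"a": ["b"], "b": ["a"]}  # node "c" meets nobody this round
    print(exchange_round(models, contacts))
```

In this toy run, nodes `a` and `b` meet and end up with identical averaged parameters, while the isolated node `c` keeps its own model. In a full training loop, each node would presumably continue local training between encounters.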
