WiFi-based Spatiotemporal Human Action Perception


Jun 20, 2022

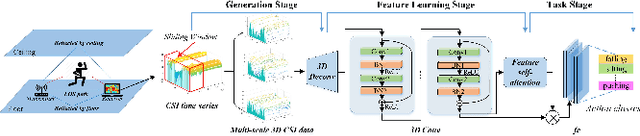

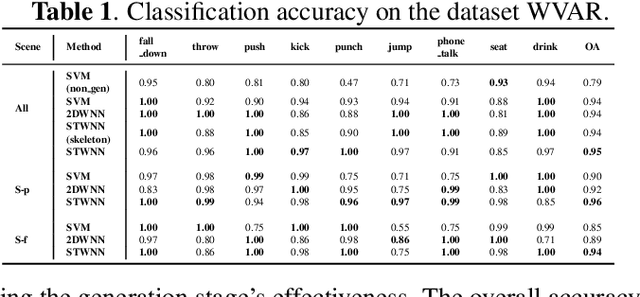

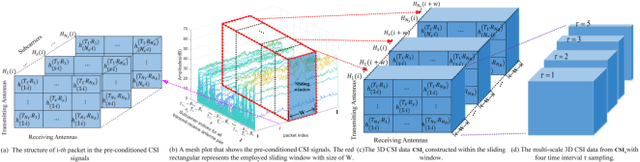

WiFi-based sensing for human activity recognition (HAR) has recently become a hot topic because it offers clear benefits over video-based HAR, such as removing the need for line-of-sight (LOS) and preserving privacy. Making WiFi signals 'see' an action, however, still yields only coarse results, and the technique remains in its infancy. An end-to-end spatiotemporal WiFi signal neural network (STWNN) is proposed to enable WiFi-only sensing in both line-of-sight and non-line-of-sight scenarios. Specifically, the 3D convolution module explores the spatiotemporal continuity of WiFi signals, and the feature self-attention module explicitly maintains dominant features. In addition, a novel 3D representation for WiFi signals is designed to preserve multi-scale spatiotemporal information. Furthermore, a small wireless-vision dataset (WVAR) is synchronously collected to extend the potential of STWNN to 'see' through occlusions. Quantitative and qualitative results on WVAR and three other public benchmark datasets demonstrate the effectiveness of our approach in terms of both accuracy and shift consistency.
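
To make the idea of a 3D convolution over WiFi measurements more concrete, the following is a minimal sketch in plain Python. It is not the STWNN implementation: the tensor layout (time x antenna x subcarrier), the sizes, the averaging kernel, and the function names `conv3d_valid` and `feature_attention` are all assumptions made for illustration. The softmax reweighting only stands in for the feature self-attention module, whose actual design the abstract does not specify.

```python
import math

def conv3d_valid(volume, kernel):
    """Naive 'valid' 3D cross-correlation over nested lists indexed [t][h][w]."""
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for h in range(H - kh + 1):
            row = []
            for w in range(W - kw + 1):
                acc = 0.0
                # The kernel spans time and both signal axes at once,
                # so each output mixes neighbouring samples in all three.
                for a in range(kt):
                    for b in range(kh):
                        for c in range(kw):
                            acc += volume[t + a][h + b][w + c] * kernel[a][b][c]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out

def feature_attention(features):
    """Reweight a feature vector by softmax scores of its own values (illustrative)."""
    m = max(features)  # subtract the max for numerical stability
    exps = [math.exp(f - m) for f in features]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [w * f for w, f in zip(weights, features)], weights

# Toy volume: 4 time steps x 3 antennas x 5 subcarriers (assumed sizes).
volume = [[[float(t + h + w) for w in range(5)] for h in range(3)] for t in range(4)]
# 2x2x2 averaging kernel (assumed; a trained network would learn its kernels).
kernel = [[[1.0 / 8.0] * 2 for _ in range(2)] for _ in range(2)]

response = conv3d_valid(volume, kernel)
# Average each output plane to get one feature per remaining time step.
features = [
    sum(sum(row) for row in plane) / (len(plane) * len(plane[0]))
    for plane in response
]
attended, weights = feature_attention(features)
```

Here the kernel slides along time as well as across antennas and subcarriers, which is what lets a 3D convolution pick up patterns that are continuous in both space and time. The attention step then emphasizes the strongest of the resulting features.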
