Why Neural Machine Translation Prefers Empty Outputs

Paper and Code

Dec 24, 2020

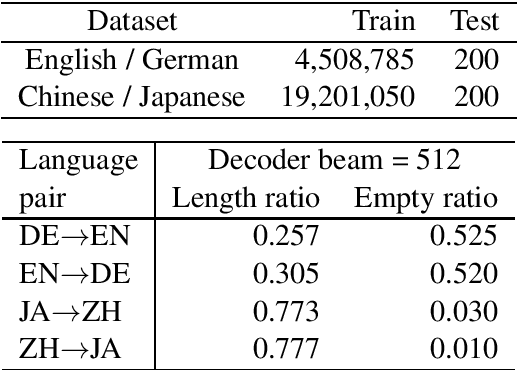

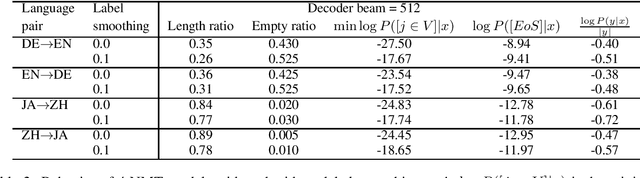

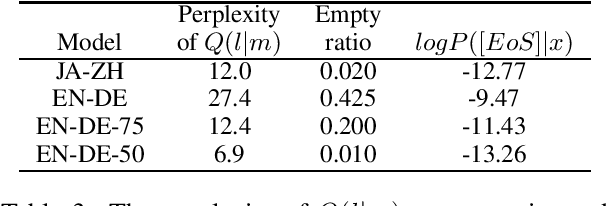

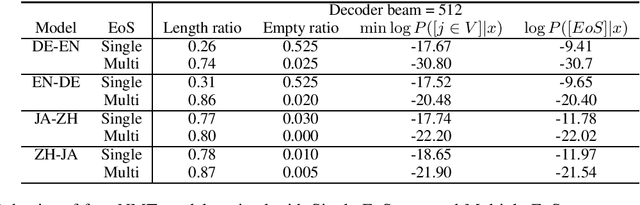

We investigate why neural machine translation (NMT) systems assign high probability to empty translations. We find two explanations. First, label smoothing makes correct-length translations less confident, making it easier for the empty translation to finally outscore them. Second, NMT systems use the same, high-frequency EoS word to end all target sentences, regardless of length. This creates an implicit smoothing that increases zero-length translations. Using different EoS types in target sentences of different lengths exposes and eliminates this implicit smoothing.

* 6 pages

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge