When, where, and how to add new neurons to ANNs

Paper and Code

Feb 17, 2022

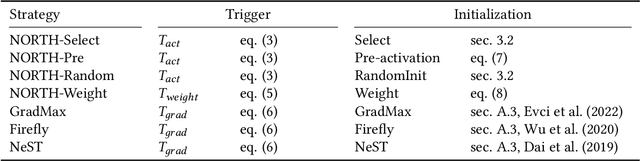

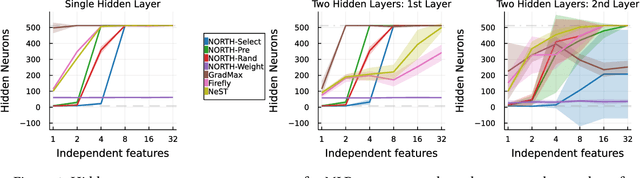

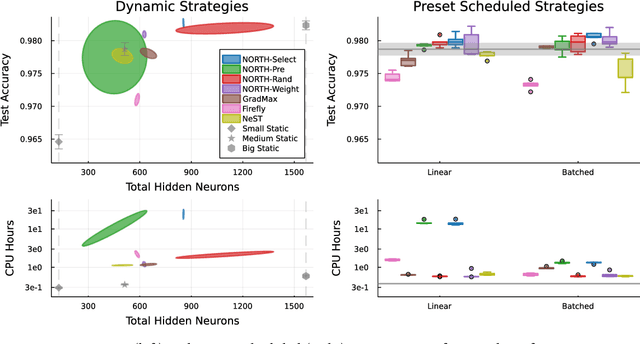

Neurogenesis in ANNs is an understudied and difficult problem, even compared to other forms of structural learning like pruning. By decomposing it into triggers and initializations, we introduce a framework for studying the various facets of neurogenesis: when, where, and how to add neurons during the learning process. We present the Neural Orthogonality (NORTH*) suite of neurogenesis strategies, combining layer-wise triggers and initializations based on the orthogonality of activations or weights to dynamically grow performant networks that converge to an efficient size. We evaluate our contributions against other recent neurogenesis works with MLPs.
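To make the trigger/initialization split more concrete, here is a minimal NumPy sketch of the general idea, not the NORTH* method itself. The abstract does not give the actual criteria, so everything in it is an assumption for illustration: the function names, the `tol` and `threshold` values, the use of a singular-value count as the orthogonality measure, and the projection-based initialization.

```python
import numpy as np


def effective_rank(activations, tol=1e-2):
    """Count singular values above tol times the largest one.

    activations: array of shape (n_samples, n_neurons).
    The relative cutoff `tol` is an assumed value, not taken from the paper.
    """
    s = np.linalg.svd(activations, compute_uv=False)
    if s[0] == 0:
        return 0
    return int(np.sum(s > tol * s[0]))


def should_grow(activations, threshold=0.9):
    """Hypothetical trigger ("when"): grow a layer if its neurons are
    nearly all linearly independent, i.e. the layer has little redundancy."""
    n_neurons = activations.shape[1]
    return effective_rank(activations) / n_neurons >= threshold


def orthogonal_new_weights(weights, rng):
    """Hypothetical initialization ("how"): a unit vector orthogonal to
    every existing neuron's incoming weights.

    weights: array of shape (n_neurons, n_inputs).
    Returns None when no orthogonal direction is left.
    """
    v = rng.standard_normal(weights.shape[1])
    # Columns of q form an orthonormal set whose span contains the
    # row space of `weights`.
    q, _ = np.linalg.qr(weights.T)
    v = v - q @ (q.T @ v)  # remove the component inside that span
    norm = np.linalg.norm(v)
    if norm < 1e-8:
        return None
    return v / norm


rng = np.random.default_rng(0)
layer_weights = rng.standard_normal((3, 5))   # 3 neurons, 5 inputs
layer_acts = rng.standard_normal((100, 3))    # stand-in activations

if should_grow(layer_acts):
    new_row = orthogonal_new_weights(layer_weights, rng)
    if new_row is not None:
        layer_weights = np.vstack([layer_weights, new_row])
```

In this sketch the "where" question would be answered by evaluating `should_grow` separately on each layer's activations and growing only the layers that pass.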

View paper on OpenReview