When and Why Test-Time Augmentation Works

Paper and Code

Nov 23, 2020

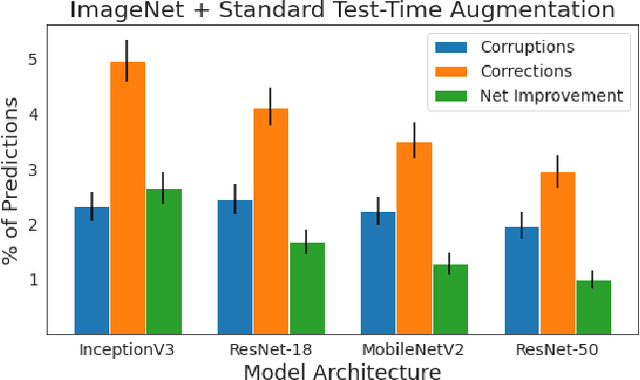

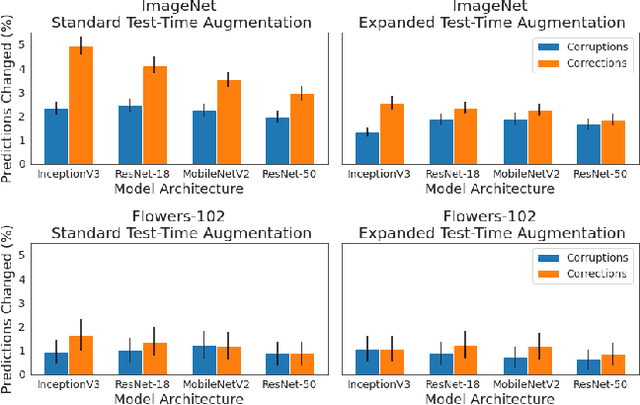

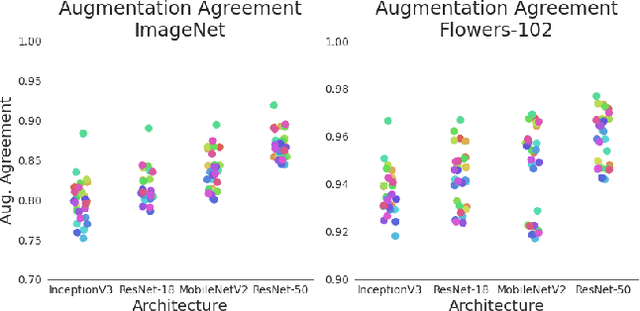

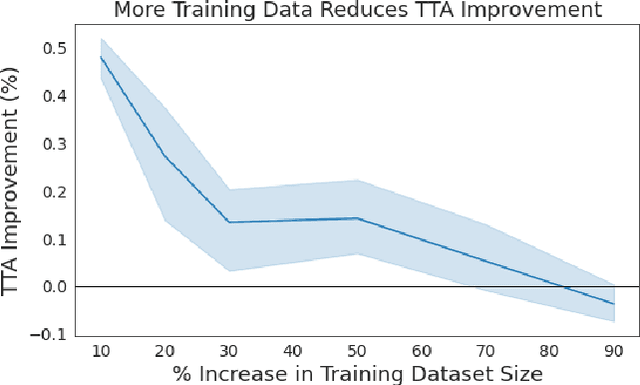

Test-time augmentation (TTA), the aggregation of predictions across transformed versions of a test input, is a common practice in image classification. In this paper, we present theoretical and experimental analyses that shed light on 1) when test-time augmentation is likely to be helpful and 2) when to use various test-time augmentation policies. A key finding is that even when TTA produces a net improvement in accuracy, it can change many correct predictions into incorrect predictions. We delve into when and why test-time augmentation changes a prediction from being correct to incorrect and vice versa. Our analysis suggests that the nature and amount of training data, the model architecture, and the augmentation policy all matter. Building on these insights, we present a learning-based method for aggregating test-time augmentations. Experiments across a diverse set of models, datasets, and augmentations show that our method delivers consistent improvements over existing approaches.
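To make the definition of TTA concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: the names `tta_predict`, `toy_model`, `identity`, and `flip` are hypothetical, the "image" is a two-element list, and the weights in the last call are set by hand as a stand-in for weights that a learning-based aggregator might produce. The toy input is chosen so that uniform averaging changes the single-view prediction, which illustrates how TTA can flip an individual prediction.

```python
from typing import Callable, List, Optional, Sequence

Vector = List[float]
Model = Callable[[Vector], Vector]         # input -> class probabilities
Augmentation = Callable[[Vector], Vector]  # input -> transformed input


def tta_predict(
    model: Model,
    x: Vector,
    augmentations: Sequence[Augmentation],
    weights: Optional[Sequence[float]] = None,
) -> Vector:
    """Aggregate class probabilities over augmented copies of x.

    With weights=None this is a plain average over augmentations.
    """
    if not augmentations:
        raise ValueError("need at least one augmentation")
    if weights is None:
        weights = [1.0 / len(augmentations)] * len(augmentations)
    if len(weights) != len(augmentations):
        raise ValueError("one weight per augmentation is required")
    preds = [model(aug(x)) for aug in augmentations]
    num_classes = len(preds[0])
    return [
        sum(w * p[c] for w, p in zip(weights, preds))
        for c in range(num_classes)
    ]


def argmax(v: Sequence[float]) -> int:
    """Index of the largest entry."""
    return max(range(len(v)), key=lambda i: v[i])


def toy_model(x: Vector) -> Vector:
    """Hypothetical two-class model that only looks at the first element,
    so it is deliberately sensitive to flipping the input."""
    p1 = min(1.0, max(0.0, x[0]))
    return [1.0 - p1, p1]


def identity(x: Vector) -> Vector:
    return list(x)


def flip(x: Vector) -> Vector:
    return list(reversed(x))


x = [0.6, 0.1]
single = toy_model(x)                                    # favors class 1
averaged = tta_predict(toy_model, x, [identity, flip])   # favors class 0
# Hand-set weights (an assumption, not learned here) that trust the
# original view more than the flipped one.
weighted = tta_predict(toy_model, x, [identity, flip], weights=[0.9, 0.1])

print(argmax(single), argmax(averaged), argmax(weighted))
```

If the true label of `x` were class 1, uniform averaging would turn a correct prediction into an incorrect one, while the weighted aggregation would keep it. Whether weighting helps in practice depends on the model, data, and augmentation policy, as the abstract notes.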
