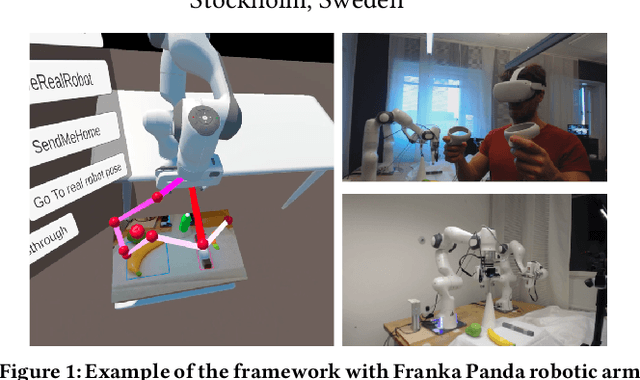

What you see is what you get: A VR Framework for Correcting Robot Errors


Jan 17, 2023

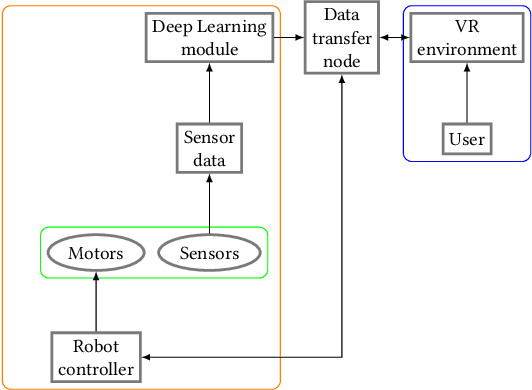

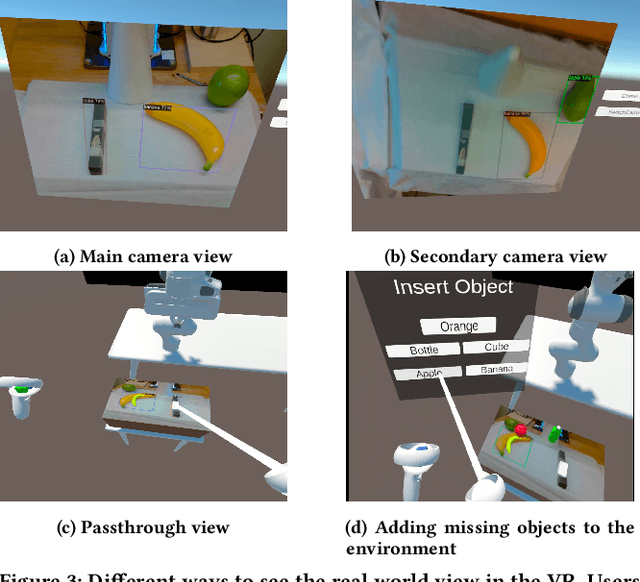

Many solutions tailored for intuitive visualization or teleoperation in virtual, augmented, and mixed (VAM) reality systems are not robust to robot failures, such as failing to detect and recognize objects in the environment or planning unsafe trajectories. In this paper, we present a novel virtual reality (VR) framework in which users can (i) recognize when the robot has failed to detect a real-world object, (ii) correct the error in VR, (iii) modify proposed object trajectories, and (iv) implement behaviors on a real-world robot. Finally, we propose a user study aimed at testing the efficacy of our framework. Project materials can be found in the OSF repository.
