What My Motion tells me about Your Pose: Self-Supervised Fine-Tuning of Observed Vehicle Orientation Angle

Jul 29, 2020

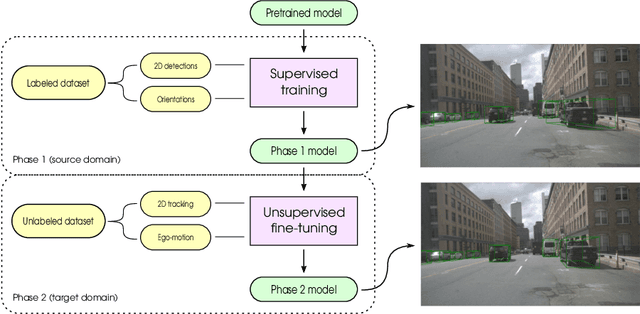

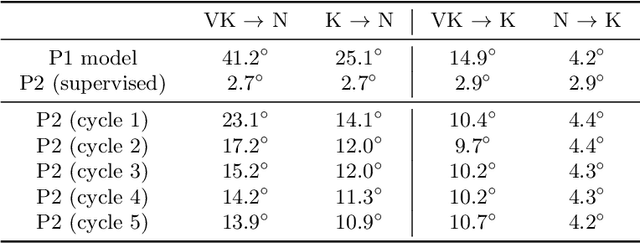

Determining the relative 6 Degrees of Freedom (DoF) pose of vehicles around the ego-vehicle from monocular cameras is an important part of the perception problem for Autonomous Vehicles (AVs) and Driver Assist Technology (DAT). Current deep learning techniques for this problem are data hungry, which drives the need for unsupervised or self-supervised methods. In this paper, we consider the domain adaptation task of fine-tuning a vehicle orientation estimator on a new domain without labels. By leveraging the ego-motion consistencies obtained from a monocular SLAM method, we show that our self-supervised fine-tuning scheme consistently improves the accuracy of the resulting network. More specifically, when transitioning from Virtual KITTI to nuScenes, up to 70% of the performance of a supervised method (taken as 100%) is recovered. Our self-supervised method hence allows us to safely transfer vehicle orientation estimators to new domains without requiring expensive new labels.
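To make the idea of an ego-motion consistency signal concrete, here is a minimal sketch. It is not the paper's loss. It assumes a stationary observed vehicle, planar motion, and a sign convention in which an ego yaw change of `ego_yaw_delta` reduces the observed yaw by the same amount. The function names `wrap_angle`, `consistency_residual`, and `consistency_loss` are hypothetical and chosen only for illustration.

```python
import math


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def consistency_residual(yaw_t0: float, yaw_t1: float, ego_yaw_delta: float) -> float:
    """Disagreement between two orientation estimates and the ego-motion.

    Assumption (illustrative, not from the paper): the observed vehicle is
    stationary, so its yaw in the camera frame should change by exactly
    minus the ego yaw change between the two frames.
    """
    predicted_yaw_t1 = yaw_t0 - ego_yaw_delta
    return wrap_angle(yaw_t1 - predicted_yaw_t1)


def consistency_loss(yaw_t0: float, yaw_t1: float, ego_yaw_delta: float) -> float:
    """Smooth, periodic penalty: zero when estimates agree with ego-motion."""
    return 1.0 - math.cos(consistency_residual(yaw_t0, yaw_t1, ego_yaw_delta))
```

Under these assumptions no orientation labels are needed: the only inputs are two network outputs (`yaw_t0`, `yaw_t1`) and an ego yaw change, which a monocular SLAM system could supply. The cosine form is one common choice for angular penalties because it avoids a discontinuity at the wrap-around point.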
