What Does DALL-E 2 Know About Radiology?

Sep 27, 2022

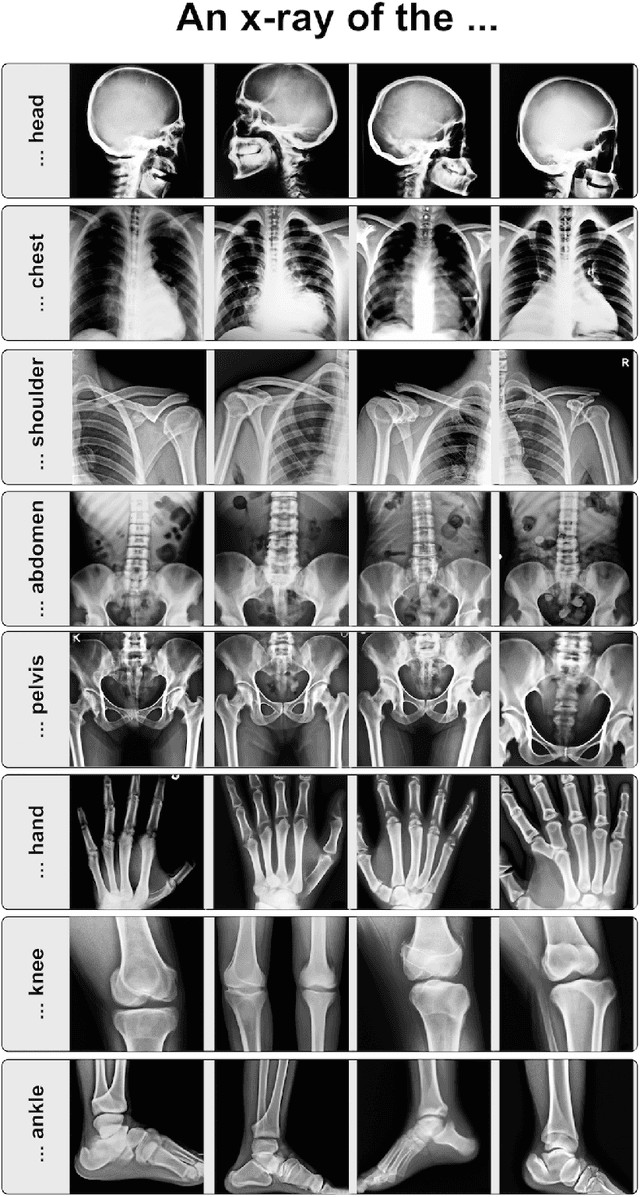

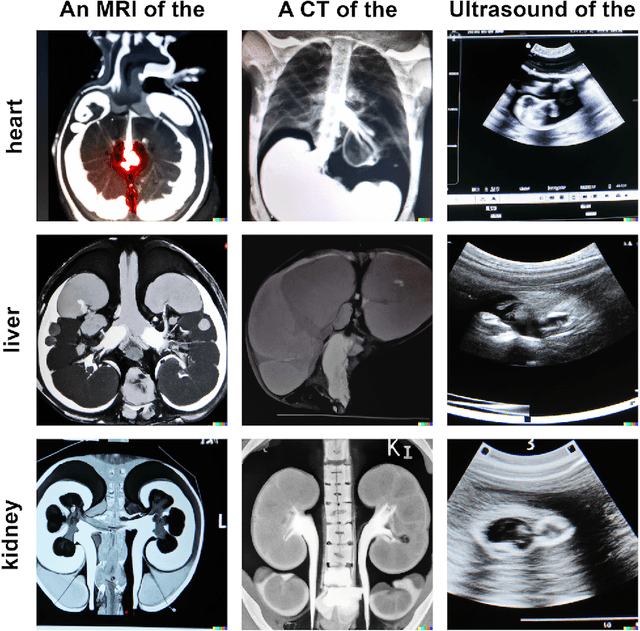

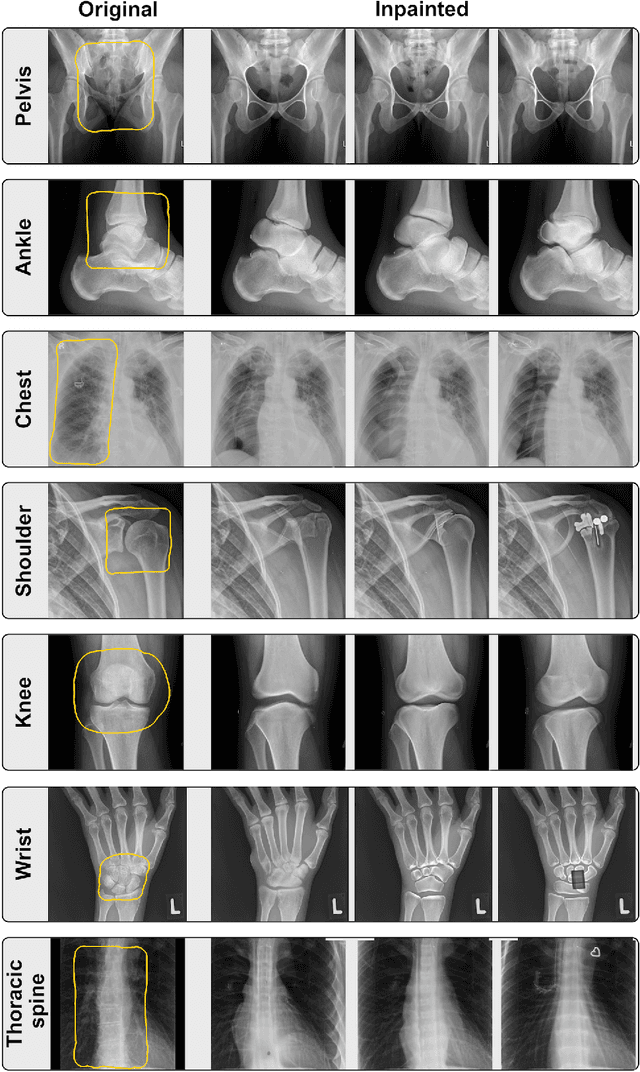

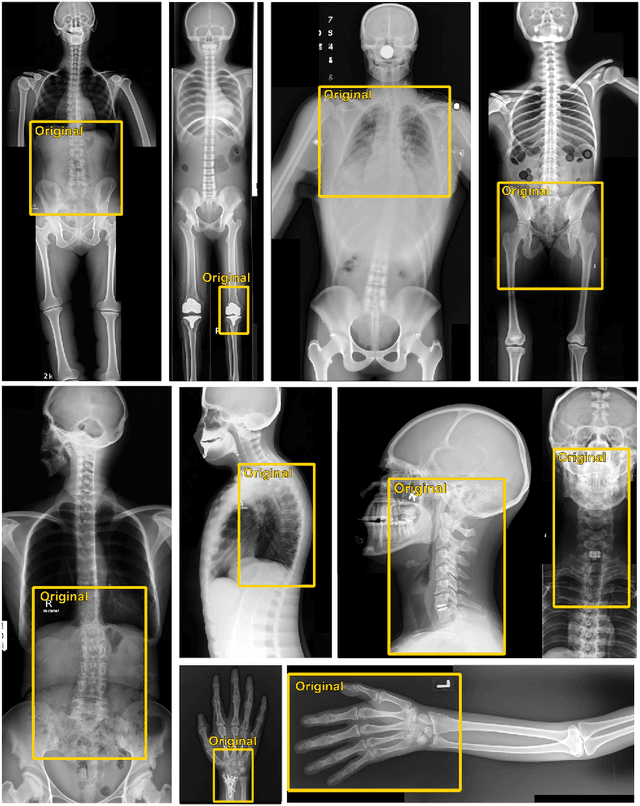

Generative models such as DALL-E 2 could represent a promising future tool for image generation, augmentation, and manipulation in artificial intelligence research in radiology, provided that these models have sufficient medical domain knowledge. Here we show that DALL-E 2 has learned relevant representations of X-ray images, with promising capabilities in zero-shot text-to-image generation of new images, continuation of an image beyond its original boundaries, and removal of elements, while its generation of pathology and of CT, MRI, and ultrasound images is still limited. The use of generative models for augmenting and generating radiological data thus seems feasible, even if further fine-tuning and adaptation of these models to the respective domain is required beforehand.
