VirtualConductor: Music-driven Conducting Video Generation System


Jul 28, 2021

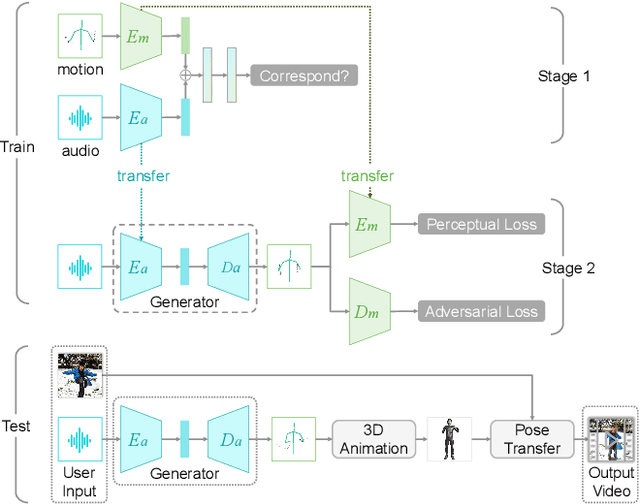

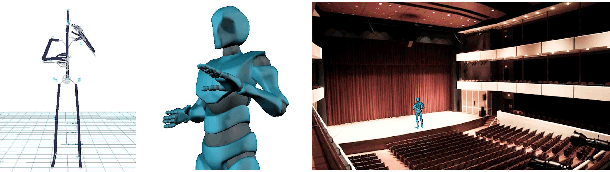

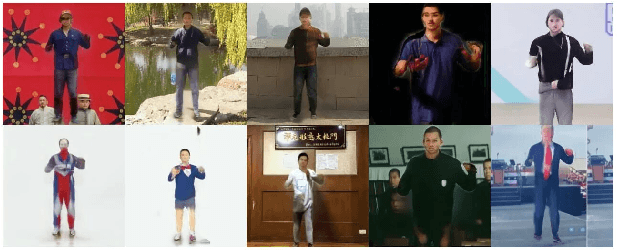

In this demo, we present VirtualConductor, a system that generates a conducting video from any given piece of music and a single image of the user. First, we collect and construct a large-scale conductor motion dataset. Then, we propose the Audio Motion Correspondence Network (AMCNet) and adversarial-perceptual learning to learn the cross-modal relationship and generate diverse, plausible, music-synchronized motion. Finally, we combine 3D animation rendering with a pose transfer model to synthesize the conducting video from the user's single image. As a result, any user can become a virtual conductor through the system.

* Accepted by the IEEE International Conference on Multimedia and Expo (ICME) 2021, demo track. Best demo
