Video Imitation GAN: Learning control policies by imitating raw videos using generative adversarial reward estimation


Oct 02, 2018

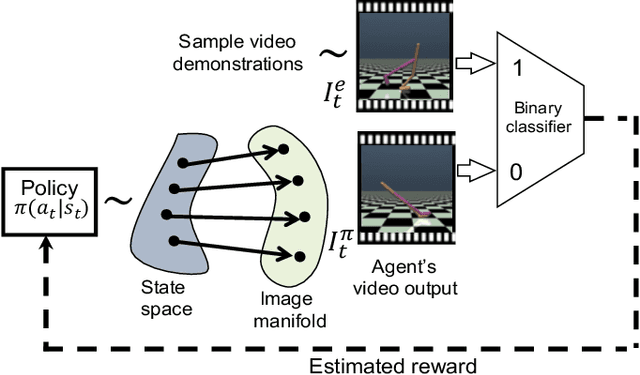

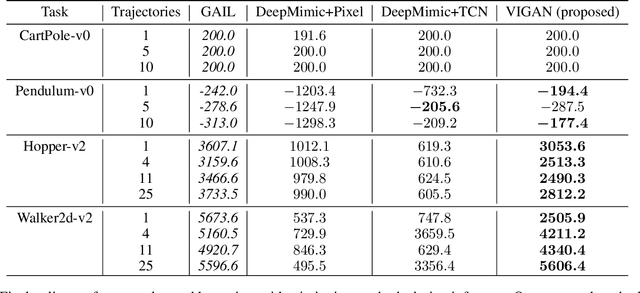

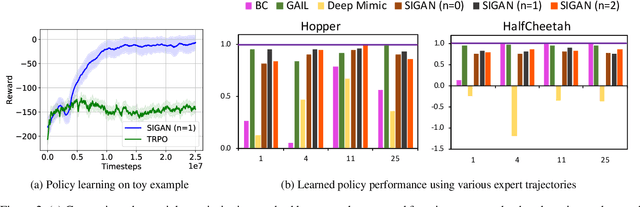

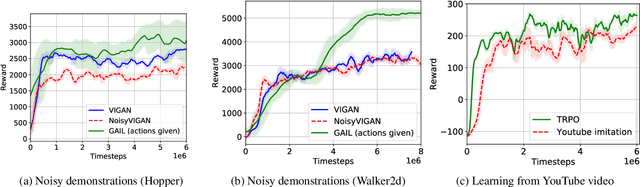

Humans typically imitate by watching another person's visual demonstrations and continuously refining their own skills until their performance visually resembles the expert's. In this paper, we are interested in imitation learning for artificial agents in this natural setting: acquiring motor skills by watching raw video demonstrations. Traditional methods for learning from videos first extract meaningful low-dimensional features from the videos, then apply a separate hand-crafted reward estimation step based on the feature separation between the agent and the expert. We propose a framework for imitation learning from raw video demonstrations that reduces the dependence on hand-engineered reward functions by jointly learning the feature extraction and separation estimation steps with generative adversarial networks. Additionally, we establish the equivalence, under certain conditions, between adversarial imitation from image manifolds and low-level state distribution matching. Experimental results show that our method, using only raw videos, performs similarly to state-of-the-art imitation learning techniques that have access to low-level state and action information, while outperforming existing video imitation methods. Furthermore, we show that our method can learn action policies by imitating video demonstrations available on YouTube, with performance comparable to that of agents trained with the true reward signal. Please see the video at https://youtu.be/bvNpV2Q4rOA.
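To make the idea of adversarial reward estimation concrete, the following is a minimal sketch and not the paper's implementation. It assumes a linear logistic discriminator over flattened frame vectors (the abstract describes a network operating on raw video), synthetic Gaussian "frames" in place of real video, and a GAIL-style surrogate reward of the form -log(1 - D(x)). All names (`train_discriminator`, `reward`, `expert_frames`, `agent_frames`) are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_discriminator(expert, agent, steps=200, lr=0.5):
    """Fit D(x) = sigmoid(w.x + b); expert frames are labeled 1, agent frames 0."""
    x = np.vstack([expert, agent])
    y = np.concatenate([np.ones(len(expert)), np.zeros(len(agent))])
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(steps):
        p = sigmoid(x @ w + b)
        grad = p - y  # gradient of binary cross-entropy w.r.t. the logits
        w -= lr * (x.T @ grad) / len(y)
        b -= lr * grad.mean()
    return w, b

def reward(frames, w, b, eps=1e-8):
    """Surrogate reward: larger when the discriminator takes a frame for an expert one."""
    d = sigmoid(frames @ w + b)
    return -np.log(1.0 - d + eps)

# Assumption: synthetic stand-ins for flattened expert and agent video frames.
rng = np.random.default_rng(0)
expert_frames = rng.normal(0.5, 0.1, size=(64, 16))
agent_frames = rng.normal(-0.5, 0.1, size=(64, 16))

w, b = train_discriminator(expert_frames, agent_frames)
expert_reward = reward(expert_frames, w, b).mean()
agent_reward = reward(agent_frames, w, b).mean()
print(expert_reward > agent_reward)
```

In a full pipeline this reward would drive a reinforcement learning update of the agent's policy, and the discriminator would be retrained as the agent's frames change. Both steps are omitted here.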
