VH-HFCN based Parking Slot and Lane Markings Segmentation on Panoramic Surround View


May 07, 2018

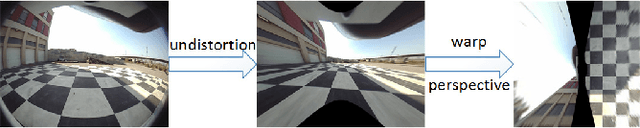

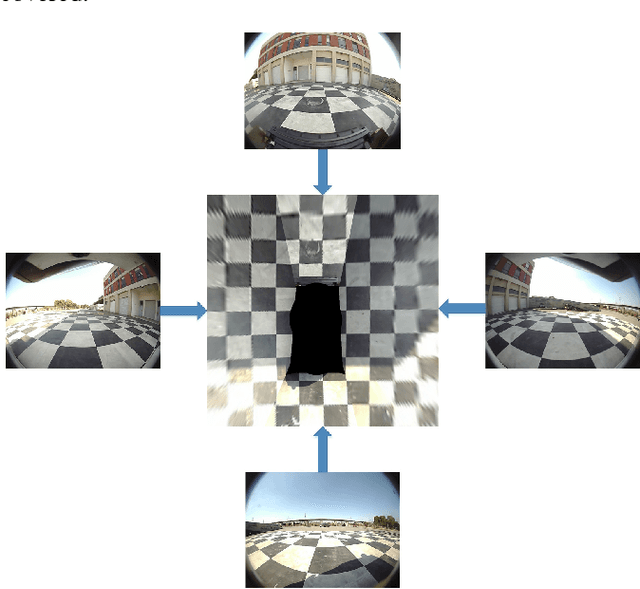

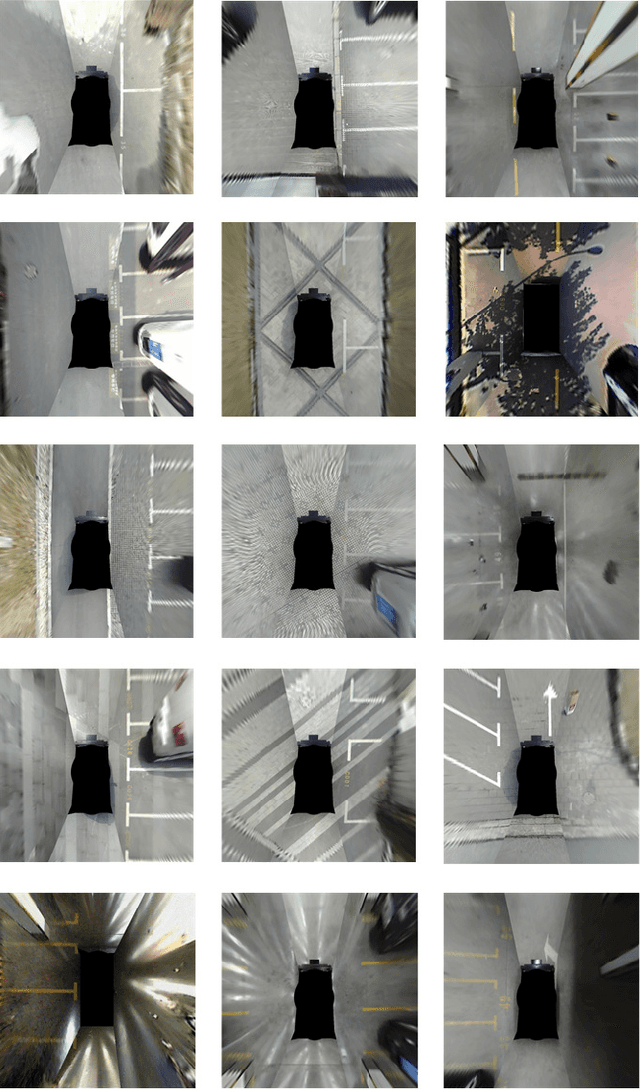

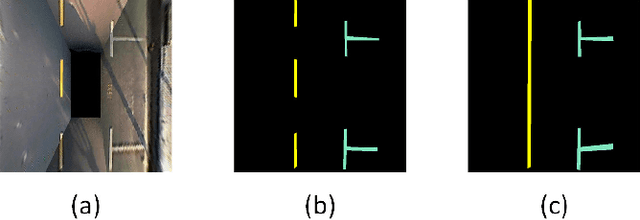

Automatic parking is being intensively developed by car manufacturers and providers. Two problems remain. First, there are no openly available segmentation labels for parking slots on a panoramic surround view (PSV) dataset. Second, it is unclear how to detect parking slots and road structure robustly. In this paper, we therefore build a public PSV dataset and propose a highly fused convolutional network (HFCN) based segmentation method for parking slots and lane markings on that dataset. A surround-view image is composed of four calibrated images captured by four fisheye cameras. We collect and label more than 4,200 surround-view images for this task, covering different types of parking slots under various illumination conditions. We propose a VH-HFCN network, which uses an HFCN as its base and adds an efficient VH-stage to better segment the various markings. The VH-stage consists of two independent linear convolution paths, one with vertical and one with horizontal convolution kernels. This modification enables the network to extract linear features robustly and precisely. We evaluate our model on the PSV dataset, and the results show outstanding performance in ground-marking segmentation. Based on the segmented markings, parking slots and lanes are obtained by skeletonization, Hough line transform, and line arrangement.
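
The intuition behind pairing a vertical and a horizontal convolution path can be illustrated with a toy example. The sketch below is not the VH-HFCN implementation: it assumes fixed, unlearned 3-tap summing kernels and a 5x5 binary grid, and the names `conv_1d_along_axis` and `vh_stage` are hypothetical helpers introduced only for illustration. In the actual network the kernels would be learned and applied to feature maps.

```python
def conv_1d_along_axis(image, kernel, axis):
    """Correlate a 2D grid with a 1D kernel along one axis.

    Uses zero padding so the output has the same size as the input.
    `axis` is "vertical" (kernel spans rows) or "horizontal" (kernel spans columns).
    """
    rows, cols = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0.0
            for i, weight in enumerate(kernel):
                offset = i - half
                if axis == "vertical":
                    rr, cc = r + offset, c
                else:
                    rr, cc = r, c + offset
                # Positions outside the grid contribute zero (zero padding).
                if 0 <= rr < rows and 0 <= cc < cols:
                    total += weight * image[rr][cc]
            out[r][c] = total
    return out


def vh_stage(image, kernel):
    """Run two independent paths: one vertical kernel, one horizontal kernel.

    Assumption for illustration: both paths share the same fixed kernel.
    """
    vertical = conv_1d_along_axis(image, kernel, "vertical")
    horizontal = conv_1d_along_axis(image, kernel, "horizontal")
    return vertical, horizontal


# Toy input: a vertical marking in column 2 of a 5x5 grid.
image = [[0, 0, 1, 0, 0] for _ in range(5)]
kernel = [1, 1, 1]  # unnormalized 3-tap sum, chosen to keep the arithmetic exact

vertical, horizontal = vh_stage(image, kernel)

for name, response in (("vertical", vertical), ("horizontal", horizontal)):
    print(name)
    for row in response:
        print(row)
```

On this input the vertical path accumulates evidence along the marking and stays at zero elsewhere, while the horizontal path spreads a weak response across the neighbouring columns. A horizontal marking would produce the opposite pattern, which is one way to read why the two paths are kept independent.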
