Vessel-following model for inland waterways based on deep reinforcement learning

Jul 07, 2022

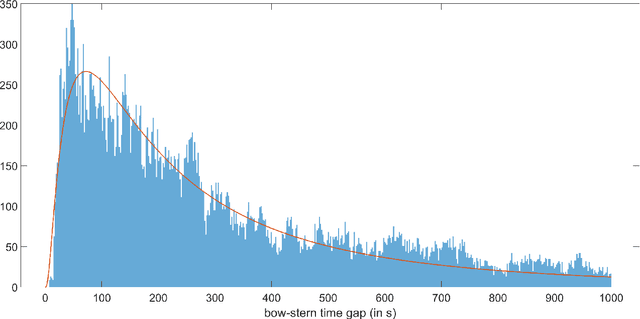

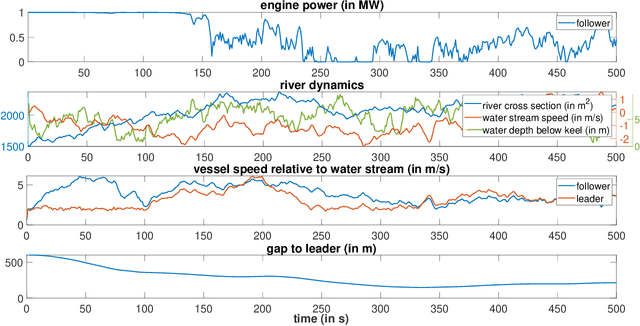

Deep reinforcement learning (RL) has increasingly been applied to the design of car-following models in recent years. This study investigates the feasibility of RL-based vehicle-following under complex vehicle dynamics and strong environmental disturbances. As a use case, we developed a vessel-following model for inland waterways based on realistic vessel dynamics, which accounts for environmental influences such as varying stream velocity and river profile. We extracted natural vessel behavior from anonymized AIS data to formulate a reward function that reflects a realistic driving style alongside comfortable and safe navigation. Aiming at high generalization capabilities, we propose an RL training environment that uses stochastic processes to model the leading vessel's trajectory and the river dynamics. To validate the trained model, we defined several scenarios not seen during training, including realistic vessel-following on the Middle Rhine. Our model demonstrated safe and comfortable driving in all scenarios, indicating excellent generalization abilities. Furthermore, traffic oscillations were effectively dampened when the trained model was deployed on a sequence of following vessels.
