Variational inference with a quantum computer


Mar 11, 2021

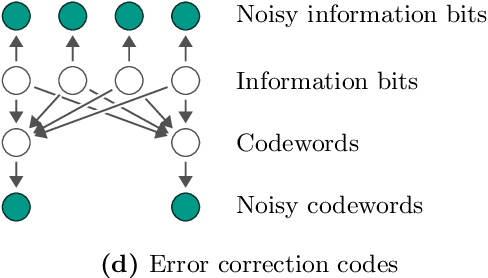

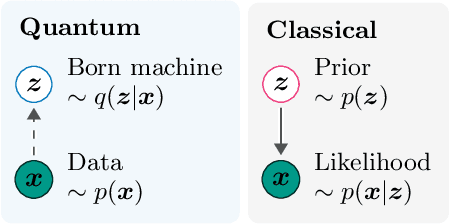

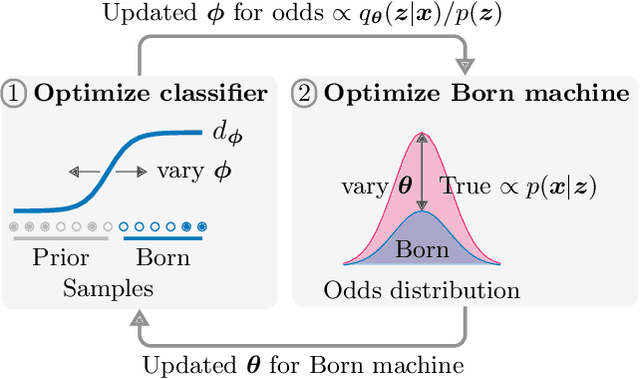

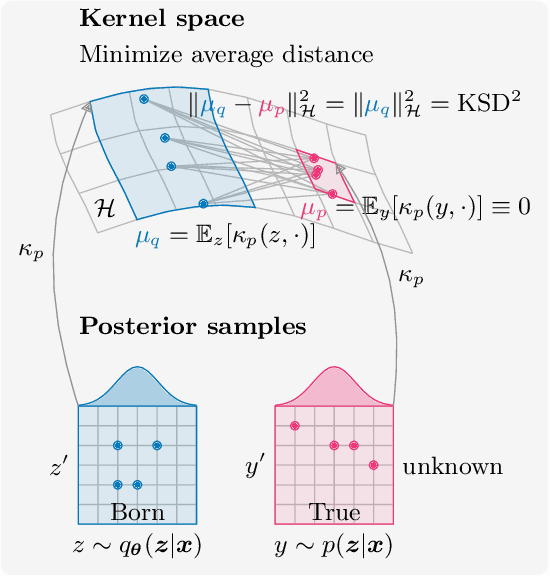

Inference is the task of drawing conclusions about unobserved variables given observations of related variables. Applications range from identifying diseases from symptoms to classifying economic regimes from price movements. Unfortunately, performing exact inference is intractable in general. One alternative is variational inference, where a candidate probability distribution is optimized to approximate the posterior distribution over unobserved variables. A good approximation calls for a flexible and highly expressive candidate distribution. In this work, we propose quantum Born machines as variational distributions over discrete variables. To this end, we apply the framework of operator variational inference, adopting two specific realizations: one with an adversarial objective and one based on the kernelized Stein discrepancy. We demonstrate the approach numerically using examples of Bayesian networks, and implement an experiment on an IBM quantum computer. Our techniques enable efficient variational inference with distributions beyond those that are efficiently representable on a classical computer.
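To make the idea of a Born machine as a variational distribution concrete, the following is a minimal sketch and not the method of the paper. It classically simulates a two-qubit product state, reads off the Born-rule probabilities q(z) = |⟨z|ψ(θ)⟩|², and fits θ to a toy posterior. Several things here are assumptions made for illustration: the posterior values are hypothetical, the circuit is a bare layer of RY rotations, and the objective is the exact reverse KL divergence minimized by finite-difference gradient descent. The paper instead uses an adversarial objective and the kernelized Stein discrepancy, which work from samples rather than from explicit probabilities.

```python
import numpy as np

def born_probs(thetas):
    """Born-rule probabilities of a product state of RY-rotated qubits."""
    state = np.array([1.0])
    for t in thetas:
        qubit = np.array([np.cos(t / 2), np.sin(t / 2)])  # RY(t)|0>
        state = np.kron(state, qubit)
    return np.abs(state) ** 2  # q(z) = |<z|psi(theta)>|^2

def reverse_kl(q, p, eps=1e-12):
    """KL(q || p); eps guards against log(0)."""
    return float(np.sum(q * (np.log(q + eps) - np.log(p + eps))))

def fit(posterior, thetas, lr=0.2, steps=200, h=1e-5):
    """Gradient descent on KL(q_theta || posterior) with central differences."""
    thetas = np.array(thetas, dtype=float)
    for _ in range(steps):
        grad = np.zeros_like(thetas)
        for i in range(len(thetas)):
            shift = np.zeros_like(thetas)
            shift[i] = h
            up = reverse_kl(born_probs(thetas + shift), posterior)
            down = reverse_kl(born_probs(thetas - shift), posterior)
            grad[i] = (up - down) / (2 * h)
        thetas -= lr * grad
    return thetas

# Hypothetical posterior over two binary latent variables (z = 00, 01, 10, 11).
posterior = np.array([0.1, 0.2, 0.3, 0.4])
init = [0.5, 0.5]
fitted = fit(posterior, init)
print("KL before:", reverse_kl(born_probs(init), posterior))
print("KL after: ", reverse_kl(born_probs(fitted), posterior))
```

Because this circuit has no entangling gates, it can only represent independent variables, so the fitted KL stays slightly above zero for a correlated posterior. Computing the full probability vector also costs memory exponential in the number of qubits, which is why sample-based objectives are the natural fit when the distribution comes from quantum hardware.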
