Using Synthetic Corruptions to Measure Robustness to Natural Distribution Shifts

Jul 26, 2021

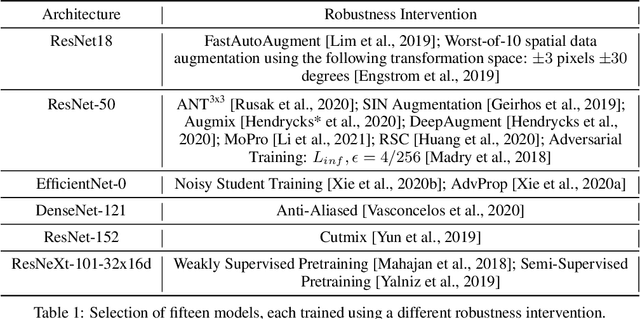

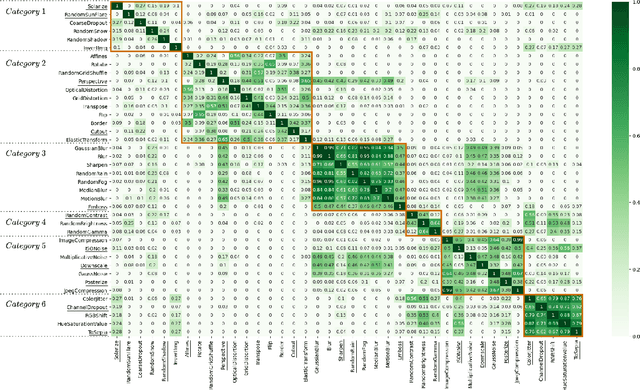

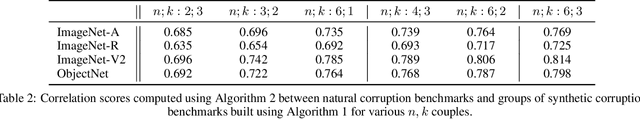

Synthetic corruptions gathered into a benchmark are frequently used to measure neural network robustness to distribution shifts. However, robustness on synthetic corruption benchmarks is not always predictive of robustness to the distribution shifts encountered in real-world applications. In this paper, we propose a methodology for building synthetic corruption benchmarks whose robustness estimates correlate better with robustness to real-world distribution shifts. Using the overlapping criterion, we split synthetic corruptions into categories that help to better understand neural network robustness. Based on these categories, we identify three parameters that matter when constructing a corruption benchmark: the number of represented categories, the balance among categories, and the size of the benchmark. Applying the proposed methodology, we build a new benchmark called ImageNet-Syn2Nat to predict image classifier robustness.
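To make the notion of a benchmark being "more correlated" with real-world robustness concrete, the following minimal sketch scores each model on a candidate benchmark and correlates those scores with accuracy under a natural shift. It rests on several assumptions that do not come from the paper: the Pearson coefficient is used as the correlation measure, the benchmark score is a plain mean of per-corruption accuracies, and the model names, corruption names, and accuracy values are invented placeholders rather than reported results.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def benchmark_score(per_corruption_acc, benchmark):
    """Mean accuracy of one model over the corruptions in `benchmark`."""
    return sum(per_corruption_acc[c] for c in benchmark) / len(benchmark)


# Hypothetical per-model accuracies (placeholders, NOT results from the paper).
synthetic_acc = {
    "model_a": {"blur": 0.52, "noise": 0.40, "contrast": 0.61, "rotate": 0.55},
    "model_b": {"blur": 0.58, "noise": 0.62, "contrast": 0.60, "rotate": 0.57},
    "model_c": {"blur": 0.66, "noise": 0.45, "contrast": 0.70, "rotate": 0.68},
}
natural_acc = {"model_a": 0.41, "model_b": 0.44, "model_c": 0.53}

# Two hypothetical candidate benchmarks built from the same corruption pool.
candidates = {
    "noise_only": ["noise"],
    "mixed": ["blur", "noise", "contrast", "rotate"],
}

models = sorted(natural_acc)
for name, benchmark in candidates.items():
    scores = [benchmark_score(synthetic_acc[m], benchmark) for m in models]
    shifts = [natural_acc[m] for m in models]
    print(name, round(pearson(scores, shifts), 3))
```

Under this reading, a candidate benchmark whose scores correlate more strongly with the natural-shift accuracies across models would be the better predictor; a rank correlation could be substituted for `pearson` if only model ordering matters.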
