Using Monocular Vision and Human Body Priors for AUVs to Autonomously Approach Divers

Paper and Code

Nov 05, 2021

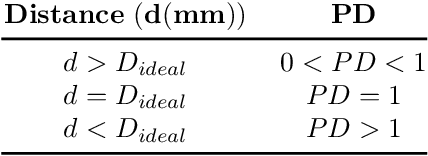

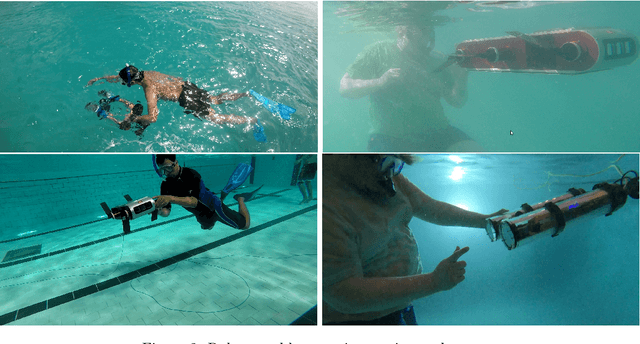

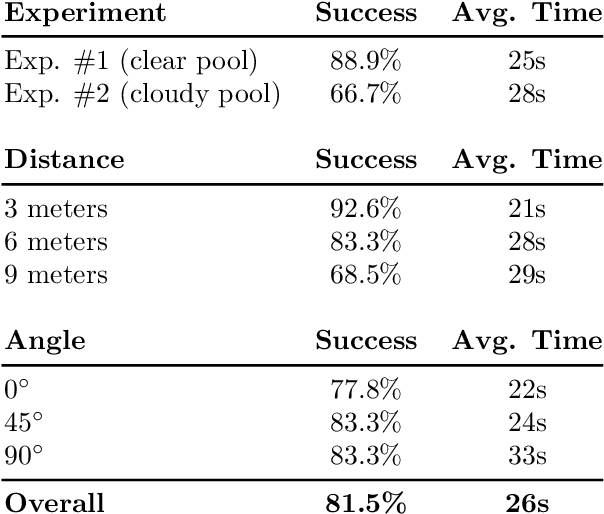

Direct communication between humans and autonomous underwater vehicles (AUVs) is a relatively underexplored area in human-robot interaction (HRI) research, although many tasks (e.g., surveillance, inspection, and search-and-rescue) require close diver-robot collaboration. Many core functionalities in this domain need further study to improve robotic capabilities for ease of interaction. One of these is the challenge of autonomous robots approaching and positioning themselves relative to divers to initiate and facilitate interactions. Suboptimal AUV positioning can lead to poor-quality interaction and impose excessive cognitive and physical load on divers. In this paper, we introduce a novel method for AUVs to autonomously navigate and achieve diver-relative positioning to begin interaction. Our method is based only on monocular vision, requires no global localization, and is computationally efficient. We present our algorithm along with an implementation on board both a simulated and a physical AUV, and evaluate it extensively in closed-water tests in a controlled pool. Analysis of our results shows that the proposed monocular vision-based algorithm performs reliably and efficiently while operating entirely on board the AUV.
