Update Aware Device Scheduling for Federated Learning at the Wireless Edge


Jan 28, 2020

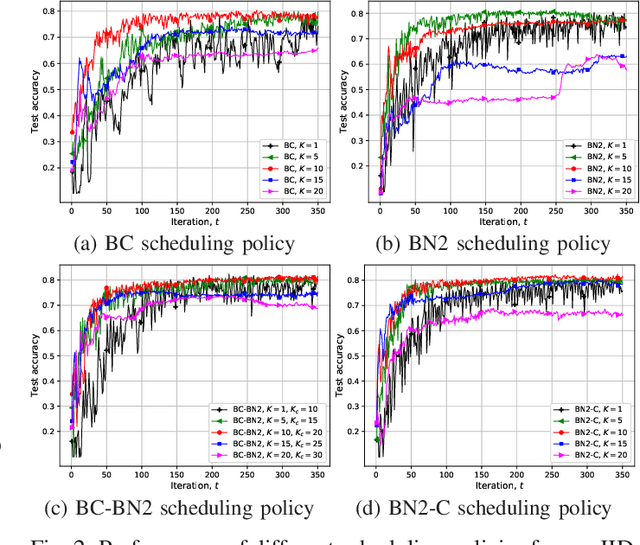

We study federated learning (FL) at the wireless edge, where power-limited devices with local datasets train a joint model with the help of a remote parameter server (PS). We assume that the devices are connected to the PS through a bandwidth-limited shared wireless channel. At each iteration of FL, a subset of the devices is scheduled to transmit their local model updates to the PS over orthogonal channel resources. We design novel scheduling policies that decide on the subset of devices to transmit at each round based not only on their channel conditions, but also on the significance of their local model updates. Numerical results show that the proposed scheduling policy provides better long-term performance than scheduling policies based on either of the two metrics alone. We also observe that when the data is independent and identically distributed (i.i.d.) across devices, selecting a single device at each round provides the best performance, whereas when the data distribution is non-i.i.d., more devices should be scheduled.
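To make the idea of combining the two metrics concrete, the following is a minimal sketch of one possible scheduling rule, not the policy defined in the paper. It assumes that the significance of an update is measured by its L2 norm and that the two metrics are combined in two stages: shortlist the devices with the strongest channels, then schedule those on the shortlist with the largest updates. The names `update_norm`, `schedule_devices`, `shortlist_size`, and `num_scheduled` are hypothetical and chosen only for illustration.

```python
import math
from typing import List, Sequence


def update_norm(update: Sequence[float]) -> float:
    """L2 norm of a local update (assumed proxy for its significance)."""
    return math.sqrt(sum(x * x for x in update))


def schedule_devices(
    channel_gains: Sequence[float],
    updates: Sequence[Sequence[float]],
    shortlist_size: int,
    num_scheduled: int,
) -> List[int]:
    """Return the indices of the devices scheduled in one round.

    Illustrative two-stage rule (an assumption, not the paper's policy):
    1. keep the `shortlist_size` devices with the largest channel gains;
    2. among those, pick the `num_scheduled` devices with the largest
       update norms.
    """
    if len(channel_gains) != len(updates):
        raise ValueError("one update is required per device")
    if not 0 < num_scheduled <= shortlist_size <= len(channel_gains):
        raise ValueError(
            "need 0 < num_scheduled <= shortlist_size <= number of devices"
        )

    devices = range(len(channel_gains))
    # Stage 1: shortlist by channel quality.
    shortlist = sorted(
        devices, key=lambda i: channel_gains[i], reverse=True
    )[:shortlist_size]
    # Stage 2: rank the shortlist by update significance.
    chosen = sorted(
        shortlist, key=lambda i: update_norm(updates[i]), reverse=True
    )[:num_scheduled]
    return sorted(chosen)


# Toy round with four devices (made-up numbers).
gains = [0.9, 0.2, 0.7, 0.5]
local_updates = [[0.1, 0.1], [3.0, 4.0], [1.0, 1.0], [0.5, 2.0]]
scheduled = schedule_devices(
    gains, local_updates, shortlist_size=3, num_scheduled=2
)
print(scheduled)  # [2, 3]
```

In this toy round, device 1 has the largest update but the weakest channel, so it never reaches the shortlist. Setting `shortlist_size` to the number of devices reduces the rule to scheduling on update norm alone, and setting `num_scheduled` equal to `shortlist_size` reduces it to scheduling on channel gain alone.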
