UnweaveNet: Unweaving Activity Stories

Paper and Code

Dec 19, 2021

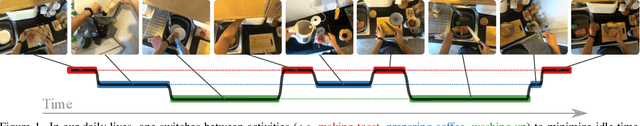

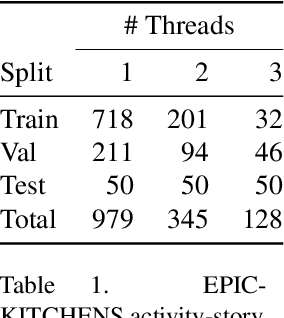

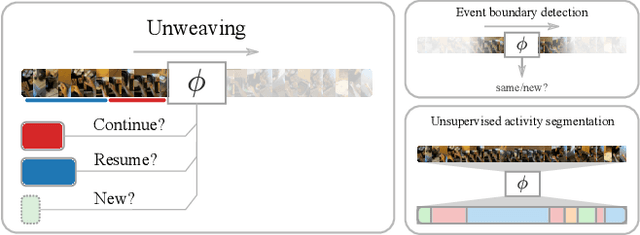

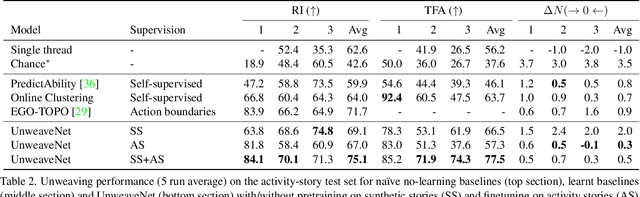

Our lives can be seen as a complex weaving of activities; we switch from one activity to another, to maximise our achievements or in reaction to demands placed upon us. Observing a video of unscripted daily activities, we parse the video into its constituent activity threads through a process we call unweaving. To accomplish this, we introduce a video representation, called a thread bank, that explicitly captures activity threads, along with a neural controller capable of detecting goal changes and resumptions of past activities; together these form UnweaveNet. We train and evaluate UnweaveNet on sequences from the unscripted egocentric dataset EPIC-KITCHENS. We propose and showcase the efficacy of pretraining UnweaveNet in a self-supervised manner.
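To make the idea of unweaving concrete, the following is a minimal illustrative sketch, not the UnweaveNet implementation. It assumes each clip is already summarised by a feature vector, and it replaces the learned neural controller with a hypothetical cosine-similarity rule (`toy_controller`) and a hand-picked `threshold`. The names `ThreadBank`, `toy_controller`, and `unweave` are illustrative and do not come from the paper.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ThreadBank:
    """Toy thread bank: each thread is a list of (clip_index, feature) pairs."""
    threads: list = field(default_factory=list)

    def summary(self, thread_id):
        # Mean feature of the clips in a thread (an assumption; the paper
        # uses a learned representation instead).
        feats = [f for _, f in self.threads[thread_id]]
        dim = len(feats[0])
        return [sum(f[d] for f in feats) / len(feats) for d in range(dim)]

    def assign(self, clip_index, feature, thread_id):
        # thread_id None means a goal change: start a new thread.
        if thread_id is None:
            self.threads.append([(clip_index, feature)])
            return len(self.threads) - 1
        # Otherwise continue or resume an existing thread.
        self.threads[thread_id].append((clip_index, feature))
        return thread_id


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def toy_controller(bank, feature, threshold=0.8):
    """Hypothetical stand-in for the neural controller.

    Returns the id of the most similar existing thread, or None when no
    thread reaches the similarity threshold (treated as a new activity).
    """
    best_id, best_sim = None, threshold
    for thread_id in range(len(bank.threads)):
        sim = cosine(bank.summary(thread_id), feature)
        if sim >= best_sim:
            best_id, best_sim = thread_id, sim
    return best_id


def unweave(features, threshold=0.8):
    """Assign every clip in a sequence to an activity thread."""
    bank = ThreadBank()
    labels = []
    for i, feature in enumerate(features):
        decision = toy_controller(bank, feature, threshold)
        labels.append(bank.assign(i, feature, decision))
    return labels


if __name__ == "__main__":
    clips = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
    print(unweave(clips))
```

In this toy sequence the third clip starts a second thread, and the fourth clip returns to the first one, which mirrors the "resuming of past activities" case the abstract describes. A learned controller would make this decision from the video itself rather than from a fixed similarity threshold.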
