Unsupervised Video Interpolation by Learning Multilayered 2.5D Motion Fields

Apr 21, 2022

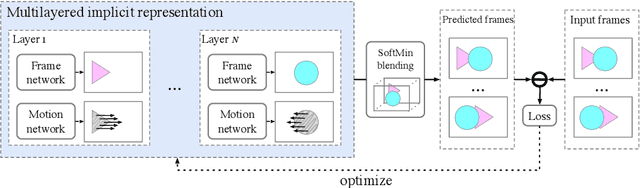

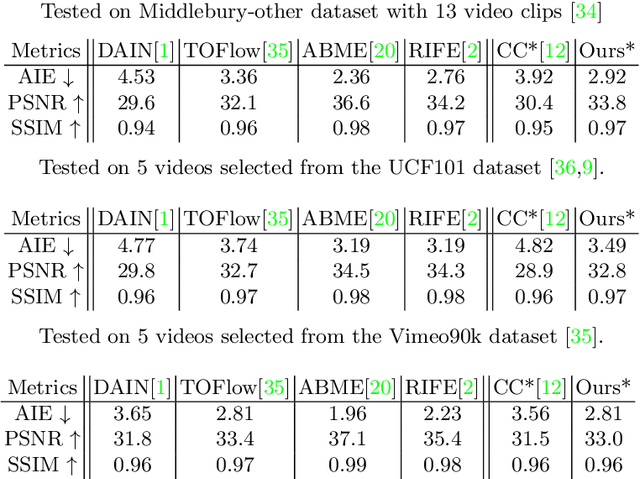

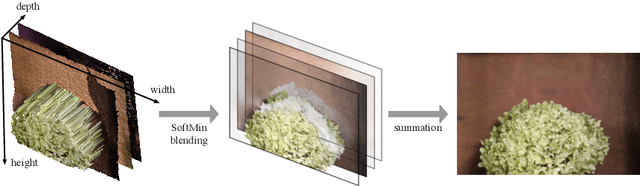

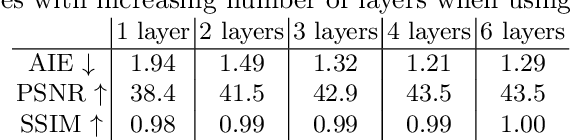

Video frame interpolation aims to increase the temporal resolution of a low frame-rate video by synthesizing novel frames between existing, temporally sparse frames. This paper presents a self-supervised approach to video frame interpolation that requires only a single video. We pose the video as a set of layers. Each layer is parameterized by two implicit neural networks: one learns a static frame and the other a time-varying motion field corresponding to the video dynamics. Together they represent an occlusion-free subset of the scene with a pseudo-depth channel. To model inter-layer occlusions, all layers are lifted to 2.5D space so that frontal layers occlude distant ones. This is done by assigning each layer a depth channel, which we call "pseudo-depth", whose partial order defines the occlusion between layers. The pseudo-depths are converted to visibility values through a fully differentiable SoftMin function, so that closer layers are more visible than layers farther away. We parameterize the video motions by solving an ordinary differential equation (ODE) defined on a time-varying neural velocity field, which guarantees valid motions. This implicit neural representation learns the video as a space-time continuum, allowing frame interpolation at any temporal resolution. We demonstrate the effectiveness of our method on real-world datasets, where it achieves performance comparable to state-of-the-art methods that require ground-truth labels for training.
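The two mechanisms described above, SoftMin visibility over pseudo-depths and motion obtained by integrating a velocity field, can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not give the exact SoftMin form or the ODE solver, so the `temperature` parameter, the forward-Euler scheme, the scalar per-pixel values, and all function names here are assumptions made for illustration. The neural networks are replaced by plain Python values and callables.

```python
import math


def softmin_visibility(pseudo_depths, temperature=1.0):
    """Turn per-layer pseudo-depths at one pixel into visibility weights.

    A smaller pseudo-depth (closer layer) gets a larger weight, and the
    weights sum to 1. `temperature` is an assumed sharpness parameter.
    """
    # Shift by the minimum for numerical stability; the result is unchanged.
    d_min = min(pseudo_depths)
    exps = [math.exp(-(d - d_min) / temperature) for d in pseudo_depths]
    total = sum(exps)
    return [e / total for e in exps]


def composite(colors, pseudo_depths, temperature=1.0):
    """Blend per-layer scalar colors at one pixel by SoftMin visibility."""
    weights = softmin_visibility(pseudo_depths, temperature)
    return sum(w * c for w, c in zip(weights, colors))


def integrate_motion(x0, velocity, t_end, steps=100):
    """Forward-Euler solve of dx/dt = velocity(x, t) from t = 0 to t_end.

    `velocity` stands in for the time-varying neural velocity field.
    """
    x, t = x0, 0.0
    dt = t_end / steps
    for _ in range(steps):
        x = x + dt * velocity(x, t)
        t += dt
    return x


if __name__ == "__main__":
    # Two layers at one pixel: the closer layer (depth 0.2) dominates.
    print(softmin_visibility([0.2, 0.8], temperature=0.1))
    print(composite([1.0, 0.0], [0.2, 0.8], temperature=0.1))
    # A point moving with a toy constant velocity, queried at t = 0.5.
    print(integrate_motion(0.0, lambda x, t: 1.0, t_end=0.5))
```

Because `t_end` can be any real value, the same integration yields a position at any intermediate time, which is what makes interpolation at arbitrary temporal resolution possible in this kind of representation. Lowering `temperature` pushes the blend toward a hard "nearest layer wins" occlusion while keeping it differentiable.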
