Unsupervised Learning of 3D Scene Flow with 3D Odometry Assistance


Sep 11, 2022

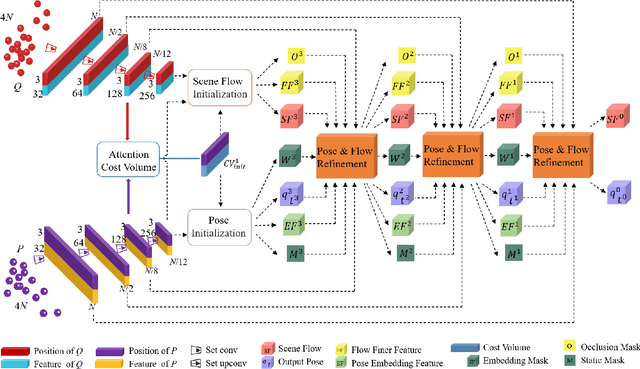

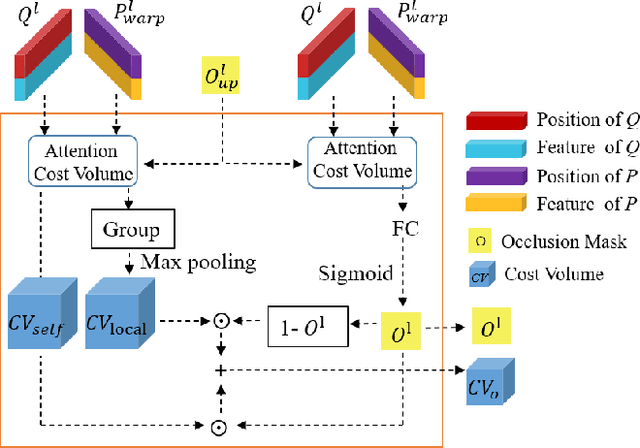

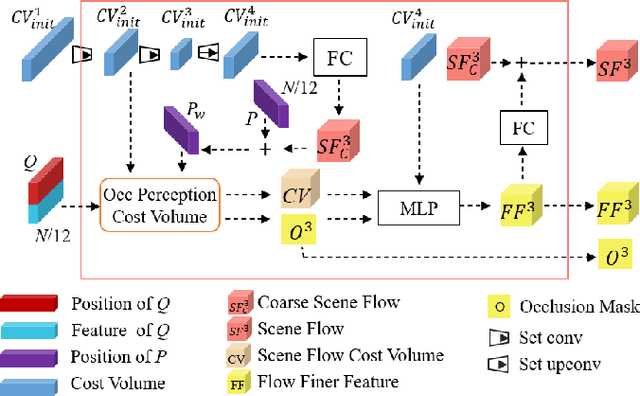

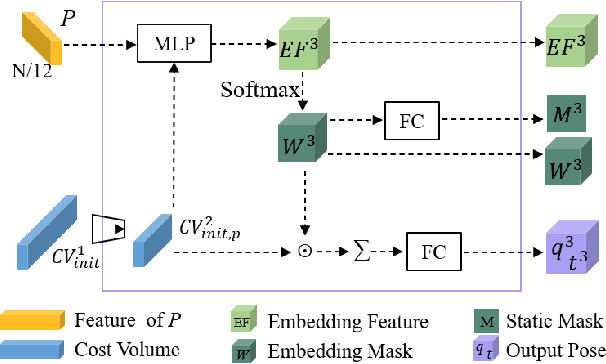

Scene flow represents the 3D motion of each point in a scene, explicitly describing the distance and direction of each point's movement. Scene flow estimation is used in applications such as autonomous driving, activity recognition, and virtual reality. Because annotating real-world data with ground-truth scene flow is challenging, no real-world dataset provides a large amount of data with ground truth for scene flow estimation. Many works therefore pre-train their networks on synthesized data and fine-tune them on real-world LiDAR data. Unlike previous unsupervised methods for learning scene flow in point clouds, we propose to use odometry information to assist the unsupervised learning of scene flow, and we train our network on real-world LiDAR data. Supervised odometry provides a more accurate shared cost volume for scene flow. In addition, the proposed network has mask-weighted warp layers that produce a more accurate predicted point cloud. The warp operation applies an estimated pose transformation or scene flow to a source point cloud to obtain a predicted point cloud, and it is the key to refining scene flow from coarse to fine. When performing warp operations, points in different states use different weights for the pose transformation and the scene flow transformation. We classify the states of points as static, dynamic, and occluded: static masks separate static points from dynamic points, and occlusion masks identify occluded points. In a mask-weighted warp layer, the static masks and occlusion masks serve as weights when performing the warp operation. Ablation experiments demonstrate the effectiveness of these designs. The experimental results show the promise of an odometry-assisted unsupervised learning method for 3D scene flow on real-world data.
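The mask-weighted warp described above can be illustrated with a minimal NumPy sketch. The abstract does not give the exact weighting formula, so everything here is an assumption made for illustration: the function name `mask_weighted_warp`, the soft masks in [0, 1], the linear blend between the pose-warped and flow-warped points, and the choice to fall back to the pose transformation for occluded points are hypothetical and not taken from the paper.

```python
import numpy as np


def mask_weighted_warp(points, rotation, translation, flow,
                       static_mask, visible_mask):
    """Warp a source point cloud using mask-weighted pose and scene flow.

    Hypothetical sketch, not the paper's layer.
    points:       (N, 3) source point cloud
    rotation:     (3, 3) estimated rotation from odometry
    translation:  (3,)   estimated translation from odometry
    flow:         (N, 3) estimated per-point scene flow
    static_mask:  (N,)   weights in [0, 1]; 1 means static, 0 means dynamic
    visible_mask: (N,)   weights in [0, 1]; 1 means visible, 0 means occluded
    """
    # Rigid warp: every point moves with the estimated ego-motion.
    pose_warped = points @ rotation.T + translation
    # Non-rigid warp: every point moves by its own estimated flow vector.
    flow_warped = points + flow

    # Assumption: static points trust the pose, dynamic points trust the flow.
    w_static = static_mask[:, None]
    blended = w_static * pose_warped + (1.0 - w_static) * flow_warped

    # Assumption: occluded points have unreliable flow, so use the pose warp.
    w_visible = visible_mask[:, None]
    return w_visible * blended + (1.0 - w_visible) * pose_warped


if __name__ == "__main__":
    pts = np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]])
    predicted = mask_weighted_warp(
        pts,
        rotation=np.eye(3),
        translation=np.array([1.0, 0.0, 0.0]),
        flow=np.array([[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]),
        static_mask=np.array([1.0, 0.0]),   # first point static, second dynamic
        visible_mask=np.array([1.0, 1.0]),  # both points visible
    )
    print(predicted)
```

In this sketch the first point follows the ego-motion only and the second follows its scene flow only; intermediate mask values would interpolate between the two predictions.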
