Unsupervised domain adaptation semantic segmentation of high-resolution remote sensing imagery with invariant domain-level context memory

Aug 16, 2022

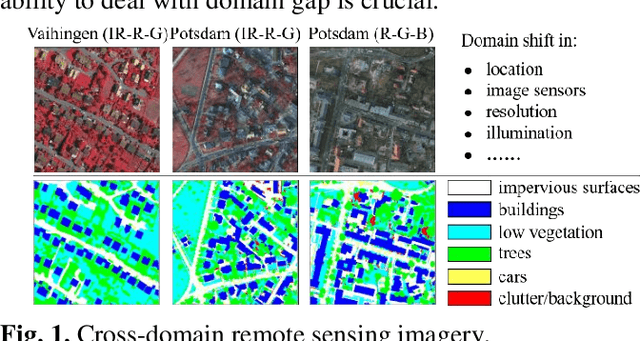

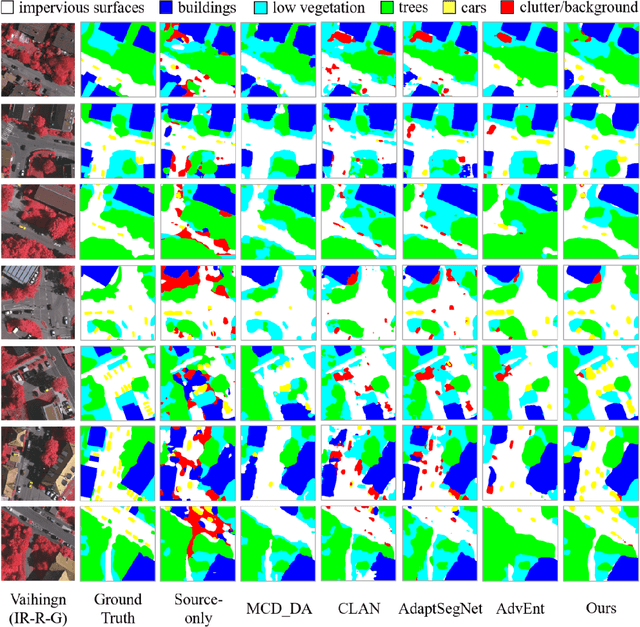

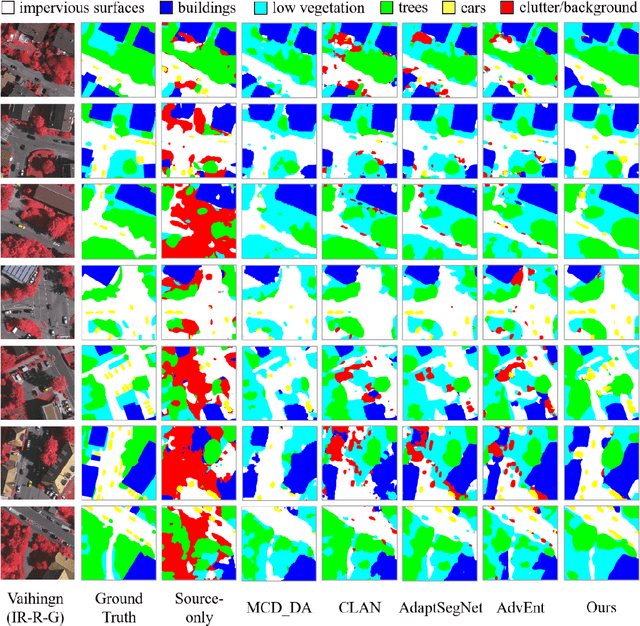

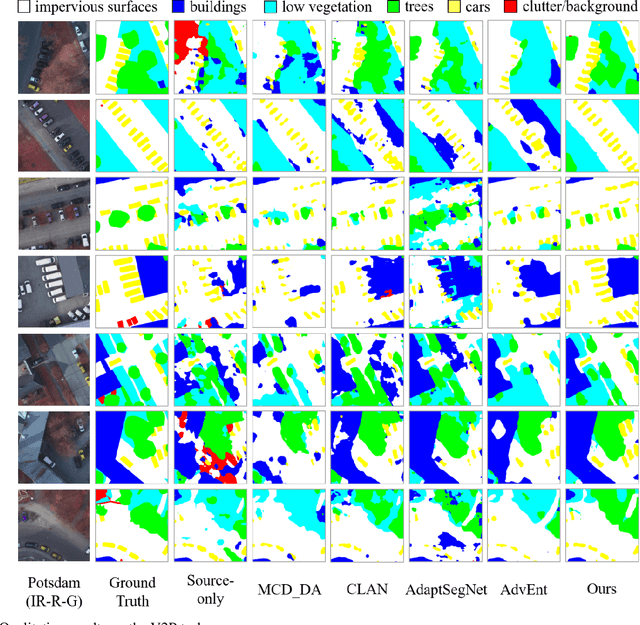

Semantic segmentation is a key technique for the automatic interpretation of high-resolution remote sensing (HRS) imagery and has drawn much attention in the remote sensing community. Deep convolutional neural networks (DCNNs) have been successfully applied to HRS imagery semantic segmentation owing to their hierarchical representation ability. However, their heavy dependence on large amounts of densely annotated training data and their sensitivity to variation in the data distribution severely restrict the potential application of DCNNs to the semantic segmentation of HRS imagery. This study proposes a novel unsupervised domain adaptation semantic segmentation network (MemoryAdaptNet) for HRS imagery. MemoryAdaptNet constructs an output-space adversarial learning scheme to bridge the distribution discrepancy between the source domain and the target domain and to reduce the influence of domain shift. Specifically, because the features obtained from adversarial learning tend to represent only the variant features of the current, limited inputs, we embed an invariant feature memory module to store invariant domain-level context information. A category attention-driven invariant domain-level context aggregation module integrates this stored context with the current pseudo-invariant features to further augment the pixel representations. An entropy-based pseudo-label filtering strategy updates the memory module with high-confidence pseudo-invariant features of the current target images. Extensive experiments on three cross-domain tasks indicate that the proposed MemoryAdaptNet substantially outperforms state-of-the-art methods.
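The entropy-based pseudo-label filtering step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `entropy_filter` and `class_mean_features`, the normalised-entropy threshold of 0.5, and the choice to summarise confident pixels as per-class mean features are all assumptions made for illustration. The abstract does not specify how the memory module is actually updated.

```python
import numpy as np


def entropy_filter(probs, threshold=0.5):
    """Select confident pixels by prediction entropy (illustrative only).

    probs: array of shape (C, H, W) holding softmax probabilities
           for one target-domain image.
    threshold: assumed cut-off on entropy normalised to [0, 1].
    Returns (pseudo_labels, confident), both of shape (H, W).
    """
    eps = 1e-12  # avoids log(0)
    num_classes = probs.shape[0]
    entropy = -np.sum(probs * np.log(probs + eps), axis=0)
    entropy = entropy / np.log(num_classes)  # normalise by the maximum entropy
    pseudo_labels = np.argmax(probs, axis=0)
    confident = entropy < threshold
    return pseudo_labels, confident


def class_mean_features(features, pseudo_labels, confident, num_classes):
    """Average the features of confident pixels per pseudo-label class.

    features: array of shape (D, H, W).
    Returns (means, present): means has shape (num_classes, D) and
    present marks which classes had at least one confident pixel.
    """
    dim = features.shape[0]
    means = np.zeros((num_classes, dim))
    present = np.zeros(num_classes, dtype=bool)
    for c in range(num_classes):
        mask = confident & (pseudo_labels == c)
        if mask.any():
            # Boolean indexing over (H, W) yields an array of shape (D, N).
            means[c] = features[:, mask].mean(axis=1)
            present[c] = True
    return means, present
```

In this sketch, only classes flagged in `present` would contribute to a memory update, so low-confidence target pixels never reach the stored domain-level context.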
